10 Biggest Computer Vision Trends to Watch in 2025

In 2025, key computer vision trends include edge computing for faster processing, 3D models for detailed visualizations, and advanced data annotation for improved accuracy.

Computer vision is an interdisciplinary field that studies how computers interpret and analyze real-world images and video. Thanks to advances in artificial intelligence and machine learning, it has grown remarkably in recent years.

We can expect computer vision technology to keep developing and expanding as we get closer to 2025, driven by rising demand for automation and the growing use of visual data across a range of industries.

This article discusses the top 10 computer vision trends that are predicted to have an impact on the market in 2025.

We will cover the most intriguing and promising developments shaping the future of computer vision, including new applications in manufacturing, retail, and healthcare, as well as new hardware and algorithms.

Whether you are a researcher, a developer, or simply interested in the newest technological advances, this blog will give you useful insight into the computer vision trends of 2025.

1. Edge computing for faster, cheaper, and more efficient processing:

Edge computing refers to the practice of processing and storing data close to the source or end-user device, rather than transporting it to a centralized cloud server. This strategy can cut bandwidth costs while increasing the speed and efficiency of data processing. In computer vision, edge computing makes it possible to analyze images and make decisions in real time, without expensive hardware or a steady internet connection.
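To make the bandwidth point concrete, here is a minimal sketch in Python. It assumes a toy "detector" (a simple brightness threshold standing in for a real vision model) that runs on the device and sends only a small summary upstream. The function names, the threshold, and the frame are all hypothetical and chosen purely for illustration.

```python
import json


def summarize_frame(frame, threshold=200):
    """Run a toy on-device 'detector' and return a compact result.

    `frame` is a grayscale image given as a list of rows of 0-255 ints.
    The brightness threshold is an assumption standing in for a real model.
    """
    total = sum(len(row) for row in frame)
    if total == 0:
        return {"bright_ratio": 0.0, "alert": False}
    bright = sum(1 for row in frame for px in row if px >= threshold)
    ratio = bright / total
    return {"bright_ratio": round(ratio, 4), "alert": ratio > 0.05}


def payload_sizes(frame):
    """Compare bytes sent when uploading the raw frame vs. the local summary."""
    raw_bytes = sum(len(row) for row in frame)  # one byte per grayscale pixel
    summary_bytes = len(json.dumps(summarize_frame(frame)).encode("utf-8"))
    return raw_bytes, summary_bytes


# A hypothetical 640x480 frame with a bright 100x100 patch in one corner.
frame = [[255 if (y < 100 and x < 100) else 10 for x in range(640)]
         for y in range(480)]
raw, summary = payload_sizes(frame)
print(f"raw upload: {raw} bytes, edge summary: {summary} bytes")
```

The raw frame costs one byte per pixel to upload, while the summary is a few dozen bytes of JSON. A real deployment would replace the threshold with an actual model, but the trade-off has the same shape.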

2. 3D models for more accurate and detailed visualizations:

3D modeling, one of the most popular computer vision trends of 2025, creates digital models of real-world locations or objects, allowing for more precise and in-depth visualizations. It has applications in a number of fields, including architecture, industry, and entertainment. 3D models can help computer vision specialists visualize objects more precisely and in greater detail, improving comprehension and analysis.
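As a rough illustration of how a 3D model relates to a 2D view, the sketch below stores a cube as a list of vertices and projects each one onto an image plane with a simple pinhole camera model. The pinhole model, the focal length, and the cube itself are assumptions made for the example, not something a particular tool prescribes.

```python
def project_point(point, focal_length=1.0):
    """Project a 3D point (x, y, z) in camera coordinates onto a 2D image plane.

    Uses the basic pinhole relation u = f * x / z, v = f * y / z.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal_length * x / z, focal_length * y / z)


# A hypothetical cube: 8 vertices, with its faces at depths z=3 and z=5.
cube_vertices = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (3, 5)]
projected = [project_point(v) for v in cube_vertices]

for vertex, pixel in zip(cube_vertices, projected):
    print(vertex, "->", pixel)
```

Vertices that are farther from the camera land closer to the image center, which is the perspective effect a 3D visualization relies on.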

3. Data annotation capability for improved accuracy and efficiency:

Data annotation is the practice of labeling data so that machine learning algorithms can learn from it. It is essential for training image recognition models in computer vision. New tools and approaches for data annotation can improve the accuracy and efficiency of machine learning algorithms, resulting in more accurate outcomes and shorter development times.
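One common way to check annotation quality is to compare the boxes two annotators drew around the same object. The sketch below assumes a simple, hypothetical `BoxLabel` record and uses intersection over union (IoU) as the agreement measure. The 0.5 cutoff is an illustrative choice, not a standard every project uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxLabel:
    """A hypothetical bounding-box annotation in pixel coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: BoxLabel, b: BoxLabel) -> float:
    """Intersection over union of two boxes: 1.0 is identical, 0.0 is disjoint."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    inter = inter_w * inter_h
    return inter / (a.area() + b.area() - inter)


def annotators_agree(a: BoxLabel, b: BoxLabel, min_iou: float = 0.5) -> bool:
    """Two annotations agree if the class matches and the boxes overlap enough."""
    return a.label == b.label and iou(a, b) >= min_iou


first = BoxLabel("car", 0, 0, 10, 10)
second = BoxLabel("car", 5, 0, 15, 10)
print(f"IoU: {iou(first, second):.3f}, agree: {annotators_agree(first, second)}")
```

Pairs that fall below the cutoff can be routed back for review, which is one way better annotation tooling translates into more accurate training data.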

4. Natural language processing for more intuitive and user-friendly interfaces:

Natural language processing (NLP) is the study of how computers can comprehend and interpret human language. NLP can give computer vision systems more intuitive and user-friendly interfaces by letting users interact with image and video data through natural language instructions.

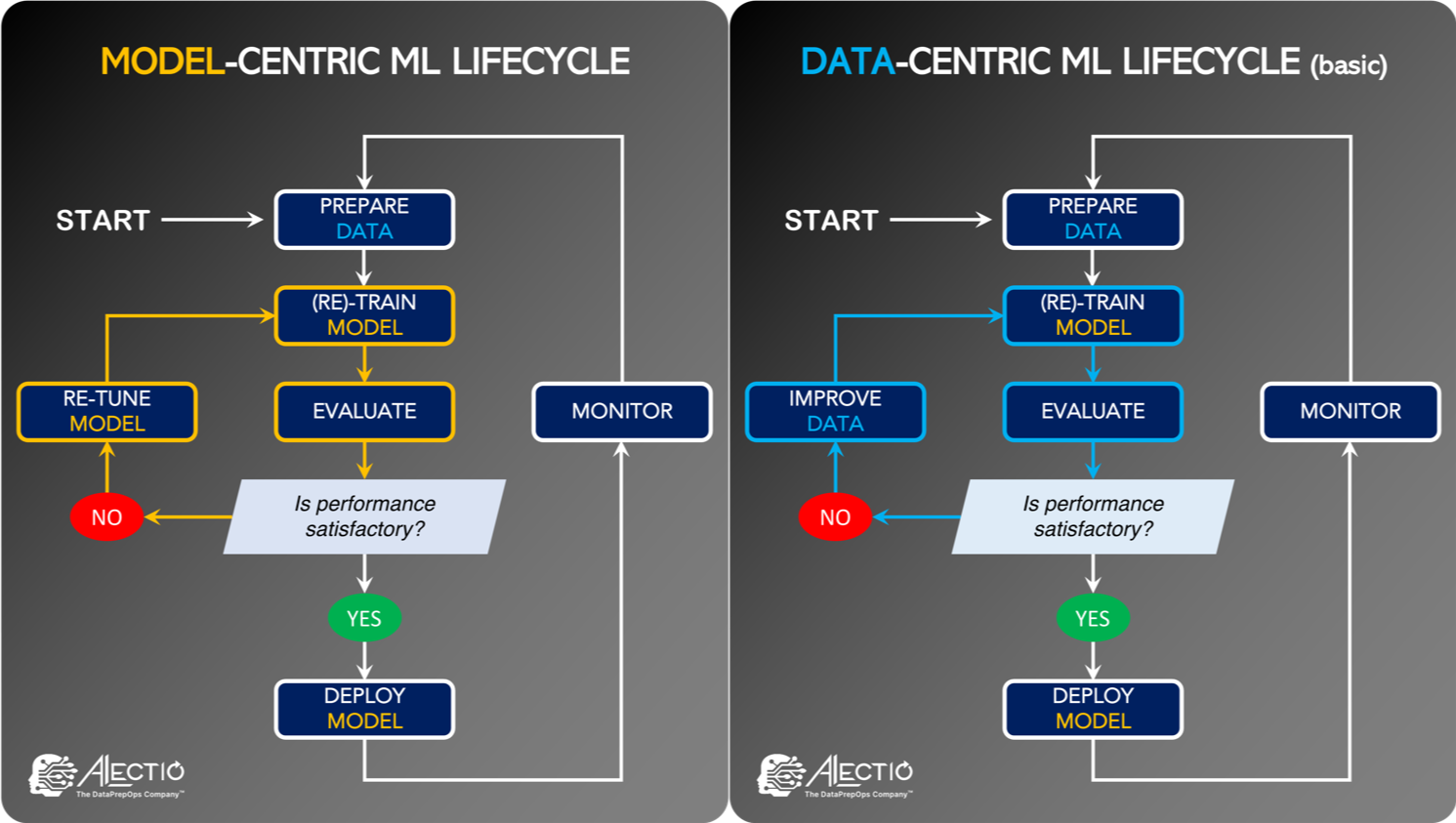

5. Model-centric to data-centric AI for more efficient and effective machine learning:

Machine learning has traditionally been model-centric, with an emphasis on creating and refining algorithms. However, there is a shift toward data-centric machine learning, which highlights the significance of diverse, high-quality data for model training. This approach can improve how well models understand and interpret visual data, as well as the efficiency and effectiveness of machine learning.
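A small example of what data-centric work can look like in practice: before touching the model, check the dataset for identical images that carry different labels. The sketch below is one possible check under simple assumptions (exact byte-level duplicates, a hypothetical `find_label_conflicts` helper, made-up sample data). It is not a complete data-quality pipeline.

```python
import hashlib
from collections import defaultdict


def find_label_conflicts(samples):
    """Return {content_hash: sorted labels} for images labeled inconsistently.

    `samples` is an iterable of (image_bytes, label) pairs. Only exact
    byte-for-byte duplicates are detected; near-duplicates are out of scope.
    """
    labels_by_digest = defaultdict(set)
    for image_bytes, label in samples:
        digest = hashlib.sha256(image_bytes).hexdigest()
        labels_by_digest[digest].add(label)
    return {
        digest: sorted(labels)
        for digest, labels in labels_by_digest.items()
        if len(labels) > 1
    }


# Hypothetical dataset: the same image appears twice with different labels.
dataset = [
    (b"image-a-bytes", "cat"),
    (b"image-b-bytes", "dog"),
    (b"image-a-bytes", "dog"),
]
conflicts = find_label_conflicts(dataset)
print(f"{len(conflicts)} image(s) with conflicting labels")
```

Fixing conflicts like these improves the training signal without changing a single line of model code, which is the core idea behind the data-centric approach.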

6. Generative artificial intelligence for more creative and innovative applications:

Generative AI (GAI) refers to artificial intelligence (AI) systems that can produce new ideas or content from pre-existing data. GAI can enable creative and cutting-edge applications in computer vision, such as style transfer or the synthesis of images and videos.

7. The Metaverse for immersive and interactive experiences:

The term "metaverse" refers to a shared virtual environment where users can engage in fully immersive interactions with each other and with digital content. Computer vision technology may help make the Metaverse possible, opening up a variety of novel and interesting uses in entertainment, education, and other fields.

8. Medical imaging for improved diagnosis and treatment:

Medical imaging uses technologies such as X-rays, CT scans, and MRIs to diagnose and treat medical conditions. Computer vision can make medical imaging more accurate and effective, leading to better diagnosis and treatment.

9. Facial recognition and surveillance for enhanced security and safety:

Facial recognition is a technique that identifies and verifies a person based on their facial features. It can be used in many different fields, including security and surveillance. Computer vision makes facial identification more precise and efficient, which can improve security and safety.
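Verification is often framed as comparing two numeric "embeddings" of a face and accepting the match if they are similar enough. The sketch below assumes the embeddings already exist (here they are short, made-up vectors rather than the output of a real face model) and uses cosine similarity with an illustrative threshold of 0.8.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_u * norm_v)


def is_same_person(probe, enrolled, threshold=0.8):
    """Accept the match if the embeddings are similar enough.

    The threshold is a hypothetical value; real systems tune it to balance
    false accepts against false rejects.
    """
    return cosine_similarity(probe, enrolled) >= threshold


# Made-up 3-dimensional embeddings for illustration only.
enrolled = [0.10, 0.90, 0.40]
probe_same = [0.12, 0.88, 0.41]
probe_other = [0.90, 0.10, -0.30]

print("same person:", is_same_person(probe_same, enrolled))
print("other person:", is_same_person(probe_other, enrolled))
```

Where the threshold sits determines how strict the check is, which matters a great deal in security and surveillance settings.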

10. Falling computer vision costs are driven by higher computational efficiency, decreasing hardware costs, and new technologies:

Lastly, the cost of computer vision technologies is expected to keep declining due to improvements in processing efficiency, falling hardware costs, and emerging technologies like edge computing. This trend should make computer vision more accessible and affordable, spurring additional innovation and development.

Conclusion

In conclusion, computer vision is progressing quickly, and new trends and technological advancements are constantly appearing. There are numerous fascinating computer vision trends to watch in 2025 and the years ahead, ranging from edge computing and 3D modeling to NLP and GAI.

These developments could revolutionize a number of sectors, including entertainment, healthcare, and security. We can anticipate much more innovation and advancement in computer vision as costs continue to decline, bringing us closer to a time when visual data is processed and analyzed in real-time with unmatched precision and effectiveness.

To stay ahead of the competition, follow the latest computer vision trends with Labellerr!

FAQs

1. How to stay updated with the latest computer vision trends?

You can keep up with these changes by reading trade periodicals, going to conferences, and keeping an eye on new businesses in the field. You can also consider enrolling in online classes or coding boot camps to learn more about these technologies.

2. Why are computer vision trends significant?

A variety of industries, including entertainment, healthcare, and security, stand to benefit from these developments, making them crucial. They can increase security, save costs, and improve user experience. Investments in sustainable technology can also improve operational resilience and financial performance while opening up new growth opportunities.

3. What is Edge Computing?

Edge computing is a distributed computing paradigm that moves processing and data storage closer to where the data is produced and used, which shortens response times and conserves bandwidth. In computer vision, edge computing can lower latency and improve the speed and efficiency of image processing.

4. What are 3D models in computer vision?

A 3D model is a digital representation of an object or environment in three dimensions. In computer vision, 3D models provide more precise and detailed visualizations for a range of applications, including virtual reality, gaming, and architecture.

5. What is merged reality?

The combination of real life with virtual reality is referred to as merged reality. Merged reality is a technique used in computer vision to provide immersive experiences that incorporate both real-world and virtual objects and surroundings.

