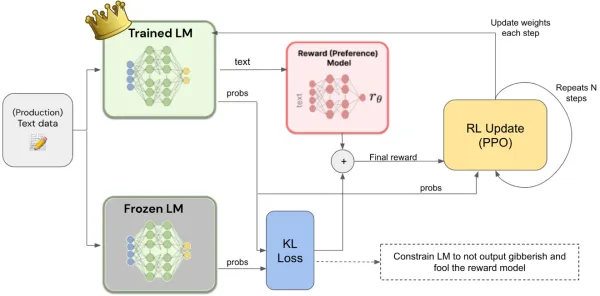

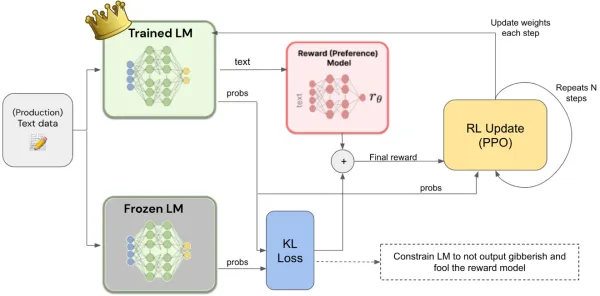

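Reinforcement Learning from Human Feedback (RLHF) is a machine learning technique that trains models using human judgments as the learning signal. Rather than relying only on fixed labeled targets, RLHF typically collects human preferences over model outputs (for example, which of two responses is better). It uses those preferences to train a reward model, then fine-tunes the original model with reinforcement learning to maximize that learned reward.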

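This approach is particularly useful for Large Language Models (LLMs). The behaviors we want from them, such as helpfulness, honesty, or an appropriate tone, are hard to express as the fixed input-output targets that traditional supervised learning requires. They are comparatively easy for people to judge by comparing outputs.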
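The step that turns human comparisons into a training signal can be sketched in a few lines. This is a minimal sketch that assumes the common formulation in which annotators pick the better of two responses and a reward model is fit with a Bradley-Terry pairwise loss, `-log(sigmoid(r_chosen - r_rejected))`. The linear reward model, the two-element feature vectors, and all function names are hypothetical stand-ins. In practice the reward model is itself a neural network, and a separate reinforcement learning step (for example PPO) then optimizes the LLM against it. This sketch omits that step.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sigmoid(x: float) -> float:
    """Numerically stable logistic function 1 / (1 + exp(-x))."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def reward(w: Vector, x: Vector) -> float:
    """Hypothetical linear reward model: r(x) = w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def preference_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry pairwise loss: -log(sigmoid(r_chosen - r_rejected))."""
    return softplus(-(r_chosen - r_rejected))


def train_reward_model(
    pairs: List[Tuple[Vector, Vector]], dim: int, lr: float = 0.1, epochs: int = 200
) -> List[float]:
    """Fit w by SGD so that human-preferred outputs receive higher reward."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # dLoss/dw = -sigmoid(-margin) * (chosen - rejected), so each
            # descent step moves w toward the preferred output's features.
            scale = sigmoid(-margin)
            for i in range(dim):
                w[i] += lr * scale * (chosen[i] - rejected[i])
    return w


if __name__ == "__main__":
    # Hypothetical feature vectors [relevance, toxicity]; in each pair a
    # (simulated) annotator preferred the first response over the second.
    pairs = [
        ([0.9, 0.1], [0.4, 0.2]),
        ([0.7, 0.0], [0.6, 0.8]),
        ([0.8, 0.3], [0.2, 0.1]),
    ]
    w = train_reward_model(pairs, dim=2)
    for chosen, rejected in pairs:
        r_c, r_r = reward(w, chosen), reward(w, rejected)
        print(f"chosen={r_c:.3f} rejected={r_r:.3f} loss={preference_loss(r_c, r_r):.3f}")
```

After training, each preferred response should score higher than the one it was compared against. The loss only ever sees reward differences, which is why pairwise comparisons are enough and no absolute "correct score" has to be labeled.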