Labellerr Integration with Google VertexAI for Seamless Data Annotation

Introduction

In the dynamic realm of machine learning and artificial intelligence, image annotation stands as a cornerstone in the development of robust and accurate models. In this complex domain, where every pixel holds significance, Labellerr emerges as a beacon of innovation with its diverse suite of annotation tools.

Tailored to meet the diverse needs of organizations, Labellerr goes beyond being a mere annotation platform – it is a comprehensive solution, offering a sophisticated array of features that not only streamline the annotation workflow but also foster unparalleled efficiency and collaboration among teams.

Whether it's the intricacies of manual annotation or the precision of automated processes, Labellerr provides a dynamic platform that adapts to the unique requirements of each annotation task, ensuring a seamless and effective journey from raw images to annotated datasets.

Understanding Google VertexAI

Google VertexAI is a unified platform that brings together data engineering, data science, and ML engineering workflows. It serves the individual needs of each discipline and also gives diverse teams a shared environment for collaboration.

The integration of a common tool set empowers teams to transcend silos, creating an environment where the collective intelligence of data engineers, data scientists, and ML engineers converges for optimal results. As organizations embark on their digital transformation journey, VertexAI becomes the cornerstone for scaling applications, leveraging the full spectrum of benefits offered by the expansive Google Cloud ecosystem.

While VertexAI undoubtedly boasts remarkable capabilities, it does present challenges, particularly in the domain of manual image annotation, a crucial aspect in tasks such as Image Object Detection. In this intricate process, the existing tools for manual annotation may fall short of the sophistication needed to meet the high standards of precision and efficiency demanded by modern machine learning endeavors.

Empowering Choices: AutoML and Custom Training in Vertex AI

Vertex AI offers two options for model training: AutoML and Custom Training. Here is a brief look at each:

1. AutoML - Simplifying Model Training without Code: AutoML, or Automated Machine Learning, is a powerful tool that democratizes the machine learning (ML) process by enabling individuals without extensive coding or data science expertise to train models effectively. This feature is versatile, accommodating various data types such as tabular, image, text, or video data.

What sets AutoML apart is its ability to streamline the entire process without requiring users to write complex code or prepare intricate data splits. This means that individuals with domain knowledge but limited programming skills can harness the power of ML, making it accessible to a broader audience. AutoML platforms typically incorporate sophisticated algorithms that automate tasks like feature engineering, model selection, and hyperparameter tuning.

These systems optimize the model for performance, allowing users to focus on the problem at hand rather than grappling with the technical intricacies of machine learning. The convenience and efficiency offered by AutoML make it an excellent choice for quick prototyping, exploring different datasets, or getting started with machine learning without an extensive technical background.

2. Custom Training - Fine-Tuning for Maximum Control: On the other end of the spectrum, custom training provides a more hands-on and granular approach to machine learning. This method gives users complete control over the training process. With custom training, individuals can write their own code, select their preferred machine learning framework (such as TensorFlow, PyTorch, or scikit-learn), and fine-tune hyperparameters to suit the specific nuances of their problem domain.

This level of control is invaluable for experienced data scientists and machine learning engineers who require precise adjustments or want to implement novel algorithms. Custom training is particularly advantageous when working with unique datasets, specialized tasks, or cutting-edge research where pre-packaged solutions may not be sufficient. It allows for intricate model architecture designs, custom loss functions, and other advanced techniques that might be essential for solving complex problems.

While it demands a deeper understanding of machine learning concepts and programming, custom training is the go-to choice for professionals seeking to push the boundaries of what's possible in the field.

In the next section, we walk through the practicalities of AutoML's Image Object Detection step by step. From data preparation to model training and evaluation, we'll guide you through using AutoML to harness the power of machine learning without the need for extensive coding or data science expertise.

Steps to Create Image Object Detection Project

The following steps outline how to create a dataset, import data, and train an Image Object Detection model using Google VertexAI. They also walk through model training, emphasizing key details like early stopping and incremental training. Users can also use an existing model as a base for further training by importing new data:

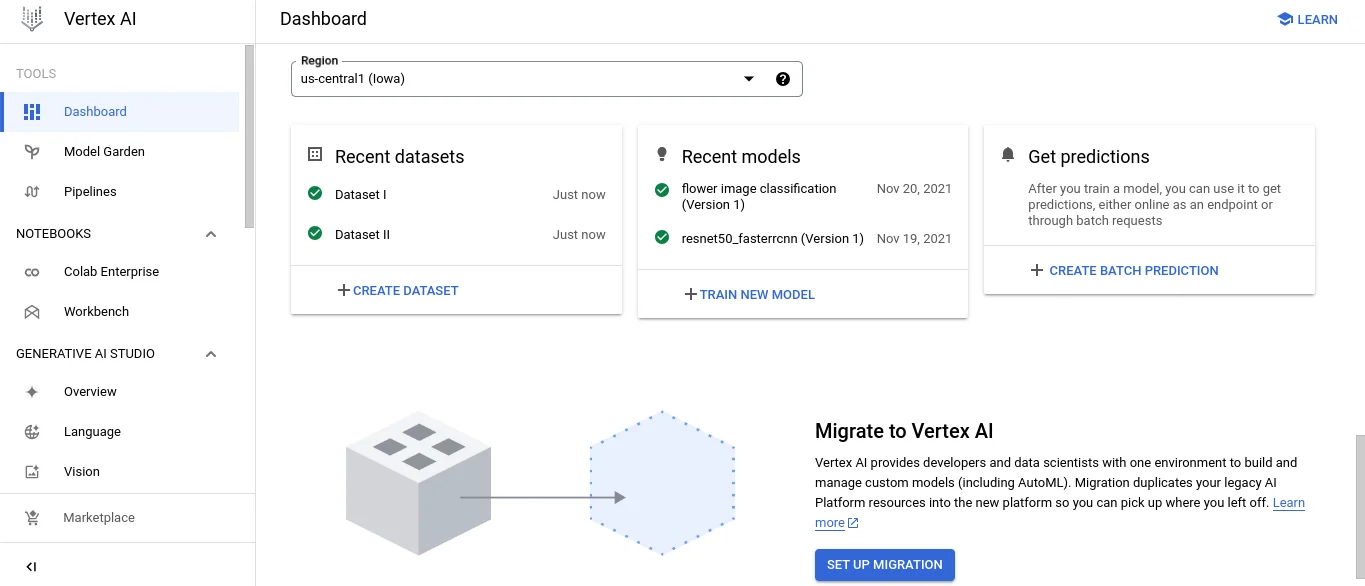

1. Scroll down the Google VertexAI dashboard and click on the Create Dataset button.

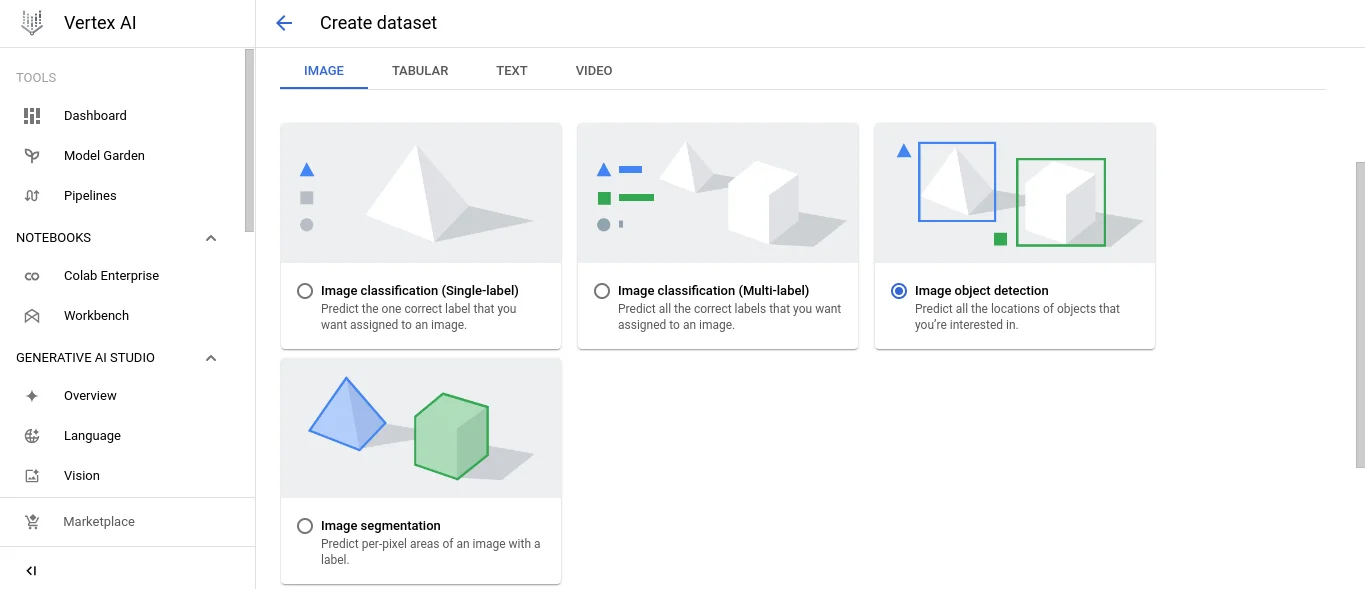

2. Name your dataset and choose Image Object Detection from the options available.

3. Select the Region from the drop-down and click on Create.

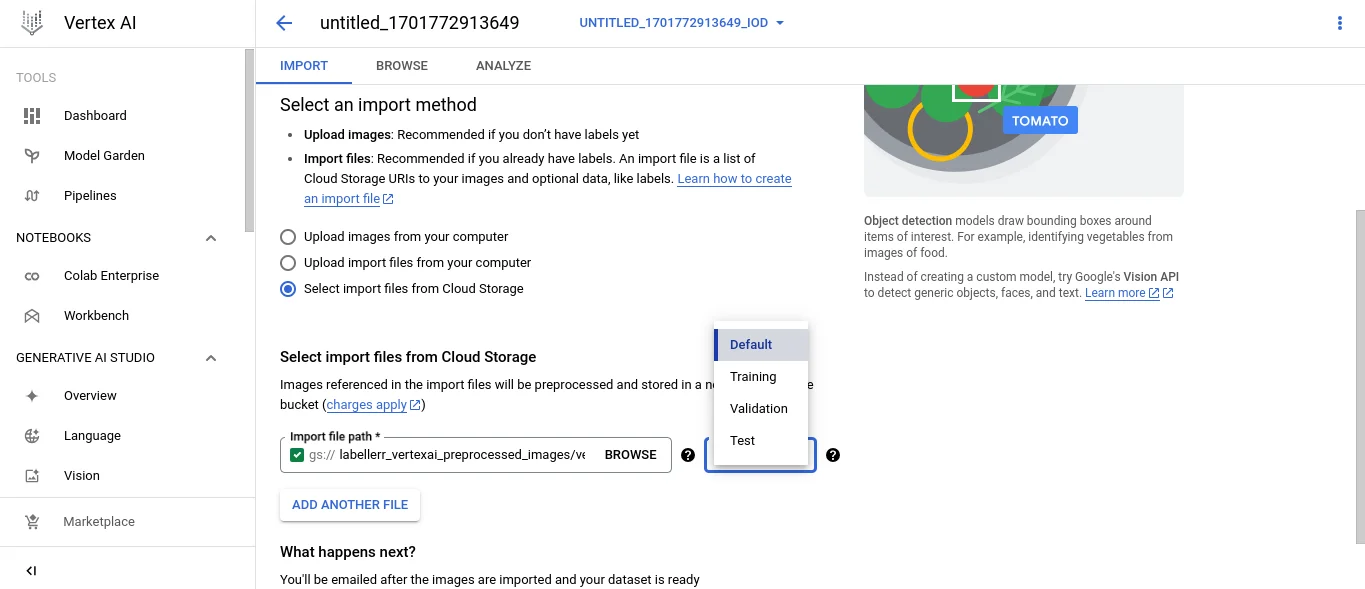

4. Choose how you want to import your files. For example, if you choose to import files from Cloud Storage, specify the Cloud Storage URI of the CSV file that contains the image locations and label data. Make sure the CSV file and the images are in the same bucket. Once done, choose the Default split.

5. Click on the Continue button to import the dataset. This might take a few minutes.

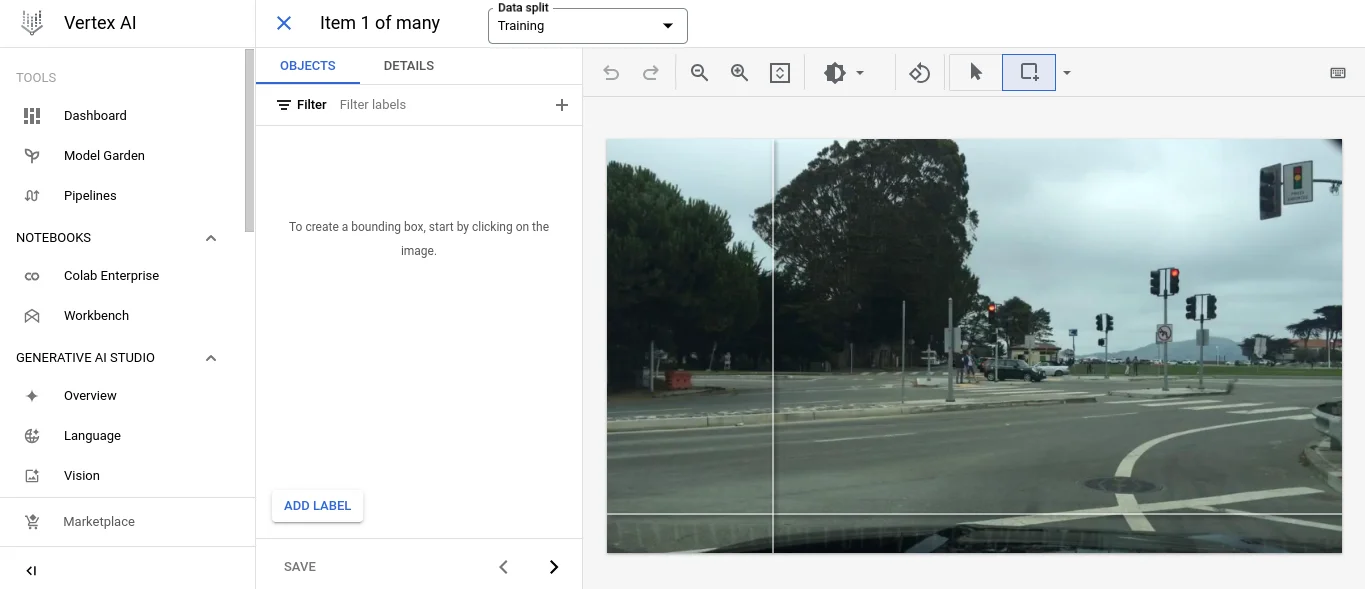

6. Once the data is imported, annotate the images manually if they are not already annotated, and update the data split if needed.

7. If the dataset has been imported and annotated correctly, click on Train New Model to start training.

8. Enter the model details and the training budget in node hours. Enable early stopping and select the checkbox for incremental training. Once the model has been trained, go to Evaluate to see the results.

9. Optional: After training, you can treat the existing model as a base model and train further models from it on a modified dataset. To do so, import new data into the same dataset, click on Train, select incremental training, and then choose the base model. Train the model as before.
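The import file mentioned in step 4 can be sketched as follows. This is a minimal example, assuming the Vertex AI CSV layout for object detection in which each row lists the image URI, a label, and a bounding box given as two corner vertices with coordinates normalized to the range 0 to 1 (the empty fields stand for the two unused vertices). The helper names, bucket path, and pixel values are hypothetical, and the ML-use column is left out because the steps above rely on the Default split. Check the current Vertex AI documentation before relying on the exact column layout.

```python
import csv
import io


def to_vertex_row(gcs_uri, label, box_px, image_size):
    """Build one CSV row for a single bounding box.

    box_px     -- (x_min, y_min, x_max, y_max) in pixels
    image_size -- (width, height) in pixels
    Assumed layout: uri,label,x_min,y_min,,,x_max,y_max,,
    """
    x_min, y_min, x_max, y_max = box_px
    width, height = image_size
    # Normalize pixel coordinates to the 0..1 range.
    normalized = (x_min / width, y_min / height, x_max / width, y_max / height)
    nx_min, ny_min, nx_max, ny_max = (f"{value:.4f}" for value in normalized)
    return [gcs_uri, label, nx_min, ny_min, "", "", nx_max, ny_max, "", ""]


def build_import_csv(rows):
    """Serialize rows to CSV text, one bounding box per line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical image: 400 x 500 pixels with one "cat" box.
row = to_vertex_row(
    "gs://my-bucket/images/cat.jpg", "cat", (100, 50, 300, 250), (400, 500)
)
csv_text = build_import_csv([row])
print(csv_text)
```

An image with several objects would simply contribute one row per bounding box, each repeating the same image URI.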

The Limitation: Manual Annotation in Google VertexAI

A significant bottleneck in Google VertexAI lies in the manual annotation of images. The existing labeling interface, while functional, lacks sophistication, resulting in a workflow that can be both laborious and time-consuming. This limitation becomes particularly pronounced when users aim for a high level of precision in intricate tasks such as Object Detection, or when they must annotate a large volume of images by hand, which is cumbersome and leads to annotator fatigue.

As the demands for accuracy and efficiency in machine learning workflows continue to rise, the need for an enhanced manual annotation interface becomes evident. Addressing this limitation would not only alleviate the current challenges faced by users but also contribute to a more seamless and productive image annotation process within the broader framework of Google VertexAI.

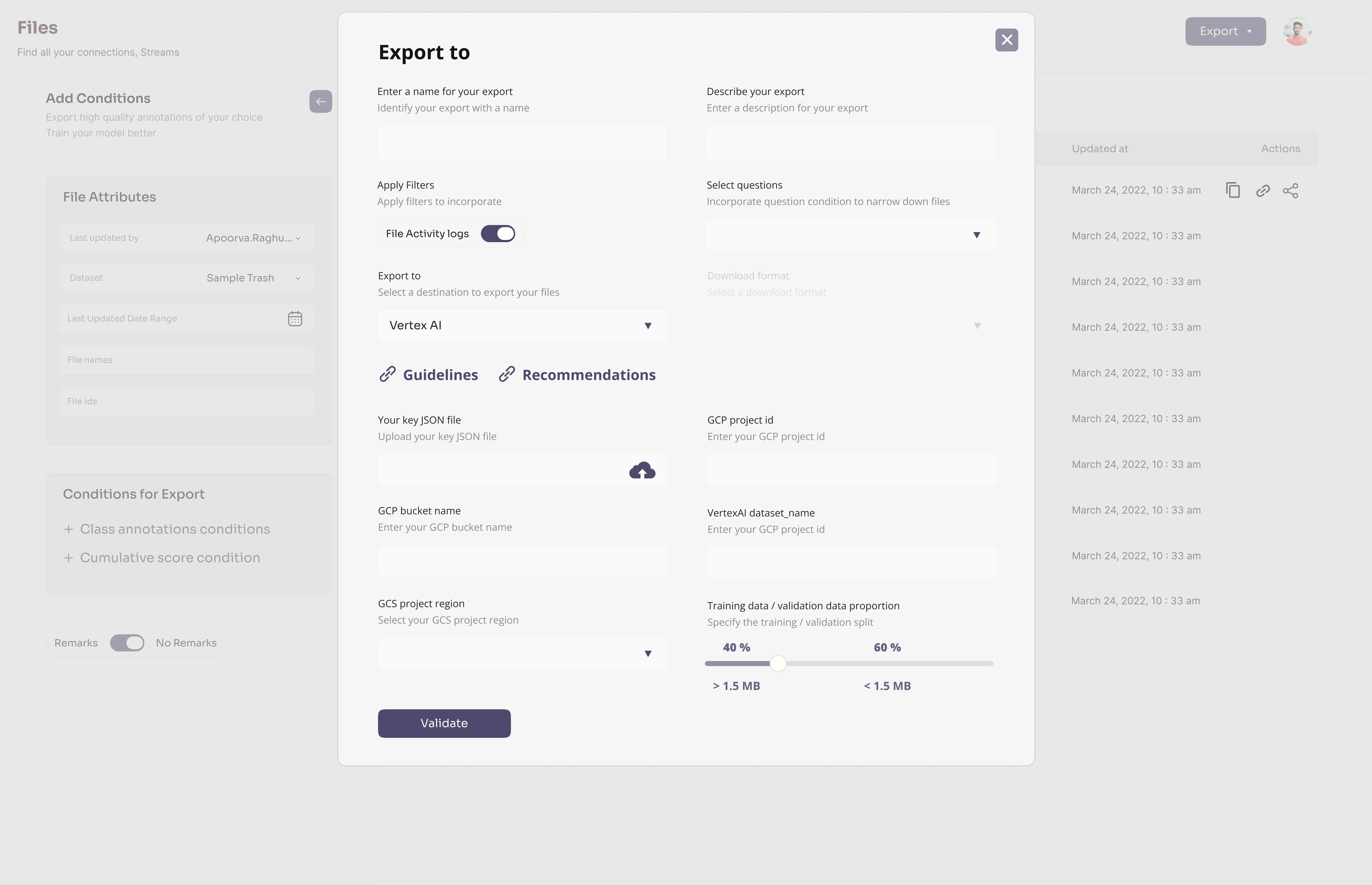

Labellerr's Innovative Solution: Export to Google VertexAI

Acknowledging this limitation, Labellerr introduces a groundbreaking feature – "Export to Google VertexAI." This feature acts as a bridge between Labellerr's advanced annotation capabilities and Google VertexAI's robust machine learning environment.

Key Features of Labellerr's Export to Google VertexAI:

1. Seamless Data Transfer: Labellerr's feature allows users to export annotated images directly from Labellerr to Google VertexAI with just one click. This streamlines the data transfer process, eliminating the need for manual uploads and reducing the risk of errors.

2. Collaborative Annotation: Labellerr's legacy tool and LabelGPT, its automated solution, provide users with versatile annotation options. Whether annotations are performed manually, collaboratively, or through automation, Labellerr ensures a unified export process to VertexAI.

3. Hybrid Foundation Models: LabelGPT, Labellerr's state-of-the-art automated annotation tool, employs hybrid Foundation models, enabling zero-shot annotation features for various image types. This innovative approach enhances efficiency and accuracy in the annotation process.

4. Privacy Assurance: Labellerr prioritizes data privacy. By storing all annotated images in the user's Google Cloud Platform (GCP) account, Labellerr ensures complete confidentiality and control over the data.

How to Export Annotated Images

Labellerr's user-friendly interface makes the export process to Google VertexAI straightforward. Users can initiate the export with a single click, selecting the desired dataset and seamlessly integrating their annotated images into Google VertexAI's environment. Refer to this User Manual to learn more.

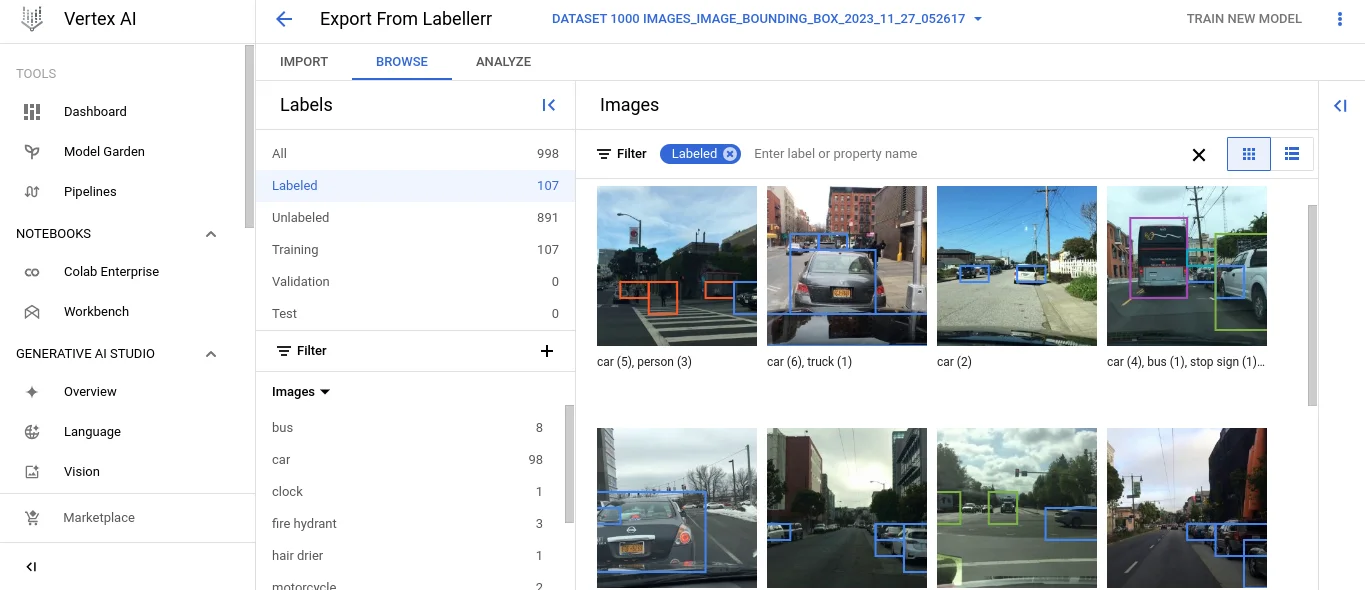

The Result in Google VertexAI

Upon successful export, users can see their annotated images within Google VertexAI, ready to be used for training and model development. Labellerr's feature ensures that all necessary validation checks are performed, guaranteeing the accuracy and reliability of the annotated data.
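To make the idea of validation checks concrete, here is a minimal sketch of the kind of checks that could run on bounding boxes before an export. It is not Labellerr's actual implementation, which the article does not describe. The function names, the dictionary keys, and the specific rules (non-empty label, coordinates normalized to the range 0 to 1, positive box area) are all assumptions made for illustration.

```python
def validate_box(box):
    """Return a list of problems for one box; an empty list means it passed.

    Assumed (hypothetical) box format: a dict with a "label" string and
    "x_min", "y_min", "x_max", "y_max" normalized to the 0..1 range.
    """
    problems = []
    if not box.get("label"):
        problems.append("missing label")

    coords = [box.get(key) for key in ("x_min", "y_min", "x_max", "y_max")]
    if any(not isinstance(value, (int, float)) for value in coords):
        problems.append("non-numeric coordinate")
        return problems  # The remaining checks need numeric values.

    x_min, y_min, x_max, y_max = coords
    if any(value < 0.0 or value > 1.0 for value in coords):
        problems.append("coordinate outside [0, 1]")
    if x_min >= x_max or y_min >= y_max:
        problems.append("box has zero or negative area")
    return problems


def split_valid(boxes):
    """Separate boxes that pass every check from those that fail any."""
    valid, rejected = [], []
    for box in boxes:
        problems = validate_box(box)
        if problems:
            rejected.append((box, problems))
        else:
            valid.append(box)
    return valid, rejected


good = {"label": "cat", "x_min": 0.1, "y_min": 0.2, "x_max": 0.5, "y_max": 0.9}
bad = {"label": "", "x_min": 0.6, "y_min": 0.2, "x_max": 0.5, "y_max": 1.2}
valid, rejected = split_valid([good, bad])
print(len(valid), "valid;", len(rejected), "rejected")
```

Collecting every problem per box, rather than stopping at the first one, lets an annotator fix a rejected item in a single pass.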

Conclusion

Labellerr's "Export to Google VertexAI" feature signifies a monumental leap in the domain of image annotation. By addressing the limitations of manual annotation in VertexAI, Labellerr establishes itself as an indispensable component of the data pipeline. This integration not only enhances efficiency but also contributes to elevating the quality and precision of machine learning models.

In a world where collaboration, automation, and privacy are paramount, Labellerr's innovative solution paves the way for a seamless and secure image annotation experience within the Google VertexAI ecosystem. The synergy between Labellerr and VertexAI promises to redefine the landscape of image annotation and machine learning.

Take the next step in advancing your machine learning initiatives. Explore the possibilities with Labellerr today and experience a revolution in image annotation. Your journey towards elevated efficiency, precision, and collaboration awaits – seize it now.

Book a demo with one of our product specialists.
