How RobustSAM Helps With Blurry/Degraded Image Segmentation

Table of Contents

- Introduction

- Introduction to RobustSAM

- Architecture of RobustSAM

- Results and Performance of RobustSAM

- Applications of RobustSAM

- Conclusion

- FAQ

Introduction

Image segmentation is a core task in computer vision. It involves partitioning an image into multiple segments or regions, typically to simplify the image's representation and make it easier to analyze.

Segmentation helps in identifying objects, boundaries, and various other elements within an image. This process is foundational for numerous applications including medical imaging, autonomous vehicles, surveillance, and image editing.

Overview of Image Segmentation

Image segmentation is the process of dividing an image into distinct regions, each representing a different object or part of an object.

The main goal is to assign a label to every pixel in the image such that pixels with the same label share certain visual characteristics. There are various techniques for image segmentation, ranging from classical methods like thresholding and edge detection to more advanced approaches using deep learning.
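As a minimal illustration of per-pixel labelling with a classical method, the sketch below applies a fixed global threshold to a tiny grayscale image stored as nested Python lists. The image values and the threshold of 100 are made up for the example. Practical pipelines usually choose the threshold automatically (for example with Otsu's method) or use a learned model instead.

```python
def threshold_segment(image, threshold):
    """Label each pixel 1 (foreground) if brighter than `threshold`, else 0 (background)."""
    return [[1 if px > threshold else 0 for px in row] for row in image]

# A tiny 4x4 grayscale image: a bright 2x2 object on a dark background.
image = [
    [10, 12, 11, 9],
    [10, 200, 210, 8],
    [12, 205, 198, 10],
    [9, 11, 10, 12],
]

mask = threshold_segment(image, threshold=100)
for row in mask:
    print(row)
# [0, 0, 0, 0]
# [0, 1, 1, 0]
# [0, 1, 1, 0]
# [0, 0, 0, 0]
```

Every pixel receives exactly one label, and pixels sharing a label share a visual property (here, brightness above the threshold).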

In computer vision, image segmentation is vital because it facilitates the understanding and interpretation of image content. For example, in medical imaging, segmentation helps delineate anatomical structures and identify pathological regions. In autonomous driving, it helps recognize and locate road signs, pedestrians, and other vehicles, supporting safe navigation.

Challenges with Degraded Images

Despite advances in segmentation techniques, segmenting degraded images remains a significant challenge. Degraded images can suffer from noise, blur, low resolution, and compression artifacts, caused by factors such as poor lighting, motion, low-quality sensors, or transmission errors.

- Noise: Random variations of brightness or color information in images can obscure important details and boundaries, making it hard to segment objects accurately.

- Blur: Motion blur or defocus blur can merge boundaries and make object edges ambiguous.

- Low Resolution: Limited pixel information in low-resolution images can lead to a loss of detail, making fine structures difficult to distinguish.

- Compression Artifacts: Compression, especially lossy compression, can introduce artifacts that distort the true appearance of objects.

These challenges make it difficult for traditional segmentation models to perform effectively, as they are often designed and trained on high-quality images. The performance of these models typically degrades when they encounter images with the aforementioned issues.
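The sketch below shows why a segmenter tuned on clean images can fail on degraded ones. It blurs a toy image containing two bright objects separated by a one-pixel gap, then segments both versions with the same fixed threshold. The 3x3 box blur, the image, and the threshold of 100 are illustrative assumptions, not part of any particular model. The clean-tuned threshold stands in for a model trained only on clean data.

```python
def threshold_segment(image, threshold):
    """Label each pixel 1 if brighter than `threshold`, else 0."""
    return [[1 if px > threshold else 0 for px in row] for row in image]

def box_blur(image, radius=1):
    """Replace each pixel by the mean of its (2r+1) x (2r+1) neighbourhood, clamping at edges."""
    h, w = len(image), len(image[0])
    blurred = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                      for dy in range(-radius, radius + 1)
                      for dx in range(-radius, radius + 1)]
            row.append(sum(window) / len(window))
        blurred.append(row)
    return blurred

# Two bright objects separated by a one-pixel dark gap (column 2).
image = [[255, 255, 0, 255, 255] for _ in range(4)]

clean_mask = threshold_segment(image, 100)
blurred_mask = threshold_segment(box_blur(image, radius=1), 100)

print(clean_mask[0])    # [1, 1, 0, 1, 1]  -> two separate objects
print(blurred_mask[0])  # [1, 1, 1, 1, 1]  -> the gap is gone, objects merge
```

With radius 1, each gap pixel averages in six bright neighbours (6 * 255 / 9 = 170). That value exceeds the threshold, so the two objects are merged into a single region.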

Introduction to RobustSAM

To address these challenges, RobustSAM (Robust Segment Anything Model) was developed. It builds on the Segment Anything Model (SAM) with the goal of segmenting objects reliably even when the input image is degraded.

RobustSAM adds modules to SAM that learn degradation-invariant features (described in the architecture section below). The aim is to maintain high segmentation accuracy across a wide range of image qualities.

RobustSAM handles each of these degradation types with a common strategy. During training, it sees degraded copies of clear images and learns features that match those of the clear originals. In practice this gives:

- Noise Resilience: Features extracted from noisy images are pushed toward those of the clean image, so noise has less influence on the predicted mask.

- Blur Handling: Blurred inputs are aligned with their sharp counterparts, so the model is better able to delineate object boundaries that blur would otherwise merge.

- Adaptability to Low Resolution: Low-resolution inputs are aligned with full-resolution ones, which helps keep segmentation accurate when fine detail is reduced.

- Artifact Mitigation: Compression artifacts are among the simulated training degradations, so they interfere less with the segmentation process.

Architecture of RobustSAM

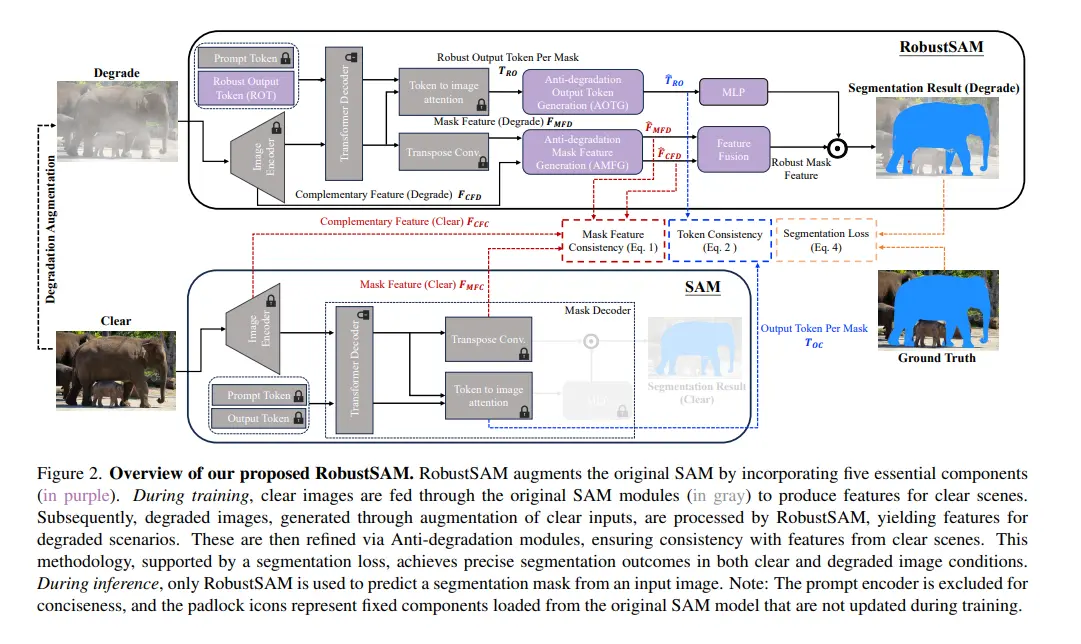

The architecture of RobustSAM is designed to enhance the Segment Anything Model (SAM) by incorporating specialized modules that address various types of image degradation. The figure illustrates how RobustSAM processes both clear and degraded images to produce robust segmentation results.

1. Degradation Augmentation:

During training, clear images are augmented to create degraded versions. These augmentations simulate various types of degradation, such as noise, blur, low resolution, and compression artifacts.
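The degradation augmentation step can be sketched as follows. This is a simplified, hypothetical stand-in. The function names, parameter values, and the four degradations are all assumptions for illustration:

- Gaussian noise
- Horizontal motion blur
- Nearest-neighbour downsampling
- Intensity quantization as a crude proxy for lossy compression

RobustSAM itself uses a richer set of synthetic degradations.

```python
import random

def _clip(value):
    """Keep intensities inside the valid 8-bit range [0, 255]."""
    return max(0.0, min(255.0, value))

def gaussian_noise(img, rng, sigma=20.0):
    """Add zero-mean Gaussian noise to every pixel."""
    return [[_clip(px + rng.gauss(0.0, sigma)) for px in row] for row in img]

def motion_blur(img, length=3):
    """Horizontal blur: average each pixel with the next length-1 pixels, repeating the last pixel at the edge."""
    w = len(img[0])
    return [[sum(row[min(x + k, w - 1)] for k in range(length)) / length
             for x in range(w)] for row in img]

def low_resolution(img, factor=2):
    """Keep every `factor`-th pixel, then upsample back with nearest-neighbour."""
    return [[img[(y // factor) * factor][(x // factor) * factor]
             for x in range(len(img[0]))] for y in range(len(img))]

def quantize(img, levels=4):
    """Crude proxy for lossy compression: snap intensities down to a few levels."""
    step = 256 / levels
    return [[(px // step) * step for px in row] for row in img]

def make_training_pair(clear_img, rng):
    """Return (clear, degraded, name) with one randomly chosen degradation applied."""
    degradations = {
        "noise": lambda im: gaussian_noise(im, rng),
        "motion_blur": motion_blur,
        "low_resolution": low_resolution,
        "compression_proxy": quantize,
    }
    name = rng.choice(sorted(degradations))
    return clear_img, degradations[name](clear_img), name

rng = random.Random(0)
clear = [[0, 64, 128, 255] for _ in range(4)]
clear_img, degraded_img, name = make_training_pair(clear, rng)
print(name, degraded_img[0])
```

Each call returns the untouched clear image together with one degraded copy. This is the kind of paired input that the consistency mechanisms described in the following steps compare.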

2. Image Encoder:

Both clear and degraded images are fed into an Image Encoder. This encoder is part of the original SAM and is used to extract preliminary features from the images.

3. Token Generation:

For clear images, the original SAM generates Prompt Tokens and Output Tokens, which are then processed to produce segmentation masks.

RobustSAM introduces the Robust Output Token (ROT), generated by the Anti-Degradation Output Token Generation (AOTG) module for degraded images.

4. Anti-Degradation Output Token Generation (AOTG):

The AOTG module processes the degraded image features to produce the Robust Output Token (ROT). This token is designed to maintain consistency with the clear image features, ensuring robust segmentation despite degradation.

This module includes a mechanism to compare features from clear and degraded images, enforcing token consistency.

5. Anti-Degradation Mask Feature Generation (AMFG):

The AMFG module generates degradation-invariant mask features. These features are aligned with those extracted from clear images, ensuring that the segmentation mask remains accurate even when the input image is degraded.

Mask Feature Consistency (MFC) and Token Consistency (TC) losses are applied to maintain this alignment.
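The two consistency losses can be sketched as distances between paired outputs:

- Mask Feature Consistency (MFC) compares the mask features produced for the degraded image with the mask features from the clear image.
- Token Consistency (TC) compares the Robust Output Token with the clear image's output token.

This sketch makes several assumptions. It uses mean squared error as the distance and flat Python lists in place of tensors. The segmentation-loss value and the weights `w_mfc` and `w_tc` are hypothetical placeholders. It is not the paper's exact formulation.

```python
def mse(a, b):
    """Mean squared error between two equally sized flat vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def consistency_objective(seg_loss, robust_mask_feat, clear_mask_feat,
                          robust_token, clear_token, w_mfc=1.0, w_tc=1.0):
    """Assumed form: total = segmentation loss + w_mfc * MFC + w_tc * TC."""
    mfc = mse(robust_mask_feat, clear_mask_feat)  # Mask Feature Consistency
    tc = mse(robust_token, clear_token)           # Token Consistency
    return seg_loss + w_mfc * mfc + w_tc * tc, mfc, tc

total, mfc, tc = consistency_objective(
    seg_loss=0.5,
    robust_mask_feat=[0.5, 1.0], clear_mask_feat=[0.0, 1.0],
    robust_token=[1.0, 2.0], clear_token=[1.0, 4.0],
)
print(mfc, tc, total)  # 0.125 2.0 2.625
```

Both consistency terms reach zero only when the degraded-image outputs match the clear-image outputs. This is the alignment the architecture relies on.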

6. Transformer Decoder:

Both clear and degraded image features pass through a Transformer Decoder, which further refines the features.

For degraded images, the Transformer Decoder is modified to work with the ROT and the robust mask features generated by the AMFG module.

7. Feature Fusion and MLP:

RobustSAM includes a Feature Fusion module and a Multi-Layer Perceptron (MLP) to combine the robust features and produce the final segmentation mask.

This combination ensures that the final output maintains high accuracy, even under degraded conditions.
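One way to picture this final step is the mask prediction used in the original SAM decoder. There, each pixel's mask logit is the dot product between a token embedding (produced by an MLP from an output token) and that pixel's upscaled feature vector. The sketch below follows that pattern, with several hypothetical simplifications, since the exact fusion operator is not specified here:

- Feature fusion is modelled as an element-wise sum.
- The feature values are toy numbers.
- The token is assumed to have already passed through the MLP.

```python
def fuse_features(robust_feat, decoder_feat):
    """Hypothetical fusion: element-wise sum of two H x W x C feature maps."""
    return [[[a + b for a, b in zip(pa, pb)] for pa, pb in zip(ra, rb)]
            for ra, rb in zip(robust_feat, decoder_feat)]

def mask_logits(token, features):
    """Per-pixel logit = dot(token, pixel feature vector)."""
    return [[sum(t * f for t, f in zip(token, pixel)) for pixel in row]
            for row in features]

def binarize(logits, threshold=0.0):
    """Foreground (1) where the logit exceeds the threshold."""
    return [[1 if v > threshold else 0 for v in row] for row in logits]

# Toy 1 x 2 image with 2 feature channels per pixel.
robust = [[[1.0, 0.0], [0.0, 1.0]]]
decoder = [[[0.5, 0.0], [0.0, -2.0]]]
token = [1.0, 1.0]  # assumed to be the MLP-transformed robust output token

fused = fuse_features(robust, decoder)  # [[[1.5, 0.0], [0.0, -1.0]]]
logits = mask_logits(token, fused)      # [[1.5, -1.0]]
print(binarize(logits))                 # [[1, 0]]
```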

8. Segmentation Results:

RobustSAM produces robust segmentation results for degraded images, while the original SAM produces results for clear images.

The training process is designed so that the model learns to produce consistent and accurate segmentation masks across varying image qualities.

Results and Performance of RobustSAM

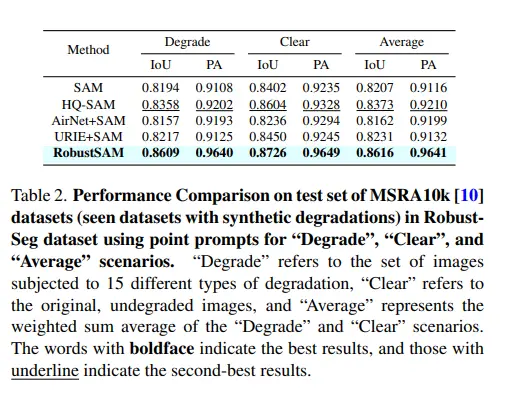

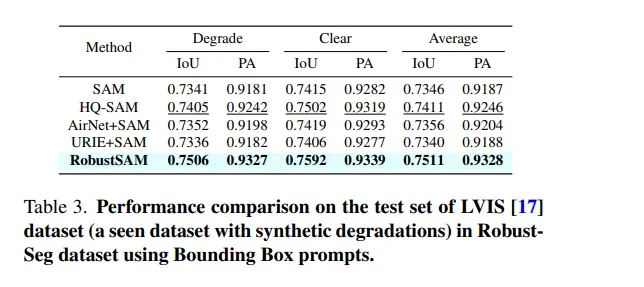

RobustSAM has been benchmarked against several state-of-the-art segmentation models. Key datasets used in the benchmarking include MSRA10K, LVIS, NDD20, STREETS, FSS-1000, COCO, BDD-100k, and LIS. Together they cover both synthetic and real-world degradations.
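Segmentation benchmarks like these are usually scored with mask-overlap metrics; the exact metrics used are shown in the result figures above. As an illustration, the sketch below computes two common ones, Intersection over Union (IoU) and the Dice coefficient, for a predicted binary mask against ground truth. Scoring two empty masks as 1.0 is a convention chosen for this sketch.

```python
def iou_and_dice(pred, target):
    """Return (IoU, Dice) for two binary masks given as nested lists of 0/1."""
    tp = fp = fn = 0
    for prow, trow in zip(pred, target):
        for p, t in zip(prow, trow):
            if p and t:
                tp += 1  # predicted foreground, truly foreground
            elif p:
                fp += 1  # predicted foreground, truly background
            elif t:
                fn += 1  # missed foreground
    union = tp + fp + fn
    iou = tp / union if union else 1.0
    dice = 2 * tp / (2 * tp + fp + fn) if union else 1.0
    return iou, dice

target = [[0, 1, 1, 0]]
pred = [[0, 1, 1, 1]]
iou, dice = iou_and_dice(pred, target)
print(round(iou, 3), round(dice, 3))  # 0.667 0.8
```

Dice weights the overlap twice, so it is always at least as large as IoU for the same pair of masks.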

Performance on Seen Datasets

On the MSRA10K dataset, which includes images with 15 different types of synthetic degradations, RobustSAM significantly outperforms the compared models.

On the LVIS dataset, which also includes synthetic degradations, RobustSAM likewise outperforms the other models.

Zero-Shot Segmentation on Unseen Datasets

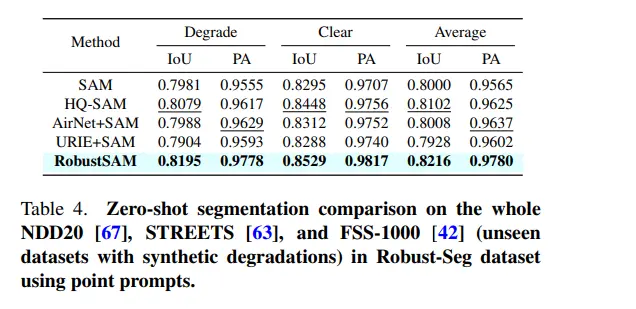

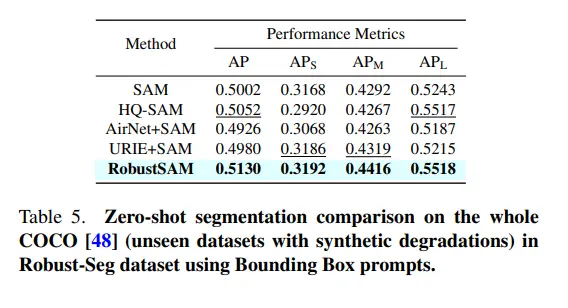

RobustSAM also performs strongly in zero-shot segmentation on datasets unseen during training, such as NDD20, STREETS, FSS-1000, and COCO, which are evaluated with synthetic degradations.

Tests on real-world degradations in the BDD-100k and LIS datasets further support RobustSAM's robustness.

These results highlight RobustSAM’s ability to handle a wide range of challenging scenarios and its superiority over other models in both synthetic and real-world degradations.

RobustSAM: Applications in the Real World

The ability to accurately segment objects in images, even when faced with degradation, opens doors to exciting applications across various fields. Here's how RobustSAM's strengths can translate into real-world benefits:

Medical Imaging:

- Improved Medical Diagnosis: In medical imaging modalities like X-rays, CT scans, or mammograms, noise and artifacts can be present due to limitations in imaging technology or patient factors. RobustSAM can potentially improve the segmentation of crucial structures like tumors or blood vessels, even in such degraded images. This could lead to earlier and more accurate diagnoses.

- Segmentation of Low-Dose Images: To minimize radiation exposure during medical scans, lower dose settings are often used. This can lead to increased noise in the images. RobustSAM's ability to handle noise can be valuable for segmenting anatomical structures in these low-dose scans, allowing for safer diagnostic procedures.

Surveillance:

- Object Detection in Challenging Conditions: Surveillance cameras often capture images with limitations like low light, fog, or rain. RobustSAM can potentially improve object detection and segmentation in such scenarios, allowing for better monitoring of public spaces or identification of suspicious activities.

- Traffic Monitoring with Degraded Data: Traffic monitoring systems often rely on cameras that can be affected by weather conditions or wear and tear. RobustSAM's ability to handle degraded images can be beneficial for tasks like vehicle segmentation and traffic flow analysis, even with less-than-ideal camera footage.

Autonomous Driving:

- Robust Perception in Adverse Conditions: Autonomous vehicles rely on accurate perception of their surroundings through sensors like cameras. However, rain, snow, or dust can significantly degrade image quality. RobustSAM can potentially improve the segmentation of objects like pedestrians, vehicles, and road lanes, even in such adverse conditions, leading to safer and more reliable autonomous driving.

- Handling Sensor Noise: Sensor readings in autonomous vehicles can be affected by noise. RobustSAM's ability to handle noise in images can potentially be extended to handle noise in sensor data, improving the overall perception capabilities of autonomous vehicles.

These are just a few examples, and the potential applications of RobustSAM can extend to any field where image segmentation is crucial but image quality might be compromised due to various factors. As research progresses, RobustSAM's capabilities can be further refined, leading to even broader real-world applications.

Conclusion

RobustSAM represents a significant advancement in the field of image segmentation, particularly in its ability to handle degraded images effectively. By augmenting the original Segment Anything Model (SAM) with innovative modules like the Anti-Degradation Output Token Generation (AOTG) and Anti-Degradation Mask Feature Generation (AMFG), RobustSAM addresses the challenges posed by noisy, blurred, and low-resolution images.

RobustSAM's ability to adapt to different types of degradation without significant computational overhead makes it a practical solution for real-world applications. The model's robustness also enhances downstream tasks like dehazing and deblurring, making it a versatile tool for comprehensive image analysis pipelines.

In conclusion, RobustSAM sets a new benchmark for segmentation models, combining robustness, adaptability, and high accuracy to tackle the challenges posed by degraded images.

Its innovative architecture and consistent performance across various evaluation metrics position it as a leading solution for image segmentation in challenging conditions, paving the way for further advancements in the field of computer vision.

FAQ

Q1: What is RobustSAM?

RobustSAM is an enhanced version of the Segment Anything Model (SAM) designed to perform image segmentation accurately even in the presence of degraded images. It incorporates advanced modules that address issues such as noise, blur, and low resolution.

Q2: How does RobustSAM handle degraded images?

RobustSAM employs specialized components like the Anti-Degradation Output Token Generation (AOTG) and Anti-Degradation Mask Feature Generation (AMFG) modules. These components generate robust features and tokens that ensure consistency and accuracy in segmentation despite image degradations.

Q3: What are the key architectural differences between SAM and RobustSAM?

The key differences include the addition of the AOTG and AMFG modules in RobustSAM. These modules help generate degradation-invariant features and robust output tokens. RobustSAM also incorporates mechanisms for maintaining token and mask feature consistency between clear and degraded images.
