Exploring the Game-Changing Potential of Mistral 7B

Table of Contents

- Introduction

- Unveiling Mistral 7B: A Compact Powerhouse

- Mistral 7B's Architecture: Unlocking the Marvels

- Elevating Benchmarks: Mistral 7B's Outstanding Performance Across Diverse Domains

- Is Mistral 7B Pioneering Responsible AI Usage?

- The Accessibility of Mistral 7B and Its Potential

- Conclusion

- Frequently Asked Questions

Introduction

In the ever-evolving realm of artificial intelligence, the emergence of Mistral 7B from Mistral AI is a true game-changer. This compact yet immensely powerful model, equipped with 7 billion parameters, is poised to reshape the landscape of AI.

In this blog post, we take a deep dive into Mistral 7B, covering its capabilities, its architecture, and its benchmark performance. Our aim is to shed light on the features that position Mistral 7B as a trailblazer and to show how much a compact model can achieve.

Unveiling Mistral 7B: A Compact Powerhouse

Mistral 7B stands as a groundbreaking AI model created by Mistral AI, designed to revolutionize the field of artificial intelligence. With a mere 7 billion parameters, it challenges the traditional notion that larger models are always superior. Mistral 7B is not just remarkable for its performance but also for its commitment to responsible AI usage.

One of its standout features is open access. Mistral 7B is released under the Apache 2.0 license, so it can be used without restrictions by a wide range of users, from educators to businesses, ushering in a new era of AI democratization.

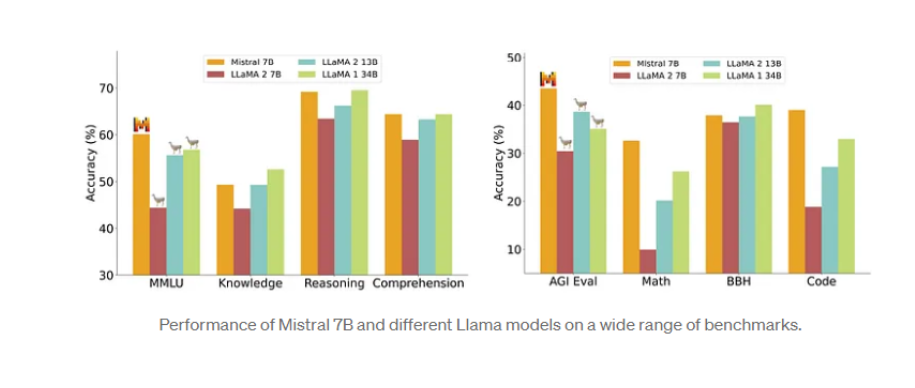

However, what truly sets Mistral 7B apart is its performance. Despite its relatively small size, it outperforms both Llama 2 7B and Llama 2 13B on the benchmarks Mistral AI evaluated, and it even beats the much larger Llama 1 34B on many of them, demonstrating the potential of compact AI models.

Mistral 7B's Architecture: Unlocking the Marvels

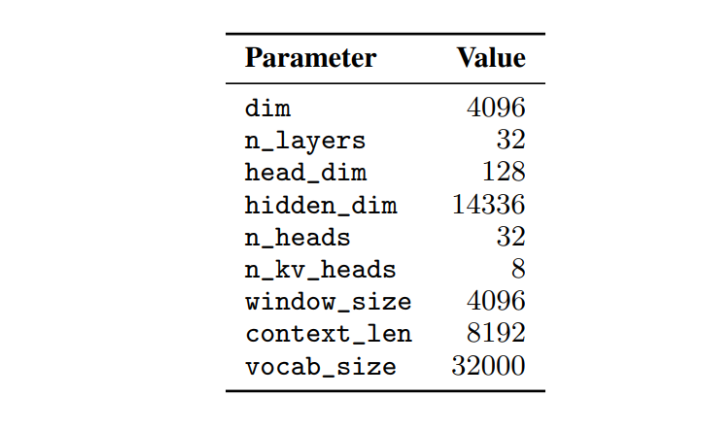

At the core of Mistral 7B lies a Transformer architecture, the foundation of modern language models. On top of it, Mistral 7B introduces several innovations that contribute to its performance. Compared with Llama, the main changes are:

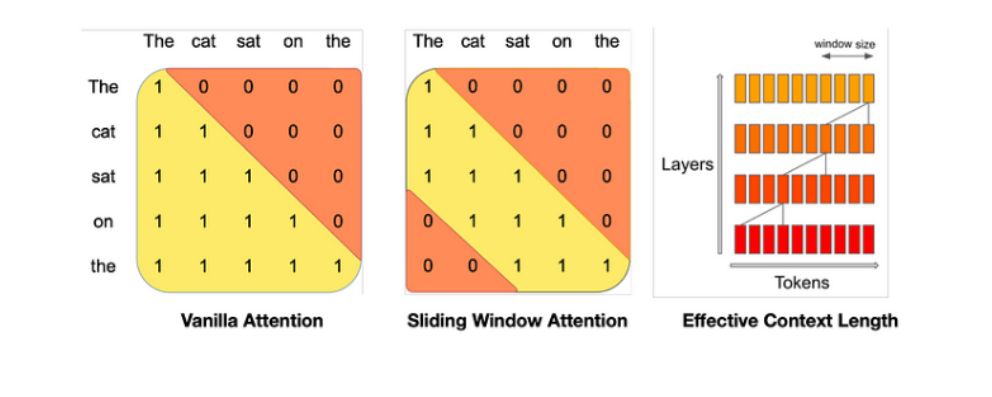
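For reference, the Mistral 7B paper reports these architecture hyperparameters: model dimension 4096, 32 layers, head dimension 128, feed-forward hidden dimension 14336, 32 query heads, 8 key/value heads, and a vocabulary of 32000 tokens. As a sanity check on the "7B" label, the sketch below turns them into a parameter count. It assumes a Llama-style layout (bias-free projections, a SwiGLU feed-forward with three weight matrices, two RMSNorm weight vectors per block, and separate input-embedding and output matrices); those layout details are our assumption, not something stated in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MistralConfig:
    # Hyperparameters as reported in the Mistral 7B paper.
    dim: int = 4096          # model (embedding) dimension
    n_layers: int = 32       # number of Transformer blocks
    head_dim: int = 128      # size of each attention head
    hidden_dim: int = 14336  # feed-forward inner dimension
    n_heads: int = 32        # query heads
    n_kv_heads: int = 8      # key/value heads, shared by groups of query heads
    vocab_size: int = 32000


def count_parameters(cfg: MistralConfig) -> int:
    """Approximate parameter count, ASSUMING a Llama-style block layout."""
    # Attention projections (no biases assumed): queries and output use all heads,
    # keys and values use only n_kv_heads.
    q = cfg.dim * cfg.n_heads * cfg.head_dim
    k = v = cfg.dim * cfg.n_kv_heads * cfg.head_dim
    o = cfg.n_heads * cfg.head_dim * cfg.dim
    attention = q + k + v + o
    # SwiGLU feed-forward: gate, up and down projections.
    feed_forward = 3 * cfg.dim * cfg.hidden_dim
    # Two RMSNorm weight vectors per block.
    norms = 2 * cfg.dim
    per_layer = attention + feed_forward + norms
    # Input embeddings + separate output projection, plus the final RMSNorm.
    embeddings = 2 * cfg.vocab_size * cfg.dim
    return cfg.n_layers * per_layer + embeddings + cfg.dim


total = count_parameters(MistralConfig())
print(f"{total:,} parameters (~{total / 1e9:.2f}B)")  # 7,241,732,096 parameters (~7.24B)
```

Under these assumptions the feed-forward layers hold most of the weights. Using 8 key/value heads instead of 32 also shrinks the key and value projections (and the per-token cache) to a quarter of their full multi-head size.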

Sliding Window Attention (SWA)

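Mistral 7B uses Sliding Window Attention (SWA): in each layer, a token attends only to a fixed window of the most recent tokens (W = 4096) in the layer below, rather than to the whole sequence. Because layers are stacked, information can still travel further back: after k layers it can move up to roughly W × k tokens. With W = 4096 and 32 layers, this gives a theoretical attention span of about 131K tokens.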
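To make the mechanism concrete, here is a toy sketch in plain Python (no real model involved). It builds a causal sliding-window mask and then tracks how far back information can travel through stacked layers. We assume the window includes the current token, so each layer reaches back W - 1 positions; other conventions shift this by one per layer, which is why the 131K figure is approximate. The function names are our own.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Causal sliding window: position i sees itself and the window - 1 positions
    before it, i.e. every j with i - window < j <= i.
    """
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


def earliest_reachable(seq_len: int, window: int, n_layers: int, query_pos: int) -> int:
    """Earliest input position whose information can reach query_pos after n_layers."""
    mask = sliding_window_mask(seq_len, window)
    reachable = {query_pos}
    for _ in range(n_layers):
        # Each layer lets a position read from every position its mask allows.
        reachable = {j for i in reachable for j in range(seq_len) if mask[i][j]}
    return min(reachable)


# Toy example: window of 3 over 6 tokens ("x" = allowed to attend).
for row in sliding_window_mask(6, 3):
    print("".join("x" if allowed else "." for allowed in row))

# Window of 4, three stacked layers, looking back from the last of 16 tokens.
print(earliest_reachable(16, 4, 3, 15))  # 15 - 3 * (4 - 1) = 6

# Back-of-the-envelope span for Mistral 7B: window x layers.
print(4096 * 32)  # 131072
```

A single layer only sees 4 positions here, yet three layers together let the last token draw on information from position 6 onward.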

Combined with changes Mistral AI made to FlashAttention and xFormers, SWA yields a 2x speed improvement over a vanilla attention baseline for a sequence length of 16K tokens with W = 4096. It lets Mistral 7B handle sequences of arbitrary length at a reduced inference cost.
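Part of the saving comes from simply doing less work. A rough way to see this is to count how many query-key scores each variant computes for a 16K-token sequence with W = 4096. This is only an operation count in plain Python, not a benchmark: measured speed also depends on the attention kernels and hardware, and we do not claim this count explains the reported 2x figure.

```python
from typing import Optional


def attention_pairs(seq_len: int, window: Optional[int] = None) -> int:
    """Count the (query, key) pairs scored by causal attention over seq_len tokens.

    window=None means vanilla causal attention (token i sees i + 1 keys);
    otherwise each token sees at most `window` keys.
    """
    if window is None:
        return seq_len * (seq_len + 1) // 2
    return sum(min(i + 1, window) for i in range(seq_len))


full = attention_pairs(16_384)
swa = attention_pairs(16_384, window=4_096)
print(full, swa, round(full / swa, 2))  # 134225920 58722304 2.29
```

The gap widens with longer sequences: the windowed cost grows linearly with sequence length, while full causal attention grows quadratically.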

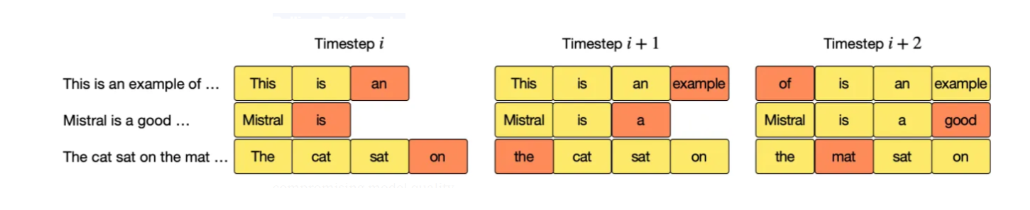

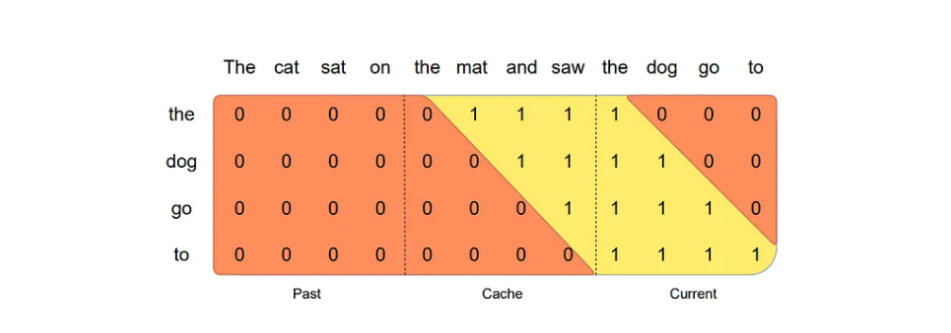

Rolling Buffer Cache

Because attention is limited to a fixed window, Mistral 7B can also use a Rolling Buffer Cache to save memory. The cache has a fixed size of W entries: the keys and values for position i are stored in slot i mod W, so once the sequence grows longer than W, the oldest entries are overwritten.

For a sequence of 32K tokens, this reduces cache memory usage by 8x (32,768 / 4,096 = 8) without affecting the model's quality.
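A minimal sketch of the indexing scheme, with strings standing in for real key and value tensors. Only the rule "position i goes to slot i mod W" reflects Mistral AI's description; the class and method names are hypothetical.

```python
class RollingBufferCache:
    """Fixed-size key/value cache for sliding-window attention.

    The entry for absolute position i is stored in slot i % window, so once more
    than `window` tokens have been seen, the oldest entry is overwritten.
    """

    def __init__(self, window: int) -> None:
        self.window = window
        self.keys = [None] * window
        self.values = [None] * window
        self.seen = 0  # total number of tokens appended so far

    def append(self, key, value) -> None:
        slot = self.seen % self.window
        self.keys[slot] = key
        self.values[slot] = value
        self.seen += 1

    def entries(self) -> list:
        """Return the cached (key, value) pairs from oldest to newest."""
        start = max(0, self.seen - self.window)
        return [
            (self.keys[pos % self.window], self.values[pos % self.window])
            for pos in range(start, self.seen)
        ]


cache = RollingBufferCache(window=4)
for t in range(10):
    cache.append(f"k{t}", f"v{t}")
print([k for k, _ in cache.entries()])  # ['k6', 'k7', 'k8', 'k9']
```

However many tokens are generated, the cache never holds more than `window` entries, which is where the 8x saving for 32K-token sequences comes from.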

Pre-fill and Chunking

During generation, Mistral 7B predicts tokens one at a time, because each token depends on the ones before it. The prompt, however, is known in advance, so the (k, v) cache can be pre-filled with it. For very long prompts, the prompt is split into chunks, using the window size as the chunk size, and the cache is filled chunk by chunk.

For each chunk, attention is computed over the cache and over the chunk itself, which keeps pre-filling efficient even for long prompts.
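The scheduling can be sketched in a few lines of plain Python. This only computes which positions each pre-fill step touches, using the window size as the chunk size; the function name is hypothetical and no model is involved.

```python
def prefill_plan(prompt_len: int, window: int) -> list[tuple[range, range]]:
    """Split a prompt into window-sized chunks for cache pre-filling.

    For each chunk, returns (chunk_positions, cached_positions): the positions
    processed in this step and the earlier positions still held in a rolling
    cache of size `window`. Attention for the chunk is computed over both,
    with the sliding-window mask applied inside that combined span.
    """
    plan = []
    for start in range(0, prompt_len, window):
        end = min(start + window, prompt_len)
        cached = range(max(0, start - window), start)
        plan.append((range(start, end), cached))
    return plan


for chunk, cached in prefill_plan(prompt_len=10, window=4):
    print(f"chunk {chunk.start}-{chunk.stop - 1}, cache {list(cached)}")
# chunk 0-3, cache []
# chunk 4-7, cache [0, 1, 2, 3]
# chunk 8-9, cache [4, 5, 6, 7]
```

Choosing the chunk size equal to the window means each step attends to at most two windows' worth of positions, no matter how long the full prompt is.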

Elevating Benchmarks: Mistral 7B's Outstanding Performance Across Diverse Domains

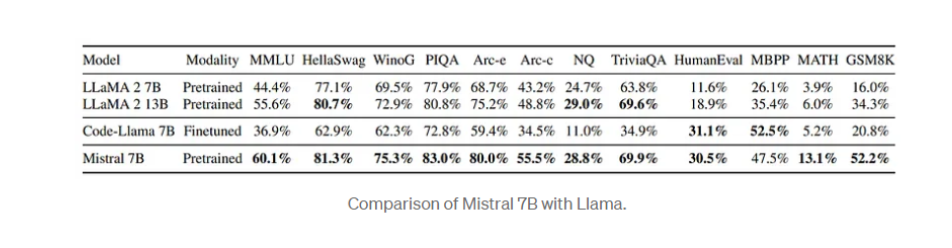

Mistral 7B's benchmark results are truly remarkable. In the realm of Large Language Models (LLMs), strong benchmark performance is paramount, and Mistral 7B outperforms well-established competitors, including the widely used Llama 2 13B, on every benchmark Mistral AI evaluated.

Mistral 7B also outperforms Llama 1 34B, a model nearly five times its size, on code, mathematics, and reasoning benchmarks.

This result has drawn considerable attention from developers and researchers.

It also approaches the performance of CodeLlama 7B on coding tasks while remaining good at English tasks.

This showcases its versatility and its potential to handle a wide array of code-related challenges effectively.

In essence, Mistral 7B's success isn't merely about numbers; it's about the intricacies of its architecture and its ability to consistently deliver top-tier performance in the areas that matter most to the AI community.

Delving into the Details: Mistral 7B's Excellence in Diverse Domains

Commonsense Reasoning

Mistral 7B emerges as a front-runner in commonsense reasoning. On 0-shot commonsense reasoning benchmarks such as HellaSwag, Winogrande, PIQA, and others, it consistently outperforms its competitors.

Its reasoning strength also carries over to mathematics and code generation, covered below.

World Knowledge

Mistral 7B's proficiency extends to world knowledge, evaluated 5-shot on benchmarks such as NaturalQuestions and TriviaQA. This is, however, the area where its small size shows most: Mistral AI notes that its limited parameter count restricts how much knowledge it can store.

Reading Comprehension

In reading comprehension, Mistral 7B excels. In 0-shot reading comprehension benchmarks like BoolQ and QuAC, it not only comprehends the context but also generates coherent responses, highlighting its natural language understanding capabilities.

Mathematics and Code Generation

Mistral 7B is equally adept at handling complex mathematical problems and code generation tasks. Math benchmarks like GSM8K and MATH showcase its mathematical acumen.

Additionally, on code generation benchmarks such as HumanEval and MBPP, Mistral 7B proves its ability to generate accurate, working code.

Instruction Following

Mistral 7B Instruct, a version fine-tuned for instruction following, stands out in both human and automated evaluations, surpassing the Llama 2 13B Chat model.

This means that it not only understands and interprets instructions effectively but also responds in a way that aligns with the desired outcome.

Mistral 7B's remarkable performance across these diverse domains underscores its ability to excel in a wide range of applications, making it a versatile and reliable choice for various AI tasks.

Is Mistral 7B Pioneering Responsible AI Usage?

Responsible AI usage is a paramount concern in today's world, and Mistral 7B has made it a cornerstone of its identity. Let's delve deeper into how Mistral 7B is setting a remarkable example in this crucial aspect.

Upholding Ethical AI

Mistral 7B places a strong emphasis on responsible AI usage, going beyond mere functionality to help keep generated content within ethical bounds. Mistral AI describes two mechanisms for this: a system prompt that enforces output constraints, and using the model itself for content moderation. These guardrails are applied through prompting; the Instruct model card states that the model has no built-in moderation mechanisms.

A system prompt acts as the guiding star for Mistral 7B. It directs the model to answer with care, respect, and truth, and to avoid harmful, unethical, prejudiced, or negative content. In a world where such content is a real concern, this system prompt helps keep Mistral 7B's responses aligned with ethical values.
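As a sketch of how this looks in practice: Mistral AI published the guardrail system prompt below, and Mistral 7B Instruct expects instructions wrapped in `[INST]` and `[/INST]` tags. How the two are combined here (prepending the system prompt to the user's message) is our assumption, since the v0.1 Instruct chat format has no dedicated system role; the function name is hypothetical.

```python
# Guardrail system prompt as published by Mistral AI for Mistral 7B Instruct.
GUARDRAIL_PROMPT = (
    "Always assist with care, respect, and truth. "
    "Respond with utmost utility yet securely. "
    "Avoid harmful, unethical, prejudiced, or negative content. "
    "Ensure replies promote fairness and positivity."
)


def build_instruct_prompt(user_message: str, system_prompt: str = GUARDRAIL_PROMPT) -> str:
    """Wrap a single user turn in Mistral's [INST] ... [/INST] instruction format.

    ASSUMPTION: the system prompt is simply prepended to the user turn. The <s>
    beginning-of-sequence token is left for the tokenizer to add.
    """
    return f"[INST] {system_prompt}\n\n{user_message} [/INST]"


print(build_instruct_prompt("How do I store passwords safely?"))
```

The resulting string is what gets tokenized and passed to the model; the model's answer follows the closing `[/INST]` tag.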

Declining Harmful Questions: With the system prompt in place, Mistral 7B declined to answer harmful questions in Mistral AI's tests with unsafe prompts, showing a steadfast commitment to responsible AI usage. This contributes to a safer online environment.

Content Moderation Excellence: Mistral 7B Instruct can also act as a content moderator. When prompted to classify a user prompt or a generated answer as acceptable or harmful (an approach Mistral AI calls self-reflection), it achieves high precision and recall. This makes it a valuable tool for applications such as social media content moderation and brand monitoring, where inappropriate or harmful content must be identified and managed.
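Precision and recall capture two different failure modes of such a classifier: precision is the share of flagged items that really were harmful, and recall is the share of harmful items that got flagged. The sketch below computes both from hypothetical labels; it does not reproduce Mistral AI's evaluation data.

```python
def precision_recall(y_true: list[str], y_pred: list[str], positive: str) -> tuple[float, float]:
    """Precision and recall of a classifier for the given positive label."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical labels: human annotation vs. what a moderator model predicted.
truth = ["harmful", "ok", "harmful", "ok", "harmful", "ok"]
preds = ["harmful", "ok", "ok", "ok", "harmful", "ok"]
print(precision_recall(truth, preds, positive="harmful"))  # (1.0, 0.6666666666666666)
```

Here every flagged item was truly harmful (precision 1.0), but one harmful item slipped through (recall 2/3). A good moderator needs both numbers to be high.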

In a world where AI is increasingly integrated into our daily lives, Mistral 7B's commitment to responsible AI usage sets a strong precedent. It showcases how AI models can be ethical, reliable, and contribute to a more positive digital ecosystem.

The Accessibility of Mistral 7B and Its Potential

Accessibility is a key factor in the adoption of AI models, and Mistral 7B has made significant strides in this regard. Let's explore how Mistral 7B is designed to be user-friendly and the potential it holds for the future.

Bridging the Accessibility Gap: Mistral 7B is breaking down barriers in AI accessibility. It is readily available through popular platforms like Ollama and Hugging Face. This means that individuals, businesses, and researchers have easy access to the power of Mistral 7B.

Whether you're an AI enthusiast or a professional looking to leverage its capabilities, Mistral 7B ensures that its benefits are within reach for a diverse user base.

Local Usage Made Simple: With tools such as Ollama, running Mistral 7B locally is a straightforward endeavor, so even users without extensive AI expertise can harness its potential. Asking questions, seeking recommendations, or engaging in conversational AI becomes as simple as typing a query.

This means that Mistral 7B can be a valuable resource for a wide array of applications, from natural language understanding to content generation.
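Ollama serves models over a local HTTP API (by default on port 11434). The sketch below, using only Python's standard library, sends one prompt to its `/api/generate` endpoint. It assumes Ollama is installed and running and that the `mistral` model tag, which in Ollama's library refers to Mistral 7B, has been pulled; the helper names are our own.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a non-streaming request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def ask(prompt: str) -> str:
    """Send the prompt to a locally running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With the server running and the model pulled (`ollama pull mistral`), `ask("Suggest three names for a hiking blog.")` returns the model's reply as a string. Setting `"stream": False` trades token-by-token output for a single, simpler JSON response.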

Seamless Integration: Hugging Face, a renowned platform for AI models, has made Mistral 7B readily available, offering a convenient approach to incorporating the model into your projects. Detailed documentation and support ensure that the integration process is smooth and hassle-free.

This accessibility can catalyze innovation in a variety of fields, including chatbots, recommendation systems, and content generation.
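A minimal sketch of that integration using the `transformers` library. The model ID `mistralai/Mistral-7B-Instruct-v0.1` is the original instruction-tuned release (newer revisions exist under other IDs), and the helper names are our own. It assumes `transformers`, `torch`, and `accelerate` are installed and that you have enough memory for a 7B model; depending on the repository's current settings, you may also need to accept the model's terms on the Hub and log in.

```python
MODEL_ID = "mistralai/Mistral-7B-Instruct-v0.1"


def build_messages(question: str) -> list[dict]:
    """Chat history in the role/content format used by Hugging Face chat templates."""
    return [{"role": "user", "content": question}]


def generate(question: str, max_new_tokens: int = 200) -> str:
    """Load Mistral 7B Instruct from the Hugging Face Hub and answer one question."""
    # Imported lazily so the helper above works without these heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype="auto", device_map="auto")

    # The tokenizer's chat template turns the messages into the model's [INST] format.
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    # Decode only the newly generated tokens, not the echoed prompt.
    prompt_len = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_len:], skip_special_tokens=True)
```

Calling `generate("Explain sliding window attention in one sentence.")` downloads on the order of 15 GB of weights on first use. Relying on `apply_chat_template` instead of hand-building the prompt keeps the formatting in sync with whatever template ships with the model.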

Empowering the Future: Mistral 7B's accessibility not only benefits the present but also lays the groundwork for a future where AI is more integrated into everyday life. As more users, including developers, researchers, and businesses, get their hands on Mistral 7B, it's likely that we'll see a surge in creative applications and use cases that we can't even envision yet.

This democratization of AI models paves the way for groundbreaking advancements in technology and a more inclusive approach to AI research and development.

Mistral 7B is not just an AI model; it's a catalyst for a more accessible and innovative AI landscape. Its availability through user-friendly platforms and its potential to drive future developments underscore its importance in the world of artificial intelligence.

Conclusion

Mistral 7B stands as a groundbreaking AI model that defies the conventional wisdom that bigger is always better. Its exceptional performance, innovative architecture, and commitment to responsible AI usage make it a standout choice for various applications.

With a strong emphasis on accessibility, Mistral 7B is set to transform the AI landscape and empower individuals, businesses, and researchers to harness the full potential of AI in a more inclusive and responsible manner. The future of AI has arrived, and it's called Mistral 7B.

Frequently Asked Questions

1. What is Mistral 7B?

Mistral 7B is a language model from the Mistral AI team, known for its exceptional power relative to its size. Notably, it approaches the performance of CodeLlama 7B on coding tasks while remaining good at English tasks.

One of its key innovations is the implementation of Sliding Window Attention (SWA), a technique designed to efficiently process longer sequences at a reduced computational cost.

In essence, Mistral 7B is an efficient general-purpose language model that handles code well and processes long input sequences at reduced cost.

2. Is Mistral 7B better than Llama 2?

On the benchmarks Mistral AI reported, yes: Mistral 7B outperforms Llama 2 13B on all of them. It is also easy to fine-tune; as an illustration, Mistral 7B Instruct, fine-tuned for chat, surpasses Llama 2 13B Chat.

The Mistral team conducted a thorough comparison, re-evaluating all models, including different Llama variants, to ensure a fair and comprehensive assessment. Across a diverse set of benchmarks, Mistral 7B demonstrates superior performance when compared to various Llama models, indicating its effectiveness and versatility in handling a wide range of tasks.

3. Is Mistral-7B-v0.1 a generative text model?

Yes, Mistral-7B-v0.1 is a pretrained generative text model with 7 billion parameters. This transformer model outperforms Llama 2 13B on all benchmarks Mistral AI tested.
