LLMs & Reasoning Models: How They Work and Are Trained!

LLMs reason by analyzing data, applying logic, and solving problems step by step. They learn this from structured datasets, fine-tuning, and reinforcement learning, and prompting techniques then draw that ability out.

I remember using one of the LLMs while studying for an aptitude test. I was stuck on a tricky math problem.

I fed it to the AI, and bam! It gave me an answer instantly. Great, I thought. I used that answer on a practice test... and got it wrong.

Confused, I tried again, phrasing the question slightly differently. Same wrong answer, no explanation.

It was like a black box – I prompted, a wrong answer came out, and I had no idea why.

Frustrated, I realized the only way to get the AI to do it right was to break the problem down myself, step by step, and feed it each small piece.

"Calculate this first," "Now use that result to find this..." But wait, I thought. If I have to figure out all the steps myself, why am I even using the AI?

It was supposed to save me time, but it was actually slowing me down! That defeated the whole point.

Then, things started to change. I recently tried a similar problem with a newer model, Google's Gemini 2.5 Pro. This time, it didn't just spit out a final number.

Instead, it laid out its plan: "Okay, first I need to figure out X, then I'll use that to calculate Y..." It showed me every single step of its thinking.

Interestingly, its first answer was still wrong!

But because it showed its work, I could immediately see where it messed up – a small calculation error in step 3.

I pointed it out.

The model acknowledged the mistake, corrected just that step, and reran the rest of its logic. This time, the final answer was perfect.

That experience really clicked for me. The big difference wasn't just getting the right answer eventually; it was the AI's ability to reason and show that reasoning.

Seeing the steps builds trust and makes the AI genuinely helpful, not just a magic (and often wrong) black box.

So, how did we get from those early frustrating models to AI that can show its work?

What does reasoning actually mean for these programs? How do they do it, and how are they trained? That's exactly what we're going to explore in this article.

What Does Reasoning Mean for an AI?

That whole experience showed me the big difference. The first AI I used was great at pattern matching.

It had seen millions of examples of text and could predict the next word really well, making it sound fluent. It could also retrieve facts it had memorized.

But when faced with a multi-step problem, it couldn't really think it through.

Gemini showed reasoning. For an AI, reasoning isn't about having feelings or consciousness like a person. It's about the ability to:

- Solve problems step-by-step: Like figuring out the first part of the math problem before moving to the second.

- Make logical connections (inference): Understanding that if A causes B, and B causes C, then A causes C.

- Follow rules (deduction): If all birds fly, and a robin is a bird, then a robin flies.

- Understand cause and effect: Figuring out why something might happen based on the information given.

It's like the AI is simulating a logical thought process, even though it works very differently from a human brain.

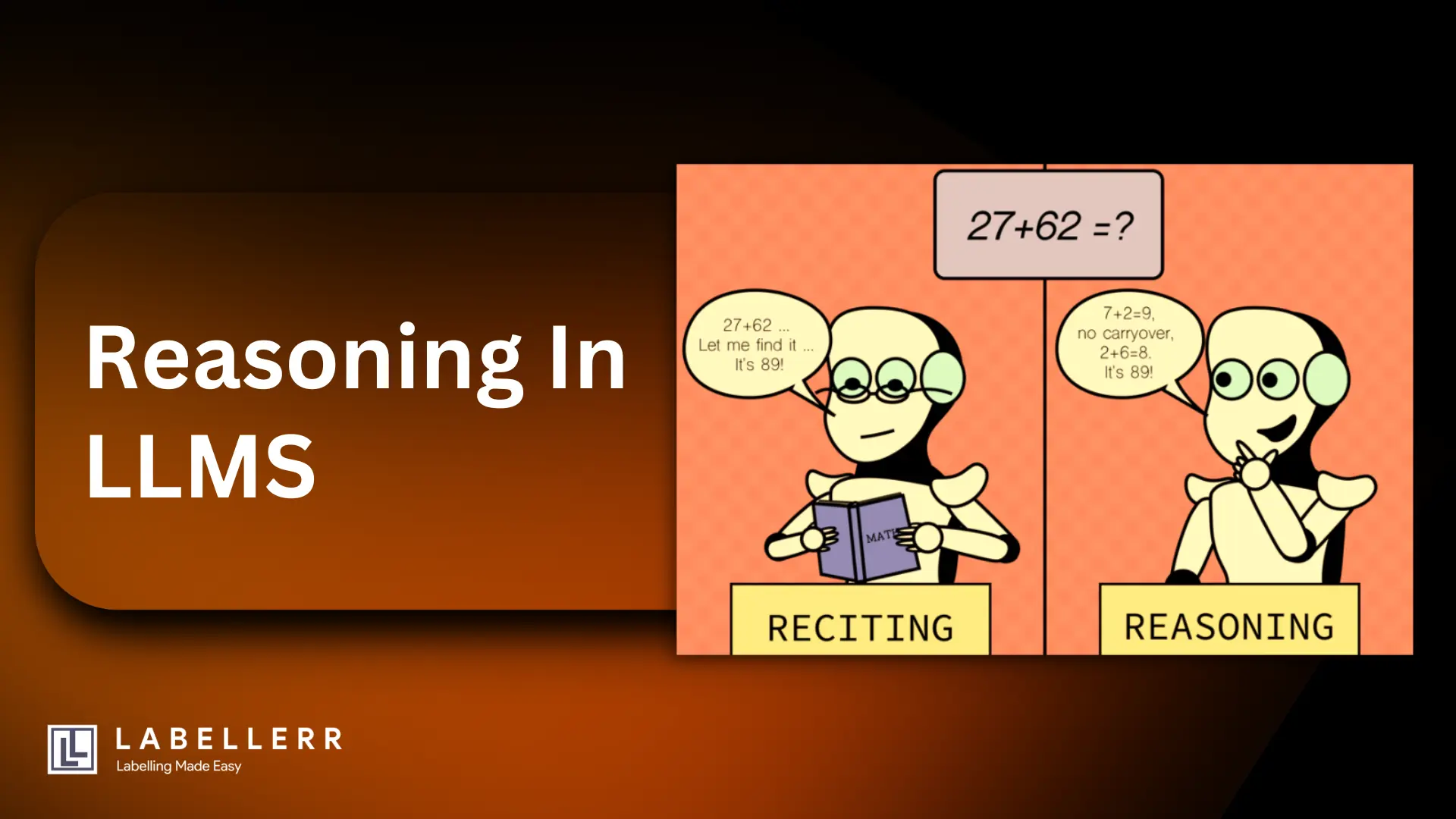

Thinking vs. Remembering

This is key: reasoning isn't just memorization or retrieval. The old AI could maybe pull up a formula (retrieval), but it couldn't apply it correctly across multiple steps.

Reasoning involves taking information, whether it's from the prompt or its own knowledge, and manipulating or transforming it to figure out something new. It's processing, not just recalling.

Different Kinds of Thinking Problems

Just like people use different kinds of thinking, LLMs need different kinds of reasoning for different tasks:

- Math Reasoning: Solving number problems, like that word problem I had.

- Logical Reasoning (Deductive): Following "if-then" rules strictly.

- Finding Patterns (Inductive): Looking at examples and guessing a general rule (though AI can sometimes struggle here).

- Best Guesses (Abductive): Figuring out the most likely cause when something happens (like a doctor diagnosing symptoms).

- Commonsense Reasoning: Understanding basic things about the world that people just know (like "water is wet" or "don't hold the hot end of the pan").

- Cause-and-Effect Reasoning: Linking actions to results.

A good reasoning AI needs to switch between these types depending on the problem.

Why Does This Matter So Much?

My experience showed why this is a game-changer. When LLMs can reason:

- They can tackle much more complex tasks – writing code, helping with scientific research, planning projects.

- They become more reliable. Because I could see Gemini's steps, I could trust its final answer more (or fix it easily).

- They overcome the "black box" problem, leading to better collaboration between humans and AI. We can guide them, and they can show us how they got there.

Okay, let's continue our story, exploring how these AIs actually manage to reason and how they learn it.

How Does an LLM "Show Its Work"?

So, if LLMs aren't really thinking like us, how did Gemini manage to break down my math problem step-by-step? It turns out developers use clever techniques to guide the AI.

How LLMs Read and Write

Under the hood, these AIs (based on the Transformer architecture) are powerful pattern-matching models.

They use a mechanism called "attention" to see which words in the input are most important for predicting the next word.

While this helps them understand relationships in text, they are still fundamentally built just to predict the next piece of text.
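To make "attention" a bit more concrete, here is a minimal sketch of the scaled dot-product attention calculation in plain Python. The tiny two-number vectors are made up for illustration (real models learn vectors with hundreds or thousands of dimensions), and the function names are my own, not taken from any library.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # How well does the query match each key?
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the value vectors according to those weights.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy vectors for three input words (made-up numbers).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 1.0]

weights, output = attention(query, keys, values)
```

The third word gets the largest weight because its key lines up best with the query, so its value contributes the most to the output.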

Reasoning isn't something they automatically know how to do; it has to be guided or taught.

This guiding often happens through In-Context Learning. The AI pays close attention to the instructions and examples you give it in the prompt. A well-written prompt can steer the AI towards generating a step-by-step answer.

Chain-of-Thought (CoT)

This was a major breakthrough. Developers realized that if you simply ask the AI to "think step-by-step" or "show your reasoning," it often does a much better job on complex problems. It's like telling a student, "Show your work!"

- How it helps: It forces the AI to break the problem into smaller, manageable pieces. Instead of jumping to a final (often wrong) answer, it generates the intermediate steps first. This sequential process often leads to a more accurate result.

- Ways to do it: Sometimes you just add the instruction "Think step-by-step" (Zero-Shot CoT). Other times, you give the AI an example problem with the reasoning steps already written out (Few-Shot CoT).
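Here is a rough sketch of what the two prompt styles can look like. The helper names and the exact template are my own assumptions; "Let's think step by step" is the phrasing commonly used for zero-shot CoT, and you would send the resulting string to whatever model API you use.

```python
def zero_shot_cot(question):
    """Zero-shot CoT: just append a step-by-step cue to the question."""
    return f"Q: {question}\nA: Let's think step by step."

def few_shot_cot(question, examples):
    """Few-shot CoT: show worked examples first, then ask the new question.

    examples is a list of (question, reasoning, answer) tuples.
    """
    parts = []
    for q, reasoning, answer in examples:
        parts.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.")
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)

# One hand-written worked example (illustrative only).
examples = [
    (
        "Tom has 3 boxes with 4 pens in each. How many pens does he have?",
        "Each box has 4 pens and there are 3 boxes, so 3 * 4 = 12.",
        "12",
    )
]

zero_shot_prompt = zero_shot_cot("A shelf holds 5 rows of 6 books. How many books?")
few_shot_prompt = few_shot_cot("A shelf holds 5 rows of 6 books. How many books?", examples)
```

The few-shot version costs more tokens, but it also shows the model the answer format you expect, which makes the final answer easier to pull out afterwards.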

Self-Consistency

A single reasoning path might still have errors (like in my Gemini example). Self-Consistency helps fix this.

- How it works: You run the same problem through the AI multiple times, perhaps slightly changing the prompt or just letting its randomness create different paths. You might get several different step-by-step reasoning chains.

- Getting the answer: You look at the final answers from all these attempts and pick the one that appears most often (majority voting).

- Why it helps: It makes the final answer less dependent on any single flawed step in one chain of thought.
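The voting step is simple enough to sketch in a few lines. In this example the "model" is a stand-in that replays canned reasoning chains, and the "The answer is N" format is an assumption about how the chains end; with a real model you would sample with some randomness (a temperature above zero) instead.

```python
import re
from collections import Counter

def extract_answer(chain):
    """Pull the final number out of a chain that ends with 'The answer is N'."""
    match = re.search(r"The answer is (-?\d+(?:\.\d+)?)", chain)
    return match.group(1) if match else None

def self_consistency(sample_chain, question, n_samples=5):
    """Sample several reasoning chains and majority-vote on the final answer."""
    answers = []
    for _ in range(n_samples):
        answer = extract_answer(sample_chain(question))
        if answer is not None:  # skip chains with no parseable answer
            answers.append(answer)
    if not answers:
        return None, {}
    counts = Counter(answers)
    return counts.most_common(1)[0][0], dict(counts)

def make_fake_sampler(chains):
    """Hypothetical stand-in for a model call: replays canned chains in order."""
    iterator = iter(chains)
    def sample(question):
        return next(iterator)
    return sample

canned_chains = [
    "3 boxes times 4 pens is 12. The answer is 12.",
    "3 plus 4 is 7, doubled is 14. The answer is 14.",
    "4 + 4 + 4 = 12. The answer is 12.",
    "I am not sure how to solve this.",
    "Each box has 4, so 3 * 4 = 12. The answer is 12.",
]

best, votes = self_consistency(make_fake_sampler(canned_chains), "How many pens?")
```

Here one chain goes wrong and one gives no answer at all, but the vote still lands on 12 because three of the chains agree.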

Tree of Thoughts (ToT)

Sometimes, a problem isn't a straight line. You might need to explore different possibilities. Tree of Thoughts helps here.

- How it works: Instead of just one step-by-step chain, the AI considers multiple possible next steps at each point, like branches on a tree. It tries to evaluate which branches look most promising and explores those further.

- When it's useful: This is good for planning, solving puzzles, or tasks where you might need to backtrack or try different approaches.
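The branching-and-pruning idea can be sketched with a toy puzzle: starting from a number, reach a target using only "+ 3" and "* 2". In a real Tree of Thoughts setup the model itself would propose the next steps and judge the branches; here `propose` and `score` are simple hand-written stand-ins, and the search strategy (keep the best few branches at each level) is one assumption among several possible ones.

```python
def propose(state):
    """Stand-in for the model proposing next steps from a partial solution."""
    value, steps = state
    return [
        (value + 3, steps + [f"{value} + 3 = {value + 3}"]),
        (value * 2, steps + [f"{value} * 2 = {value * 2}"]),
    ]

def score(state, target):
    """Stand-in for the model judging a branch: closer to the target is better."""
    return -abs(target - state[0])

def tree_of_thoughts(start, target, max_depth=4, beam_width=2):
    """Expand every kept branch, then keep only the most promising ones."""
    frontier = [(start, [])]
    for _ in range(max_depth):
        candidates = []
        for state in frontier:
            candidates.extend(propose(state))
        for state in candidates:
            if state[0] == target:
                return state[1]  # the list of steps that reached the target
        candidates.sort(key=lambda s: score(s, target), reverse=True)
        frontier = candidates[:beam_width]
    return None

path = tree_of_thoughts(2, 16)
narrow = tree_of_thoughts(2, 14, beam_width=2)
wide = tree_of_thoughts(2, 14, beam_width=4)
```

With a target of 14, keeping only two branches throws away the path through 7 too early and the search fails, while keeping four finds it. That is the trade-off in practice: exploring more branches costs more model calls but makes it less likely that a good idea gets pruned.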

Tools and Actions

Sometimes, pure text reasoning isn't enough.

1. ReAct Framework: This technique lets the AI mix Reasoning ("Thought") with Actions ("Act").

For example, the AI might think, "I need the current temperature," then perform the action of checking a weather website (using a tool or API), and then continue reasoning with that new information.

2. Program-Aided Language Models (PAL): For things like math, the AI can write a small piece of computer code (like Python) to do the calculation, then incorporate the code's result back into its reasoning. This greatly reduces calculation errors, because the arithmetic is done by an interpreter rather than predicted word by word.
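Both ideas boil down to a loop: the model writes a thought, asks for a tool, reads the result, and carries on. Below is a minimal sketch of that loop. The "Thought / Action / Observation / Final Answer" line format, the `react` function, and the scripted model are all assumptions for illustration, and the small calculator stands in for the code execution step (a real PAL setup would run a generated program in a sandbox).

```python
import ast
import operator

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculator(expression):
    """Tool: evaluate plain arithmetic by walking the syntax tree (no eval())."""
    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError("unsupported expression")
    return ev(ast.parse(expression, mode="eval").body)

def react(model, question, tools, max_turns=5):
    """Alternate model steps with tool calls until a final answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_turns):
        step = model(transcript)
        transcript += "\n" + step
        if step.startswith("Final Answer:"):
            return step[len("Final Answer:"):].strip(), transcript
        if step.startswith("Action:"):
            name, _, arg = step[len("Action:"):].strip().partition(" ")
            transcript += f"\nObservation: {tools[name](arg)}"
    return None, transcript

def scripted_model(transcript):
    """Hypothetical stand-in for an LLM: picks its next line from the transcript."""
    if "Observation:" in transcript:
        return "Final Answer: " + transcript.rsplit("Observation: ", 1)[1]
    if "Thought:" in transcript:
        return "Action: calculator 17 * 24"
    return "Thought: I should compute 17 * 24 with the calculator."

answer, transcript = react(scripted_model, "What is 17 * 24?", {"calculator": calculator})
```

The point of the design is that the multiplication is never "guessed" by the model: it is delegated to code, and the model only has to decide what to compute and what to do with the result.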

Other Ideas

Researchers are always finding new tricks:

1. Reflexion: Letting the AI review its own reasoning steps and try to correct mistakes.

2. Graph of Thoughts (GoT): Allowing even more complex connections between ideas than a simple tree.

3. Least-to-Most: Teaching the AI to solve easier sub-parts of a problem first before tackling the main goal.

All these techniques are ways developers cleverly guide the basic text-prediction ability of LLMs to produce outputs that look like, and often function as, logical reasoning.

How Do They Learn to Reason?

Okay, so we can guide LLMs with prompts, but how do they develop the underlying ability to follow those reasoning steps in the first place? It comes down to how they are trained.

Pre-training Data

It starts with the massive amount of text LLMs read during their initial pre-training. While they read everything, certain types of data are crucial for building a foundation for reasoning:

- Computer Code: Code is very logical and structured. Reading millions of lines of code helps the AI understand sequences, logic, and structure.

- Math and Science Texts: Textbooks, papers, and websites explaining math concepts or scientific processes often include step-by-step explanations.

- Educational Material: Anything designed to teach concepts often breaks things down logically.

- General Text: Even regular web text contains examples of arguments, explanations, and basic reasoning.

Fine-Tuning

After pre-training, developers fine-tune the models, giving them more specific lessons.

- Instruction Tuning: They train the AI on lots of examples where the instruction is something like, "Solve this math problem and explain your steps," and the desired output is a correct, step-by-step solution. This teaches the AI the format of reasoning.

- Training on Reasoning Problems: They use large sets of known reasoning problems (like math word problem datasets called GSM8K or MATH, or logic puzzle sets) as training examples, showing the AI both the problem and the correct reasoning path.
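To give a feel for what one of these training examples might look like, here is a small sketch. The field names and the "### Instruction" template are assumptions loosely modeled on common instruction-tuning layouts, not the format of any particular lab or dataset.

```python
# One hypothetical instruction-tuning record with a step-by-step target output.
record = {
    "instruction": "Solve this math problem and explain your steps.",
    "input": "A shirt costs $20 and is 25% off. What is the sale price?",
    "output": (
        "Step 1: 25% of 20 is 0.25 * 20 = 5.\n"
        "Step 2: 20 - 5 = 15.\n"
        "The answer is 15."
    ),
}

def to_training_pair(rec):
    """Split a record into the prompt the model sees and the text it should produce."""
    prompt = (
        f"### Instruction:\n{rec['instruction']}\n\n"
        f"### Input:\n{rec['input']}\n\n"
        "### Response:\n"
    )
    return prompt, rec["output"]

prompt, completion = to_training_pair(record)
```

During fine-tuning the model is shown the prompt and trained to produce the completion, so after many such pairs it picks up the habit of writing out the steps before the answer.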

Reinforcement Learning (RL)

This is like training a dog with treats, but much more complex.

- Reinforcement Learning from Human Feedback (RLHF): Humans look at different AI responses (including reasoning steps) and rank which ones are better. A separate "reward model" learns from these human rankings what constitutes good reasoning. Then, the main LLM is trained to act in ways that get a high score from this reward model.

- Process vs. Outcome Supervision (This is Important!):

  - Outcome Supervision: The AI only gets rewarded if the final answer is right. This is simpler but risky: the AI might learn flawed reasoning that sometimes luckily works.

  - Process Supervision: The AI gets rewarded for each correct step in its reasoning chain. This is much harder because every step needs to be checked, but it tends to be better at teaching the AI to reason reliably and logically. (OpenAI, for example, has published research favoring this approach.)
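The difference between the two kinds of supervision is easy to see with a toy example. The scoring functions below are deliberately simplified assumptions (real systems use a learned reward model, and averaging the step labels is just one way to combine them), but they show why a "lucky" chain looks fine under outcome supervision and bad under process supervision.

```python
def outcome_reward(final_answer, correct_answer):
    """Outcome supervision: only the final answer is checked."""
    return 1.0 if final_answer == correct_answer else 0.0

def process_reward(step_is_correct):
    """Process supervision: the fraction of steps a checker marked as correct."""
    if not step_is_correct:
        return 0.0
    return sum(step_is_correct) / len(step_is_correct)

# Problem: (6 + 4) / 2. The correct answer is 5.
lucky_chain = {
    "steps": ["6 + 4 = 11", "11 / 2 = 5"],  # two mistakes that cancel out
    "step_is_correct": [False, False],
    "final_answer": "5",
}
careful_chain = {
    "steps": ["6 + 4 = 10", "10 / 2 = 5"],
    "step_is_correct": [True, True],
    "final_answer": "5",
}

lucky_outcome = outcome_reward(lucky_chain["final_answer"], "5")
lucky_process = process_reward(lucky_chain["step_is_correct"])
careful_outcome = outcome_reward(careful_chain["final_answer"], "5")
careful_process = process_reward(careful_chain["step_is_correct"])
```

Both chains earn the full outcome reward, but only the careful one earns any process reward, so training on the process signal stops rewarding the flawed reasoning.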

Other Training Methods

- Self-Taught Reasoner (STaR): Some techniques involve letting the AI generate its own reasoning examples (even if initially flawed) and then using the correct ones to teach itself.

- Data Augmentation: Developers generate more diverse or complex reasoning problems to make the training more robust.
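The self-teaching idea can be sketched as a filter: let the model attempt each problem, and keep only the attempts whose final answer matches the known answer. The `generate` function here is a hypothetical stand-in that returns canned outputs, and the sketch leaves out the fine-tuning step itself as well as the published method's extra pass that retries failed problems with the answer given as a hint.

```python
def star_round(generate, problems):
    """Keep only the self-generated rationales that end in the known answer."""
    kept = []
    for question, gold_answer in problems:
        rationale, answer = generate(question)
        if answer == gold_answer:
            kept.append({"question": question, "rationale": rationale, "answer": answer})
    return kept  # these would become fine-tuning data for the next round

# Canned model outputs for illustration: one right, one wrong.
canned = {
    "What is 3 * 4?": ("3 groups of 4 is 4 + 4 + 4 = 12.", "12"),
    "What is 10 - 7?": ("10 minus 7 leaves 4.", "4"),
}

def fake_generate(question):
    """Hypothetical stand-in for sampling a rationale and answer from a model."""
    return canned[question]

problems = [("What is 3 * 4?", "12"), ("What is 10 - 7?", "3")]
training_examples = star_round(fake_generate, problems)
```

Only the first rationale survives the filter. Repeating this loop (generate, filter, fine-tune, generate again) is what lets the model gradually improve on its own outputs.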

Benchmarks

Developers constantly test their models against standard sets of reasoning problems (benchmarks) to see how well they perform and where they need improvement. This drives the training process.
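At its simplest, a benchmark run is a loop over questions with known answers. The sketch below uses exact-match scoring on three made-up items and a fake model that looks answers up in a dictionary; real benchmarks are far larger and often need more forgiving answer matching.

```python
def evaluate(model, benchmark):
    """Return exact-match accuracy and the questions the model got wrong."""
    correct = 0
    failures = []
    for item in benchmark:
        prediction = model(item["question"]).strip()
        if prediction == item["answer"]:
            correct += 1
        else:
            failures.append(item["question"])
    return correct / len(benchmark), failures

# Three made-up benchmark items.
benchmark = [
    {"question": "What is 7 + 5?", "answer": "12"},
    {"question": "What is 9 * 3?", "answer": "27"},
    {"question": "What is 100 / 4?", "answer": "25"},
]

# Hypothetical model outputs: the last one is wrong.
fake_outputs = {"What is 7 + 5?": "12", "What is 9 * 3?": " 27 ", "What is 100 / 4?": "20"}

def fake_model(question):
    return fake_outputs[question]

accuracy, failures = evaluate(fake_model, benchmark)
```

The accuracy number tells you how the model is doing overall, and the list of failures tells you where to look next, which is the part that feeds back into training.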

What's Next?

Researchers are exploring mixing LLMs with older symbolic logic systems or even having multiple AIs work together to solve problems during training.

So, training an LLM to reason involves giving it the right foundational knowledge through data, teaching it specific reasoning formats and problems, and using sophisticated feedback loops to reward logical, step-by-step "thinking."

Conclusion

So, back to my math problem adventure: The first AI failed because it mainly relied on pattern matching learned from basic text.

The second AI, Gemini, succeeded (after a small fix) because it could do more. Reasoning in LLMs means using techniques like Chain-of-Thought or Tree of Thoughts to simulate multi-step problem solving. These techniques are guided by clever prompting.

The AI learns this capability through extensive training, starting with reading vast amounts of logical text (like code and math) and then getting specific lessons using fine-tuning and reinforcement learning, ideally rewarding correct process, not just the final answer.

LLMs have made amazing progress in reasoning. They can solve complex problems that were impossible just a few years ago. However, as my experience showed, they are not perfect. Their reasoning can still be fragile, sometimes making silly mistakes or getting confused by unusual phrasing. It's still an active area of research.

Improving how LLMs reason – making it more reliable, more adaptable, and more transparent – is one of the biggest goals in AI right now. Getting better at this is key to unlocking the next wave of truly intelligent and helpful AI applications.

FAQs

What is reasoning in LLMs?

Reasoning in LLMs is the ability to analyze information, draw conclusions, and solve problems using logical steps rather than just predicting the next word.

How does reasoning work in LLMs?

LLMs use techniques like chain-of-thought prompting, retrieval-augmented generation, and fine-tuning on reasoning-heavy datasets to improve their logical capabilities.

How are LLMs trained for reasoning?

LLMs are pre-trained on vast datasets that include code, math, and step-by-step explanations, then fine-tuned on structured reasoning examples and refined with reinforcement learning that rewards correct reasoning steps.
