LLM-Powered Image Caption Generation - Challenges, and Applications

Table of Contents

- Introduction

- What is Image Captioning Powered by LLMs and How It Works?

- Applications of LLM-based Image Captioning

- Challenges and Considerations in LLM-based Image Captioning

- Labellerr's Multimodal LLM Feature

- Conclusion

- FAQS

Introduction

Image captioning, an intersection of computer vision and natural language processing, has been revolutionized by advancements in Large Language Models (LLMs).

Traditionally, image captioning systems relied heavily on convolutional neural networks (CNNs) for image feature extraction and recurrent neural networks (RNNs) for generating descriptive text.

However, the introduction of LLMs, such as OpenAI's GPT-3 and GPT-4, has significantly enhanced the ability to generate more accurate, coherent, and contextually rich captions.

These models leverage vast amounts of data to understand and generate language with a high level of fluency and nuance, enabling them to produce captions that are not only descriptive but also contextually appropriate and creative.

Data Labeling and annotating diverse datasets - researchers, and developers can enhance the understanding and performance of LLMs, enabling them to generate accurate captions, comprehend multimodal content, and extract valuable insights from complex data sources.

The integration of LLMs into image captioning systems offers numerous advantages. For instance, LLMs can capture and convey subtle details and relationships within images, providing a deeper level of understanding and description.

This capability is particularly beneficial in applications requiring high accuracy and detail, such as in medical imaging, autonomous vehicles, and digital content creation. Moreover, the adaptability of LLMs allows for continuous learning and improvement, ensuring that captioning systems remain state-of-the-art as they evolve.

As a result, LLM-powered image captioning is not just a technological advancement but a transformative tool, reshaping how we interpret and interact with visual information.

What is Image Captioning Powered by LLMs and How It Works?

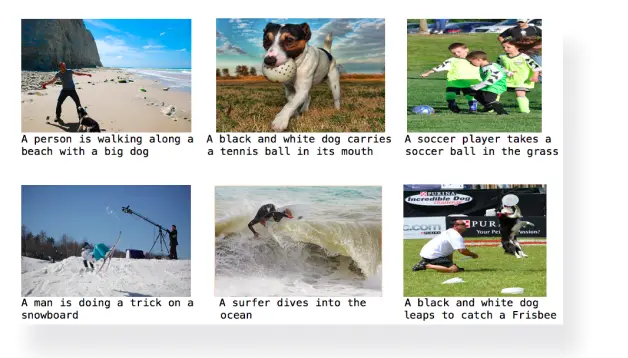

Image captioning is the process of generating textual descriptions for images. It combines computer vision and natural language processing (NLP) to interpret visual content and articulate it in a human-readable format.

When powered by Large Language Models (LLMs), image captioning leverages the extensive pretraining and language generation capabilities of these models to produce more coherent and contextually accurate descriptions.

Here’s a detailed look at how image captioning powered by LLMs works:

Components and Workflow

The LLM-based image captioning system typically comprises the following components:

1. Image Feature Extraction

The first step involves extracting meaningful features from the image. This is commonly achieved using Convolutional Neural Networks (CNNs) or more advanced models like Vision Transformers (ViTs).

CNNs: Models like ResNet, Inception, or VGG are pre-trained on large datasets like ImageNet. These networks process the image through multiple layers, capturing hierarchical features ranging from low-level edges to high-level object representations.

Vision Transformers (ViTs): ViTs split the image into patches and process these patches through transformer architectures, capturing relationships between patches and offering a robust representation of the image.

Output: A high-dimensional feature vector or a set of feature maps that represent the image.

2. Feature Encoding

The extracted image features are then encoded into a format suitable for the language model. This involves:

Flattening and Transformation: The feature maps are flattened into a vector. If using a transformer-based model, the features are embedded into tokens.

Attention Mechanisms: In some architectures, attention mechanisms are applied to focus on specific parts of the image that are more relevant to the captioning task.

Output: Encoded image features that can be fed into the language model.

3. Caption Generation Using LLM

Here, the encoded image features are input to a large language model, such as GPT-4, which generates the image captions. The process involves:

Input Preparation: The encoded image features are combined with textual tokens if the model expects a mixed input. This combination might involve concatenating the image vector with a start token.

Sequence Modeling: The LLM, which is typically based on transformer architecture, processes the input sequence. Transformers consist of layers of self-attention and feed-forward networks, allowing the model to capture dependencies between different parts of the input sequence (both image and text tokens).

Text Generation: The model generates text tokens sequentially. Each token is generated based on the previously generated tokens and the encoded image features. Beam search, greedy decoding, or other decoding strategies might be used to improve the quality of the generated text.

Output: A sequence of text tokens that form the caption.

Applications of LLM-based Image Captioning

Large Language Model (LLM)-based image captioning combines advanced natural language processing with computer vision to generate accurate and contextually relevant descriptions of images. This technology has wide-ranging applications across various domains. Here are five key applications:

1. Content Management and Search Optimization

Content Management:

- Digital Asset Management : Organizations use DAM systems to store, organize, and retrieve digital content. LLM-based image captioning automates the tagging process, ensuring that images are accurately described and easily searchable. This enhances the efficiency of managing large volumes of visual content.

- Metadata Generation: Automatic captioning generates rich metadata for images, which is crucial for indexing and categorizing content. This metadata can include details about objects, actions, settings, and even emotional tone, providing a comprehensive understanding of the image.

Search Optimization:

- Enhanced Searchability: By creating detailed and accurate descriptions, LLM-based captioning improves the precision and relevance of search results. Users can find specific images more quickly, even with complex search queries.

- SEO Benefits: For websites, especially those rich in visual content, image captions can improve search engine optimization (SEO). Well-described images can be indexed more effectively by search engines, driving organic traffic to the site.

2. Social Media and Content Creation

Social Media:

- Automated Captioning: Social media platforms can use LLM-based captioning to automatically generate engaging and relevant captions for user-uploaded images, enhancing the user experience and increasing engagement.

- Accessibility: By providing text descriptions for images, social media platforms become more accessible to visually impaired users, ensuring inclusivity and compliance with accessibility standards.

Content Creation:

- Content Personalization: Creators can use image captioning to generate personalized content at scale. For instance, travel bloggers can automatically generate descriptions for their photos, saving time and maintaining consistency.

- Storytelling Enhancement: Automated captions can serve as a foundation for more detailed narratives, helping creators to craft compelling stories around their visual content.

3. E-commerce and Product Descriptions

Product Descriptions:

- Automated Listings: E-commerce platforms can leverage LLM-based image captioning to automatically generate product descriptions from images. This streamlines the process of listing new products, reducing the time and effort required.

- Consistency and Accuracy: Automated captioning ensures that product descriptions are consistent and accurate, which is crucial for maintaining brand integrity and providing clear information to customers.

Customer Experience:

- Improved Search Functionality: Detailed image captions enhance the platform’s search capabilities, allowing customers to find products based on visual characteristics and descriptions.

- Personalized Recommendations: By analyzing the content of product images, e-commerce platforms can provide more accurate and personalized product recommendations to users.

4. Healthcare and Medical Imaging

Medical Imaging:

- Diagnosis Assistance: LLM-based image captioning can assist healthcare professionals by providing preliminary descriptions of medical images, such as X-rays, MRIs, and CT scans. These descriptions can highlight potential areas of concern for further review by a specialist.

- Documentation: Automatic captioning of medical images ensures that detailed records are kept, aiding in patient history documentation and case studies.

Accessibility:

- Training and Education: Medical students and professionals can benefit from annotated medical images, enhancing their learning and understanding of complex conditions and treatments.

- Patient Communication: Providing clear and understandable descriptions of medical images can help doctors communicate more effectively with patients, improving patient understanding and satisfaction.

5. Surveillance and Security

Surveillance:

- Automated Monitoring: LLM-based image captioning can be used in surveillance systems to automatically generate descriptions of monitored scenes. This can help in identifying unusual activities or security breaches in real time.

- Event Logging: Descriptions generated from surveillance footage can be logged to create detailed records of events, facilitating easier review and analysis.

Security:

- Intrusion Detection: Advanced captioning systems can detect and describe suspicious activities, aiding security personnel in responding quickly and effectively.

- Law Enforcement: Detailed descriptions from surveillance footage can assist law enforcement agencies in investigations, providing clear and concise reports that can be used as evidence.

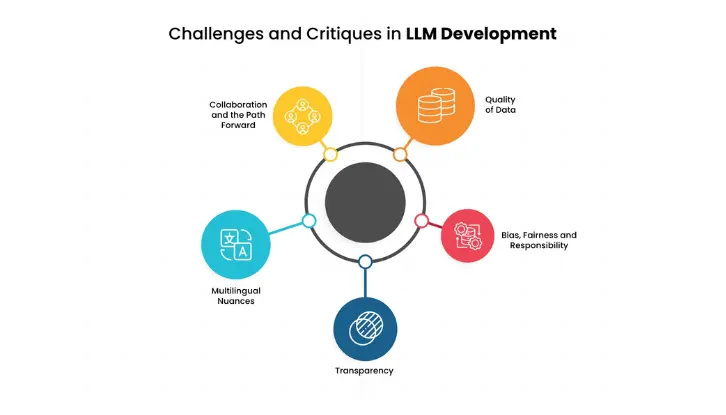

Challenges and Considerations in LLM-based Image Captioning

The implementation of Large Language Model (LLM)--based image captioning involves several significant challenges and considerations. These can affect the performance, fairness, and overall feasibility of the technology. Here are three key challenges:

1. LLM Biases and Caption Fairness

Biases in LLMs:

- Training Data Bias: LLMs are trained on large datasets that often contain biases reflecting societal prejudices. This can lead to biased image captions, which may perpetuate stereotypes or unfairly represent certain groups. For example, an image of a woman in a professional setting might be captioned in a manner that emphasizes her appearance rather than her professional role.

- Representation Bias: If the training data disproportionately represents certain groups or scenarios, the LLM may produce captions that reflect these imbalances. This can result in the underrepresentation or misrepresentation of minority groups and contribute to biased outcomes in applications such as social media or e-commerce.

- Fairness in Descriptions: Ensuring that captions are fair and unbiased is crucial, especially in contexts where descriptions might influence perceptions or decisions. For example, biased captions in surveillance could lead to unfair profiling.

- Mitigating Bias: Addressing bias requires diverse and balanced training datasets, along with continuous monitoring and adjustment of the model. Techniques such as bias correction algorithms and fairness audits can be employed to identify and mitigate biases.

2. Explainability and Interpretability of LLMs

Explainability:

- Black-box Nature: LLMs, particularly those using deep learning techniques, are often considered black-box models because their decision-making processes are not easily understandable. This lack of transparency can be problematic, especially in critical applications like healthcare or security.

- User Trust: For users to trust LLM-based systems, they need to understand how the models arrive at their conclusions. This is particularly important in fields where decisions based on image captions have significant consequences, such as medical diagnostics or legal investigations.

- Model Interpretation Tools: Efforts are being made to develop tools and techniques that can help interpret the decisions of LLMs. These include methods like attention visualization, which shows which parts of an image the model focused on when generating a caption.

- Regulatory Compliance: In some industries, regulatory standards require the explainability of AI systems. For instance, the General Data Protection Regulation (GDPR) in the European Union mandates that automated decision-making processes be explainable to users.

3. Computational Cost and Resource Requirements

Computational Cost:

- Training Costs: Training LLMs is computationally intensive and expensive. It requires significant processing power and large amounts of data, often necessitating the use of powerful GPUs or specialized hardware like TPUs (Tensor Processing Units). This can be a barrier for smaller organizations or those with limited resources.

- Energy Consumption: The energy consumption associated with training large models is substantial, raising concerns about the environmental impact. Sustainable AI practices are increasingly important as the demand for powerful AI systems grows.

- Infrastructure Needs: Deploying LLM-based image captioning systems at scale requires robust infrastructure, including high-performance servers and storage solutions to handle the data and computational load. This can be a significant investment.

- Operational Costs: Beyond initial training, maintaining and updating these models requires ongoing computational resources. Inference, the process of using the model to generate captions, also demands considerable computational power, particularly for real-time applications.

Labellerr's Multimodal LLM Feature

Labellerr, a leading data labeling and annotation platform, has just introduced a revolutionary feature that leverages the power of Large Language Models (LLMs). This innovative addition empowers users with AI-powered multimodal caption generation and question-answering capabilities.

What is the Multimodal LLM Feature?

Imagine a single platform that seamlessly handles various data types – images, videos, audio files, and even text documents. Labellerr's new feature integrates a powerful LLM that operates in the background, offering users a handful of functionalities:

Automatic Caption Generation: Upload any image, video, or audio file, and the LLM will generate captions that describe the content in detail. This can significantly improve workflow efficiency, especially when dealing with large datasets.

Multilingual Support: The LLM can potentially generate captions in multiple languages, catering to a global audience and broadening the reach of your content.

Text Analysis and Summarization: Upload text documents and receive summaries that capture the key points. This is a valuable tool for researchers, students, and anyone working with large amounts of textual data.

AI-powered Question Answering: Have a question about the content in your file? Ask away! The LLM can analyze the data and provide informative answers, fostering a deeper understanding of your content.

Benefits for Users:

This multimodal LLM feature offers a multitude of benefits for Labellerr users:

Enhanced Efficiency: Automatic caption generation and question answering significantly reduce manual work, saving valuable time and resources.

Improved Data Understanding: AI-powered insights help users gain a deeper grasp of the content within their data, leading to more informed decisions.

Accessibility and Inclusivity: Automatic caption generation in multiple languages promotes accessibility and caters to a wider audience.

Streamlined Workflows: The ability to handle various data types within a single platform simplifies workflows and eliminates the need for multiple tools.

The Future of Multimodal LLMs in Labellerr

Labellerr's innovative LLM feature represents a significant leap forward in data annotation and analysis. As LLM technology continues to evolve, we can expect even more advanced capabilities, such as:

Fine-tuning for Specific Domains: LLMs can be customized to understand the nuances of specific domains, like healthcare or finance, leading to more accurate and relevant insights.

Explainable AI Integration: Understanding how the LLM arrives at its answers will foster trust and transparency in the AI-generated insights.

With the launch of this feature, Labellerr empowers users to harness the power of AI for richer data understanding and streamlined workflows. The future of multimodal LLMs is bright, and Labellerr is at the forefront of this exciting revolution.

Conclusion

In conclusion, enhancing LLM-based image captioning involves a multifaceted approach that incorporates various advanced techniques.

By integrating these techniques into a unified framework, LLM-based image captioning systems can generate captions that closely resemble human-generated in terms of quality, relevance, and diversity.

As AI continues to advance, leveraging these methods will be pivotal in unlocking the full potential of image captioning technology, enabling applications across diverse domains, and driving innovation in computer vision and natural language processing.

FAQS

1) What is LLM-based image captioning, and how does it work?

LLM-based image captioning is a technique that leverages Large Language Models (LLMs) to generate descriptive captions for images. It combines visual feature extraction with natural language generation, where the model processes the image features and generates textual descriptions that describe the content of the image.

2) What are some methods to enhance LLM-based image captioning?

Some methods to enhance LLM-based image captioning include supervised fine-tuning, reinforcement learning with human feedback (RLHF), self-supervised learning, attention mechanisms, multi-modal fusion, adversarial training, diverse beam search, and knowledge distillation.

3) How does supervised fine-tuning improve LLM-based image captioning?

Supervised fine-tuning involves fine-tuning the parameters of a pre-trained LLM using labeled image-caption pairs. By learning from human-generated captions, the model can generate captions that are more aligned with human preferences and linguistic conventions.

Simplify Your Data Annotation Workflow With Proven Strategies

.png)