Google I/O 2024: What's New & Amazing

Every year, the tech world eagerly anticipates Google I/O, a marquee event hosted by Google. This developer conference, typically held in Mountain View, California, serves as a launchpad for Google's most groundbreaking announcements.

Table of Contents

- Google Keynote 2024: A Generative Leap Forward

- Google I/O 2024: A Year of Generative Power and Personalized AI

- Gemini 1.5: A Powerful and Efficient AI Model

- Project Astra: A Glimpse into the Future of AI Assistants

- Trillium: Ushering in a New Era of AI Performance at Google I/O 2024

- Unveiling the Magic: A Deep Dive into Imagen 3 at Google I/O 2024

- Unveiling the Magic of Moving Pictures: A Deep Dive into Veo at Google I/O 2024

- AI Overviews: A Pillar of the Generative Search Experience

- PaliGemma: Unveiling a Powerful Open-Source Vision Language Model

- Conclusion

- FAQ


It's a vibrant platform where the company unveils its latest creations, sheds light on upcoming features, and empowers developers with the tools to shape the technological landscape.

From advancements in Artificial Intelligence (AI) to updates on popular products like Android and Search, Google I/O provides a glimpse into the future, leaving attendees and the broader tech community abuzz with excitement.

Beyond the product reveals, Google I/O fosters a dynamic environment for learning and collaboration. Workshops, insightful talks by Google experts, and sessions brimming with best practices equip developers with the knowledge and skills to leverage these cutting-edge tools.

It's a melting pot of innovation, where developers meet, share ideas, and forge connections that fuel the creation of groundbreaking applications and experiences. Whether you're a seasoned developer or simply curious about the future of technology, Google I/O offers a wealth of knowledge and inspiration.

Google Keynote 2024: A Generative Leap Forward

Sundar Pichai, CEO of Google, took the stage at Google I/O 2024 and kicked off the event with a keynote address brimming with groundbreaking announcements.

The focus this year was undeniably Generative AI, with Google showcasing significant advancements that promise to reshape the way we interact with information and technology.


The centerpiece of the keynote was the unveiling of Gemini 1.5 Pro, the next generation of Google's powerful Generative AI model. This upgraded version boasts an enhanced understanding of information, drawing insights from a wider range of sources including emails, photos, and even videos.

Imagine asking a complex question like "Why won't this stay in place" and receiving not just an answer, but a comprehensive explanation with potential solutions – that's the power of Gemini 1.5 Pro.

Google also introduced Gemini 1.5 Flash, a lighter-weight version designed for speed and cost-efficiency, making AI capabilities more accessible.

The keynote wasn't solely dedicated to Search, however: a significant portion was devoted to unveiling Veo, a groundbreaking new generative video model.

Imagine creating high-quality videos based on simple text prompts, images, or even existing video footage. Veo's capabilities open doors for a new era of creative video expression.

Beyond these marquee announcements, the keynote also highlighted the arrival of the 6th generation of TPUs (Tensor Processing Units) – the workhorses that power Google's AI advancements.

This new iteration, christened Trillium, boasts a significant leap in computational performance, paving the way for even more groundbreaking research and development in the field of AI.

The spirit of the Google Keynote 2024 wasn't just about unveiling new technology, but about demonstrating its real-world applications. The address showcased how Gemini's integration with Search gives users AI-powered summaries for complex questions, all within seconds.

It delved into how Workspace would now leverage Gemini, allowing users to ask questions about emails and documents, or even compare bids from vendors with the help of AI.

The keynote concluded with a glimpse into the future of personalized AI.

Google introduced Gems, a novel feature within Gemini that allows users to create customized AI experts on specific subjects.

Imagine having your own AI personal trainer or an AI-powered fashion stylist. Gems open the door to a world of personalized AI assistants tailored to your unique needs.

Google I/O 2024: A Year of Generative Power and Personalized AI

The curtains closed on Google I/O 2024, leaving a trail of awe and excitement in their wake. This year's iteration proved to be a watershed moment, particularly in the realm of Generative AI.

Google unveiled groundbreaking advancements in this field, with the Gemini 1.5 Pro model taking center stage. This next-generation AI boasts a comprehensive understanding of information, culled from diverse sources like emails, photos, and videos. It empowers users with the ability to tackle intricate questions and receive insightful answers, propelling search experiences to new heights.

Gemini 1.5: A Powerful and Efficient AI Model

Google I/O 2024 introduced Gemini 1.5, the next generation of their powerful AI model. This iteration comes in two distinct flavors, each catering to specific needs: Gemini 1.5 Pro and Gemini 1.5 Flash.

Gemini 1.5 Pro

Superior Performance: This version is designed for strong performance across a wide range of tasks, from tackling complex questions to generating creative text formats and translating languages.

Extraordinary Context: One of the key strengths of 1.5 Pro is its extensive context window. In the public preview available on Google AI Studio and Vertex AI, it offers a 1 million token context window. This means the model can consider a vast amount of information when processing your requests: by Google's estimate, roughly 700,000 words, since a token is typically shorter than a word.

Imagine asking a question that requires understanding the nuances of a lengthy document – 1.5 Pro can handle it with ease. For developers who sign up for the waitlist, an even more impressive 2 million token context window is available, allowing for processing even more information and generating even more comprehensive responses.
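
To make these context-window figures more concrete, here is a minimal Python sketch that estimates whether a document of a given length would fit. The ratio of about 700 words per 1,000 tokens is an assumption based on Google's rough "700,000 words per 1 million tokens" figure; real token counts depend on the tokenizer and the language, and the function names are hypothetical.

```python
# Assumption: roughly 700 English words per 1,000 tokens (a rule of thumb,
# not an exact figure; actual counts depend on the tokenizer and the text).
WORDS_PER_1000_TOKENS = 700

# Context window sizes mentioned in the article.
CONTEXT_WINDOWS = {
    "public preview (1M tokens)": 1_000_000,
    "waitlist (2M tokens)": 2_000_000,
}


def estimate_tokens(word_count: int) -> int:
    """Estimate the token count for a text with `word_count` words (rounded up)."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-word_count * 1000 // WORDS_PER_1000_TOKENS)


def fits_in_window(word_count: int, window_tokens: int) -> bool:
    """Return True if the estimated token count fits in the given window."""
    return estimate_tokens(word_count) <= window_tokens


if __name__ == "__main__":
    for words in (100_000, 700_000, 1_000_000):
        for label, window in CONTEXT_WINDOWS.items():
            verdict = "fits" if fits_in_window(words, window) else "does not fit"
            print(f"{words:,} words {verdict} in the {label} window")
```

Under this assumption, a 1 million word document would not fit in the 1 million token window but would fit in the 2 million token one. For real workloads, count tokens with the model's own tokenizer rather than relying on an estimate like this.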

Gemini 1.5 Flash

Lightweight Powerhouse: While 1.5 Pro prioritizes comprehensive understanding, 1.5 Flash excels in speed and efficiency. This lightweight model is designed for applications where rapid response times are crucial.

It's the fastest Gemini model served via the API, making it ideal for tasks that require real-time interaction or integration into fast-paced workflows.

The Right Tool for the Job

The choice between 1.5 Pro and 1.5 Flash depends on your specific needs. If you require the absolute best performance and can handle slightly longer response times, 1.5 Pro is the way to go. If speed and real-time interaction are your top priorities, 1.5 Flash is the ideal choice.
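
The trade-off above can be sketched as a small selection helper. This is only an illustration: the `Workload` class and `choose_model` function are hypothetical, the model ID strings are assumptions about how the two variants are named in the Gemini API, and the tie-breaking rules are one possible reading of the guidance, not an official recommendation.

```python
from dataclasses import dataclass

# Assumed model identifiers; check the current API documentation before use.
PRO = "gemini-1.5-pro"
FLASH = "gemini-1.5-flash"


@dataclass
class Workload:
    """Hypothetical description of what an application needs from the model."""
    needs_low_latency: bool   # e.g. real-time chat or interactive tools
    needs_best_quality: bool  # e.g. complex questions over long documents


def choose_model(workload: Workload) -> str:
    """Pick a Gemini 1.5 variant following the article's rule of thumb."""
    if workload.needs_low_latency:
        # Speed and real-time interaction come first: use the lightweight model.
        return FLASH
    if workload.needs_best_quality:
        # Best results matter and slightly longer responses are acceptable.
        return PRO
    # Design choice in this sketch: default to the faster, cheaper model.
    return FLASH


if __name__ == "__main__":
    print(choose_model(Workload(needs_low_latency=True, needs_best_quality=False)))
    print(choose_model(Workload(needs_low_latency=False, needs_best_quality=True)))
```

In this sketch latency wins when both flags are set, because a slow answer is usually more visible to users of an interactive application than a slightly weaker one. Reverse the order of the checks if quality matters more for your use case.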

Project Astra: A Glimpse into the Future of AI Assistants

Project Astra was previewed at Google I/O 2024 through demonstrations during the conference and in follow-up blog posts. Here's a breakdown of the available information.


Project Astra is Google's vision for the next generation of AI assistants. It aims to move beyond the limitations of current voice-based assistants by incorporating sight and context awareness. Here's what we know so far:

Multimodal Input: Project Astra breaks away from the traditional voice-only interaction model. It can leverage both visual and audio information to understand your needs and provide more comprehensive assistance.

Imagine showing your phone a stain on your shirt and asking "How do I get this out?" Project Astra, by analyzing the image and your question, could provide tailored cleaning instructions.

Contextual Awareness: Project Astra goes beyond simply responding to your commands. It can understand the context of your situation to deliver more relevant assistance.

For instance, if you're looking at a recipe on your phone and ask "Where can I buy these ingredients?" Project Astra, considering your location and the recipe, could suggest nearby stores that carry the items you need.

Real-World Applications: The demonstrations showcased at Google I/O hinted at Project Astra's potential applications. One example involved using the camera to identify lost glasses and their location within your home.

Another scenario depicted using Project Astra for real-time code debugging, where the assistant could analyze code on your screen and offer solutions based on visual cues and spoken questions.

Limited Information: While details about Project Astra are intriguing, specific information on its release date and functionality remains scarce. It's likely still in the early stages of development, and Google hasn't provided a timeline for its public availability.

Trillium: Ushering in a New Era of AI Performance at Google I/O 2024

Google I/O 2024 wasn't just about advancements in Generative AI; it also unveiled a significant leap forward in the hardware that powers these models – the Trillium Tensor Processing Unit (TPU). Here's a detailed breakdown of what was announced about Trillium.

The 6th Generation of TPUs: A Quantum Leap in Performance

Trillium marks the arrival of the 6th generation of Google's custom-designed AI accelerators – TPUs. Compared to its predecessor, the TPU v5e, Trillium boasts a staggering:

4.7x Increase in Peak Compute Performance: This translates to significantly faster processing times for AI models, enabling researchers and developers to train complex models in a fraction of the time compared to previous generations.

Over 67% Improvement in Energy Efficiency: Trillium accomplishes this feat while delivering a substantial performance boost, making it a more sustainable option for large-scale AI development.
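
To get a feel for what these two figures mean, here is a small back-of-the-envelope sketch in Python. It assumes a job scales perfectly with peak compute and reads "67% more energy-efficient" as 67% more work per unit of energy. Both are simplifying assumptions: real workloads are also limited by memory, networking, and software, so treat the results as upper bounds. The function names are hypothetical.

```python
# Figures quoted in the article for Trillium versus TPU v5e.
PEAK_COMPUTE_SPEEDUP = 4.7     # 4.7x peak compute performance
ENERGY_EFFICIENCY_GAIN = 0.67  # "over 67%" more energy-efficient


def ideal_runtime_hours(baseline_hours: float) -> float:
    """Best-case runtime if the job scaled perfectly with peak compute."""
    return baseline_hours / PEAK_COMPUTE_SPEEDUP


def relative_energy_for_same_work() -> float:
    """Fraction of baseline energy needed for the same work,
    assuming 67% more work is done per unit of energy."""
    return 1.0 / (1.0 + ENERGY_EFFICIENCY_GAIN)


if __name__ == "__main__":
    baseline = 47.0  # hypothetical training job length in hours on TPU v5e
    print(f"Ideal runtime: {ideal_runtime_hours(baseline):.1f} hours")
    print(f"Energy for the same work: {relative_energy_for_same_work():.0%} of baseline")
```

Under these assumptions, a hypothetical 47-hour job would take about 10 hours in the best case, and the same amount of work would need roughly 60% of the energy.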

Powering the Future of AI Research and Development

The enhanced capabilities of Trillium will undoubtedly have a significant impact on the future of AI research and development. Here are some potential implications:

Faster Training of Complex AI Models: With Trillium's processing power, researchers and developers can train intricate AI models that were previously considered computationally infeasible. This opens doors to groundbreaking advancements in various fields such as natural language processing, computer vision, and robotics.

Exploration of Larger and More Powerful Models: The improved efficiency of Trillium allows for the creation of larger and more intricate AI models with a smaller environmental footprint. These models have the potential to achieve even higher levels of accuracy and performance on various tasks.

Democratization of AI Development: While Trillium will likely be primarily used in Google's data centers initially, its advancements could eventually trickle down to the broader AI community. This could lead to more accessible and powerful AI development tools in the future.

Beyond the Numbers: In addition to raw performance, Google emphasized Trillium's role in responsible AI development. The company is actively using Trillium to:

Develop More Secure AI Models: Trillium's capabilities are being leveraged to improve AI model robustness and identify potential security vulnerabilities before deployment.

Advance Fairness in AI: Google is utilizing Trillium to explore techniques for mitigating bias in AI models, ensuring they are fair and unbiased in their decision-making processes.

Unveiling the Magic: A Deep Dive into Imagen 3 at Google I/O 2024

Google I/O 2024 witnessed the much-anticipated unveiling of Imagen 3, Google's latest and most powerful image generation model to date. This announcement marked a significant leap forward in the realm of AI-powered image creation, promising unparalleled realism and detail. Here's a closer look at what was announced about Imagen 3.

Generating Breathtaking Images

Imagen 3 surpasses its predecessors in its ability to produce incredibly realistic images. It excels at:

Understanding Your Intent: This model has been fine-tuned to comprehend the nuances of your prompts. It goes beyond simply generating images based on keywords; it captures the underlying meaning and artistic vision you describe.

Incorporating Intricate Details: Gone are the days of generic AI-generated images. Imagen 3 incorporates even the most intricate details from your prompts, resulting in remarkably realistic and detailed creations. Imagine describing a fantastical landscape with bioluminescent flowers and cascading waterfalls – Imagen 3 can bring your vision to life.

Photorealism at its Finest: A major challenge with prior image generation models was the presence of visual artifacts that diminished the realism of the images. Imagen 3 tackles this issue head-on, producing images that are indistinguishable from real photographs in many cases.

Text Rendering Mastery: Another hurdle for AI image generation has been the proper rendering of text within images. Imagen 3 surpasses its predecessors in this area, generating clear and legible text that seamlessly blends into the image itself.

Availability and accessibility

While not immediately available to everyone, Imagen 3's rollout has begun in a few key ways:

Trusted Testers in ImageFX: A select group of users within Google's ImageFX platform were granted early access to Imagen 3 as Trusted Testers. This allowed Google to gather valuable feedback on the model's performance and user experience.

Waitlist for Public Access: Recognizing the excitement surrounding Imagen 3, Google opened a waitlist for public access. Users can sign up to be notified when the model becomes more widely available.

Vertex AI Integration (Summer 2024): A significant announcement for developers was the planned integration of Imagen 3 with Vertex AI in the summer of 2024. This empowers developers to leverage Imagen 3's capabilities within their own applications and projects.

A Note on Responsible AI

While Imagen 3's capabilities are impressive, Google emphasized its commitment to responsible AI development during the announcement. They are actively working on measures to:

Prevent Misuse of Imagen 3: Google is taking steps to prevent the generation of harmful or misleading content through the model.

Promote Transparency: They are committed to providing users with clear information about how Imagen 3 works and the limitations of the technology.

Unveiling the Magic of Moving Pictures: A Deep Dive into Veo at Google I/O 2024

Google I/O 2024 wasn't just about advancements in search or static images; it also witnessed the unveiling of Veo, a groundbreaking new model for generating high-quality videos based on text prompts. This announcement marked a significant leap forward in the realm of video creation, empowering users to bring their ideas to life with unprecedented ease. Here's a closer look at what was announced about Veo.

From Text to Breathtaking Videos

Veo shatters the limitations of traditional video creation by allowing you to generate high-definition videos based on simple text descriptions or prompts. Imagine conjuring up a concept for a marketing campaign or a music video and having Veo translate your vision into a compelling moving picture.

Key Features and Capabilities

1080p Resolution and Beyond a Minute Long: Veo isn't just about creating quick snippets; it boasts the ability to generate high-quality videos exceeding a minute in length, all in stunning 1080p resolution. This allows for the creation of longer narratives and more complex video projects.

A World of Cinematic Styles: Unleash your inner director! Veo offers a diverse range of cinematic and visual styles at your disposal. Want to create a dynamic action sequence or a whimsical animated explainer video? Veo provides the tools to bring your vision to life with various aesthetic options.

Early Collaborations Showcase Potential: Google didn't just announce Veo; it showcased its potential through collaborations with renowned filmmakers like Donald Glover. These early projects hint at the immense creative possibilities Veo unlocks for artists and storytellers.

Beyond the Initial Hype: Where Veo Might Take Us

Veo's introduction promises to revolutionize video creation across various domains, including:

Democratizing Video Content Creation: Veo empowers those without extensive video editing experience to craft high-quality videos. This opens doors for businesses, social media creators, and even educators to easily create engaging video content.

Enhanced Prototyping in Various Fields: Imagine architects using Veo to generate realistic video walkthroughs of building designs or product designers creating quick video prototypes to showcase their ideas. Veo's capabilities can streamline various creative and design workflows.

Personalizing the Video Experience: Veo's potential extends beyond professional applications. Imagine generating personalized video greetings or even crafting short video stories based on your vacation photos. The possibilities for personal video creation are vast.

Integration with Existing Platforms (Future Plans):

While details were scarce, Google hinted at future plans to integrate Veo with existing platforms like YouTube Shorts. This could allow creators to leverage Veo's capabilities within familiar workflows, further streamlining video content creation.

A Commitment to Responsible Development

Google acknowledged the potential for misuse of video generation technology. They emphasized their commitment to developing Veo responsibly, focusing on measures like:

Preventing Misinformation and Bias: Techniques are being implemented to ensure Veo doesn't generate misleading or biased content.

Transparency and User Education: Google plans to provide clear information about Veo's capabilities and limitations, educating users about responsible video creation practices.

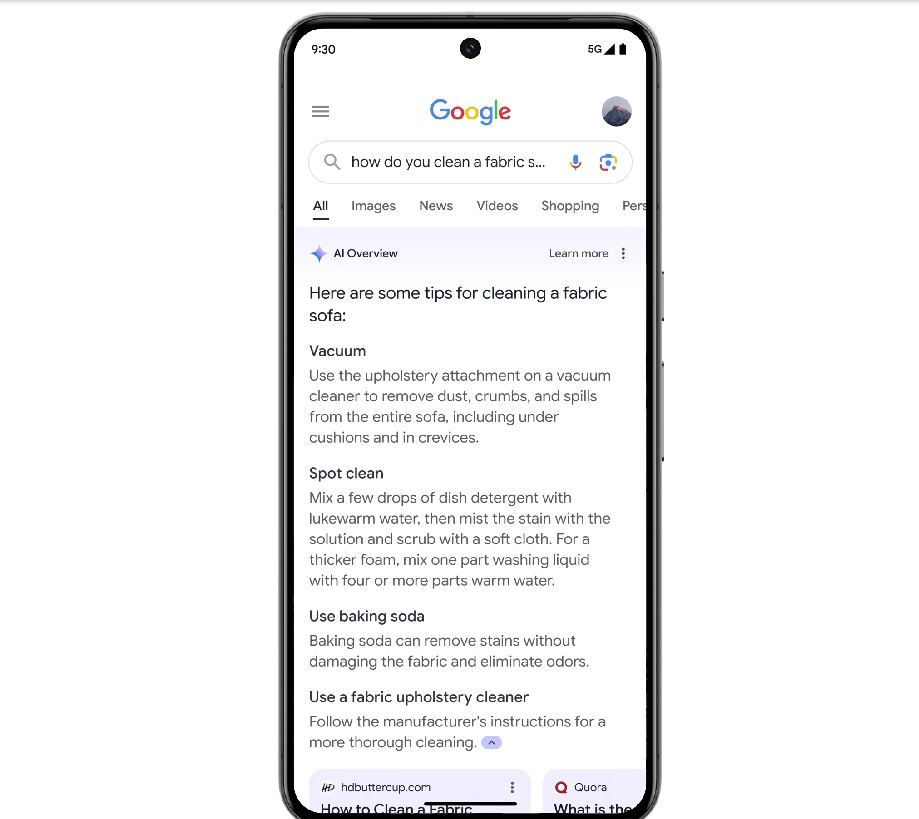

AI Overviews: A Pillar of the Generative Search Experience

AI overviews are a core feature of Google's integration of generative AI models, like Gemini, into Search. While details might not have been presented in a single session, the concept was likely woven throughout various talks and demonstrations. Here's how AI overviews were likely presented.

Understanding User Intent: A significant focus at Google I/O 2024 was on leveraging AI to understand user search intent more comprehensively. AI overviews directly benefit from this advancement. By analyzing your search query and context, these overviews provide summaries and insights tailored to your specific needs.

A More Organized Search Experience: AI overviews were likely positioned as a solution to information overload. Imagine searching for a complex topic; AI overviews would categorize results under relevant headings generated by AI, making it easier to navigate and understand the information presented.

Multiple Sources, Consolidated View: AI overviews wouldn't just present summaries; they'd likely draw insights from various sources beyond traditional web pages. Imagine searching for a historical figure; the AI overview might consolidate information from academic journals, news articles, and even documentaries to provide a comprehensive perspective.

Overall Impact of AI Overviews on Search

The introduction of AI overviews signifies a significant shift in the way we interact with search engines. Here are some potential benefits:

- Enhanced Efficiency: AI overviews empower users to grasp complex topics quickly by providing summaries and categorized information.

- Improved User Experience: The organization and presentation of information with AI overviews lead to a more user-friendly and intuitive search experience.

- Deeper Understanding: By drawing insights from various sources, AI overviews can provide a more comprehensive perspective on search topics.

PaliGemma: Unveiling a Powerful Open-Source Vision Language Model

Google I/O 2024 wasn't just about advancements in text-based AI; it also unveiled a groundbreaking new vision language model (VLM) called PaliGemma. This open-source model marks a significant step forward in computer vision and natural language processing, empowering developers and researchers to explore new frontiers in AI-powered applications.

Understanding the Visual World:

PaliGemma excels at analyzing and understanding visual information. Here are some of its key capabilities:

- Image Description: Provide PaliGemma with an image, and it will generate a detailed description of the content. Imagine using PaliGemma to understand a complex scientific diagram or get a clear picture of what's happening in a blurry vacation photo.

- Text Recognition and Understanding: PaliGemma can not only decipher text within images but also understand its meaning within the broader context. This allows it to answer questions like "What are the store hours on this sign?" or "What language is this document written in?"

- Object Recognition and Segmentation: PaliGemma can identify individual objects within an image and distinguish them from the background. This opens doors for various applications, such as image tagging, content moderation, or self-driving car development.
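
In practice, each of these capabilities is typically selected with a short task prefix in the text prompt that accompanies the image. The sketch below builds such prompts in plain Python. The prefixes (`caption`, `answer`, `detect`, `segment`) follow the conventions described in the published PaliGemma model card as best understood here, so treat them as assumptions and verify them against the documentation of the checkpoint you use. The helper function names are hypothetical, and no model is loaded or called.

```python
def caption_prompt(lang: str = "en") -> str:
    """Prompt asking for an image description in the given language."""
    return f"caption {lang}"


def question_prompt(question: str, lang: str = "en") -> str:
    """Prompt asking a question about the image, e.g. text on a sign."""
    return f"answer {lang} {question}"


def detect_prompt(*objects: str) -> str:
    """Prompt asking for bounding boxes of one or more object types."""
    if not objects:
        raise ValueError("at least one object name is required")
    return "detect " + " ; ".join(objects)


def segment_prompt(obj: str) -> str:
    """Prompt asking for a segmentation mask of one object type."""
    return f"segment {obj}"


if __name__ == "__main__":
    print(caption_prompt())
    print(question_prompt("What are the store hours on this sign?"))
    print(detect_prompt("car", "pedestrian"))
    print(segment_prompt("cat"))
```

These strings would be passed, together with the image, to whichever runtime you use to load the model.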

Open-Source Accessibility: Empowering the AI Community

A crucial aspect of PaliGemma's announcement is its open-source nature. This means the underlying code and architecture are freely available for anyone to access, modify, and build upon. Here's how this benefits the AI community:

- Faster Innovation: By making PaliGemma open-source, Google fosters collaboration and innovation within the AI research community. Developers can build upon PaliGemma's foundation to create even more powerful and versatile VLM applications.

- Reduced Barriers to Entry: The open-source model makes VLM technology more accessible to developers of all backgrounds and resource levels. This can lead to a wider range of creative VLM applications being developed.

Availability and Getting Started:

The announcement highlighted several ways to access and experiment with PaliGemma:

- Public Availability on Multiple Platforms: PaliGemma is readily available for download on GitHub, Hugging Face, Kaggle, and Vertex AI Model Garden. This allows developers to choose the platform that best suits their needs.

- Pre-Trained Model for Immediate Use: A pre-trained PaliGemma model is available, allowing developers to start experimenting and building applications right away without needing to train the model from scratch. This significantly reduces development time.

- Collaboration with NVIDIA: Google has partnered with NVIDIA to optimize PaliGemma for NVIDIA GPUs. This ensures smooth performance for developers utilizing NVIDIA hardware for their projects.

A Catalyst for VLM Innovation

While the initial announcement at Google I/O 2024 focused on the core functionalities of PaliGemma, the emphasis on its open-source nature suggests exciting advancements to come.

PaliGemma serves as a powerful foundation upon which developers can build, leading to a future filled with innovative VLM applications that shape the way we interact with the visual world around us.

Conclusion

Google I/O 2024 concluded with a sense of optimism and excitement about the future of technology. The conference showcased a plethora of groundbreaking advancements in various fields, from the mind-blowing capabilities of Imagen 3 and Veo to the powerful on-device AI with Gemini Nano on Android. Here are the key takeaways that paint a picture of what's to come:

- The Generative AI Revolution: Google I/O 2024 firmly established generative AI as a transformative force. Models like Imagen 3 and Veo empower users to create stunning images and videos based on simple text prompts, democratizing creative expression and streamlining content creation workflows.

- A More Helpful Search Experience: The integration of AI overviews in Search promises a significant shift in how we interact with information. By providing summaries and insights tailored to individual needs, AI overviews empower users to grasp complex topics with greater efficiency.

- AI on the Edge: On-Device Processing with Gemini Nano: The introduction of Gemini Nano on Android devices signifies a shift towards on-device AI processing. This not only improves privacy but also paves the way for faster and more responsive AI experiences on our mobile companions.

- A Brighter Future for Developers: Google's commitment to open-source initiatives like PaliGemma fosters collaboration and innovation within the AI community. This openness empowers developers of all levels to contribute to the advancement of AI technology and build upon the powerful foundations laid at Google I/O 2024.

FAQ

1. What was the biggest announcement at Google I/O 2024?

Generative AI Advancements: The unveiling of Imagen 3 for image generation and Veo for video creation based on text prompts grabbed a lot of attention.

These models represent a significant leap forward in AI-powered creative expression.

On-Device AI with Gemini Nano on Android: This announcement signifies a major shift for Android users.

Gemini Nano brings AI processing directly to your phone, enabling faster AI experiences, improved privacy, and exciting new features like multimodal understanding through sights and sounds.

2. How can I access the presentations and resources from Google I/O 2024?

Most of the keynote addresses, technical sessions, and other presentations are now available to watch online on the Google I/O website. You can also find blog posts, codelabs, and other resources related to the announcements on the Google Developers Blog and the Android Developers Blog.

3. When will the new features be available to the public?

The availability of the announced features varies depending on the specific technology. Here's a general breakdown:

Generative AI Models (Imagen 3, Veo): Public access details are still under development. Some limited access programs might be offered initially, with broader availability likely coming in the future.

On-Device AI with Gemini Nano on Android: This feature is expected to roll out on Pixel phones later in 2024, with expansion to other Android devices likely following in the future.

Open-Source Projects (PaliGemma): These projects are readily available for download and experimentation on platforms like GitHub and Hugging Face.

Remember to stay tuned to Labellerr's blog and Google's official channels for the latest updates on availability timelines for all the exciting announcements from Google I/O 2024.
