Fine-Tuning Segment Anything Model (SAM)

Learn how to fine-tune Segment Anything Model (SAM) for precise image segmentation tasks. Discover techniques like one-shot learning to fine-tune SAM efficiently and improve performance on specific datasets, ensuring high accuracy with limited data.

Table of Contents

- Key Features of SAM

- Significance of Fine-Tuning

- One-Shot Fine-Tuning Approach

- How to Fine-Tune Segment Anything Model (SAM) with One-Shot Learning

- FAQ

The Segment Anything Model (SAM) is a cutting-edge AI tool designed to revolutionize image segmentation tasks.

By dividing images into meaningful segments, SAM enhances object detection and analysis, making it invaluable across industries such as medical imaging, autonomous driving, and more.

However, to maximize its potential for specific applications, fine-tuning SAM is essential.

In this blog, we’ll explore the importance of fine-tuning SAM, delve into the one-shot learning approach for efficient fine-tuning, and guide you through the process to improve performance on specialized datasets.

Whether you're working with limited data or seeking high accuracy, this guide will show you how to fine-tune SAM for optimal results.

Key Features of SAM

1. Versatile Image Segmentation

SAM is highly versatile and can be applied to a wide variety of image segmentation tasks.

Whether it's segmenting objects in everyday photographs, medical scans, or satellite imagery, SAM delivers high accuracy and efficiency.

2. High Precision

SAM's architecture is designed to deliver high precision in identifying and segmenting objects within images.

This precision is crucial in applications where even minor errors, such as in medical diagnosis or autonomous vehicle navigation, can have significant consequences.

3. Scalability

SAM is scalable, meaning it can handle large datasets and complex images without a significant loss in performance.

This scalability makes it suitable for both small-scale projects and large, data-intensive applications.

4. Adaptability

One of SAM's key strengths is its adaptability. It can be fine-tuned to improve performance on specific tasks or datasets, making it a valuable tool for specialized applications.

Significance of Fine-Tuning

Fine-tuning is the process of adjusting a pre-trained model to better suit a specific task or dataset. For SAM, fine-tuning is particularly significant for several reasons:

1. Enhanced Performance

While SAM is highly capable out-of-the-box, fine-tuning allows it to be optimized for specific tasks.

This optimization can lead to significant improvements in performance on the target data, most notably higher segmentation accuracy.

2. Task-Specific Customization

Different tasks often require different approaches to segmentation.

Fine-tuning SAM enables it to be customized to the unique requirements of a specific task, whether it's identifying tumors in medical images or detecting obstacles in autonomous driving scenarios.

3. Improved Generalization

Fine-tuning SAM on a diverse set of examples helps the model generalize better to new, unseen data.

This means it can maintain high performance across a variety of conditions and image types.

4. Reduced Data Requirements

One of the most potent aspects of fine-tuning is its ability to achieve excellent results even with limited task-specific data.

This is particularly valuable when obtaining an extensive, annotated dataset is difficult or expensive.

One-Shot Fine-Tuning Approach

Definition of One-Shot Learning

One-shot learning is a machine learning paradigm where a model learns information about a category from a minimal number of examples, often just a single instance.

This contrasts with traditional methods that require extensive datasets with numerous annotated examples to achieve high performance.

In one-shot learning, the model leverages prior knowledge from previously learned tasks to quickly generalize and adapt to new tasks.

This approach mimics human learning, where people can often recognize and categorize new objects after seeing them only once.

Advantages over Traditional Fine-Tuning Methods

Efficiency

Traditional fine-tuning methods require large datasets and extensive computational resources.

One-shot learning significantly reduces the data and computational power needed, making it more efficient.

Speed

Because it requires fewer examples, one-shot learning speeds up the training process. This is crucial in time-sensitive applications where quick adaptation to new tasks is needed.

Resource Optimization

One-shot learning is particularly valuable when annotated data is scarce or expensive.

It maximizes the utility of limited data, making it an optimal choice for specialized or rare tasks.

Robust Generalization

One-shot learning encourages the model to generalize from limited data, potentially leading to better performance on unseen data compared to models fine-tuned on large, specific datasets.

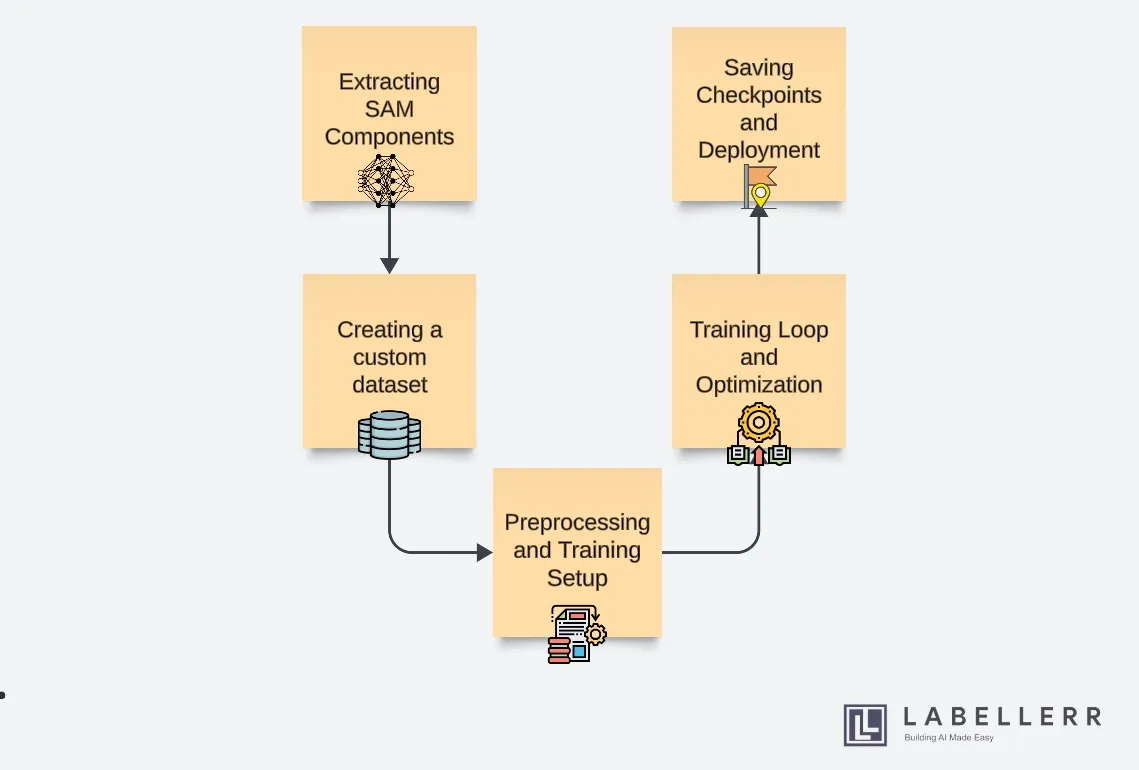

How to Fine-Tune Segment Anything Model (SAM) with One-Shot Learning

One of our clients approached us with a unique challenge: they needed to improve the segmentation of ships from aerial images using the Segment Anything Model (SAM).

By fine-tuning SAM with one-shot learning, we tailored the model to handle this specific task efficiently. These are the steps we followed to integrate this fine-tuning strategy with our model.

1. Extracting SAM Components

To fine-tune SAM for the ship segmentation task, we extracted its key components: the image encoder, prompt encoder, and mask decoder.

This step allowed us to prepare the mask decoder for fine-tuning using the principles outlined in the one-shot fine-tuning approach.
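As a minimal sketch of this step, the two encoders can be frozen so that only the mask decoder receives gradient updates. It assumes the model exposes `image_encoder`, `prompt_encoder`, and `mask_decoder` submodules, as the `Sam` class in the official `segment_anything` package does. `TinySam` is a hypothetical stand-in so the snippet runs without downloading a checkpoint; it is not the code we shipped to the client.

```python
import torch
from torch import nn


def freeze_all_but_mask_decoder(model: nn.Module) -> list:
    """Disable gradients everywhere except the mask decoder.

    Returns the list of parameters that remain trainable.
    """
    for name, param in model.named_parameters():
        param.requires_grad = name.startswith("mask_decoder.")
    return [p for p in model.parameters() if p.requires_grad]


class TinySam(nn.Module):
    """Hypothetical stand-in that mirrors SAM's three top-level components."""

    def __init__(self) -> None:
        super().__init__()
        self.image_encoder = nn.Linear(8, 8)
        self.prompt_encoder = nn.Linear(4, 8)
        self.mask_decoder = nn.Linear(8, 1)


model = TinySam()
trainable_params = freeze_all_but_mask_decoder(model)
print(len(trainable_params))  # 2: weight and bias of the stand-in decoder
```

Freezing by parameter-name prefix keeps the helper independent of the decoder's internals, so the same function should work on a real SAM instance as long as the attribute names match.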

2. Creating a Custom Dataset

Our client provided a dataset comprising aerial images of ships, ground truth segmentation masks, and bounding boxes around the ships.

We used platforms like Labellerr Dataview to organize and manage this data effectively. This dataset served as our foundation for applying the one-shot fine-tuning technique.
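To make the dataset layout concrete, here is one way a single record could be represented. The `ShipSample` class, its field names, and the file name are hypothetical, and the box is stored as `(x_min, y_min, x_max, y_max)`, which is an assumption about the annotation format. The helper derives a box from a binary mask, which is handy for checking that a provided box and mask agree.

```python
from dataclasses import dataclass
from typing import List, Tuple

# (x_min, y_min, x_max, y_max) in inclusive pixel indices; assumed format.
Box = Tuple[int, int, int, int]


@dataclass
class ShipSample:
    """Hypothetical record: one aerial image with its mask and box prompt."""
    image_path: str
    mask: List[List[int]]  # binary ground truth mask, 1 = ship pixel
    box: Box


def bbox_from_mask(mask: List[List[int]]) -> Box:
    """Return the tightest box that encloses all foreground pixels."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not rows:
        raise ValueError("mask contains no foreground pixels")
    return (min(cols), min(rows), max(cols), max(rows))


mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
sample = ShipSample(image_path="ship_0001.png", mask=mask, box=bbox_from_mask(mask))
print(sample.box)  # (1, 1, 3, 2)
```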

3. Preprocessing and Training Setup

To integrate the one-shot fine-tuning technique, we adapted SAM's preprocessing methods to follow the principles of the approach described above.

This involved resizing images, converting them into PyTorch tensors, and preparing them for efficient processing by the SAM model.
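The resizing arithmetic can be sketched in plain Python. The official `segment_anything` package scales each image so its longest side is 1024 pixels and rounds the other side to the nearest pixel; we assume that convention here. Box prompts have to be scaled by the same factors so they still line up with the resized image. The function names are illustrative.

```python
from typing import Tuple


def get_resized_shape(height: int, width: int, long_side: int = 1024) -> Tuple[int, int]:
    """Output (height, width) after scaling the longest side to `long_side`."""
    scale = long_side / max(height, width)
    return int(height * scale + 0.5), int(width * scale + 0.5)


def rescale_box(
    box: Tuple[float, float, float, float],
    original_hw: Tuple[int, int],
    long_side: int = 1024,
) -> Tuple[float, float, float, float]:
    """Map an (x_min, y_min, x_max, y_max) box into resized-image coordinates."""
    old_h, old_w = original_hw
    new_h, new_w = get_resized_shape(old_h, old_w, long_side)
    scale_x, scale_y = new_w / old_w, new_h / old_h
    x0, y0, x1, y1 = box
    return (x0 * scale_x, y0 * scale_y, x1 * scale_x, y1 * scale_y)


resized_shape = get_resized_shape(1500, 2000)
resized_box = rescale_box((200, 150, 1000, 750), (1500, 2000))
print(resized_shape)  # (768, 1024)
print(resized_box)
```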

In the training setup, we loaded the SAM model checkpoint and configured an Adam optimizer for the mask decoder.

The one-shot fine-tuning approach guided our selection of hyperparameters and optimization strategies to maximize the model's adaptability to ship segmentation tasks.

4. Training Loop and Optimization

During the training loop, we embedded images, generated prompt embeddings using bounding box coordinates, and refined the mask decoder's parameters based on the one-shot fine-tuning principles.
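The shape of that loop is sketched below on stand-in data. The random tensor plays the role of the frozen image encoder's output for a single training image, and a 1x1 convolution stands in for SAM's mask decoder. The binary cross-entropy loss, learning rate, and step count are assumptions chosen for illustration, not the settings we used.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-ins: in the real pipeline the embedding comes from SAM's
# frozen image encoder and the decoder is SAM's mask decoder.
embedding = torch.randn(1, 8, 16, 16)                    # (batch, channels, H, W)
target_mask = (torch.rand(1, 1, 16, 16) > 0.5).float()   # ground truth mask
decoder = nn.Conv2d(8, 1, kernel_size=1)                 # predicts per-pixel logits

optimizer = torch.optim.Adam(decoder.parameters(), lr=1e-2)  # assumed learning rate
loss_fn = nn.BCEWithLogitsLoss()                             # assumed loss

losses = []
for step in range(50):
    optimizer.zero_grad()
    logits = decoder(embedding)          # forward pass through the decoder only
    loss = loss_fn(logits, target_mask)  # compare prediction with ground truth
    loss.backward()                      # gradients flow only into the decoder
    optimizer.step()
    losses.append(loss.item())

print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.4f}")
```

Because the image embedding does not change while the encoder is frozen, it can be computed once and reused on every step, which is a large part of why training only the decoder is cheap.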

We optimized the model's performance by iteratively adjusting the parameters and evaluating the predicted masks against the ground truth masks.
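Comparing a predicted mask with its ground truth is commonly done with intersection over union (IoU) and the Dice coefficient. The sketch below computes both for binary masks stored as nested lists. Which metric to track is an assumption on our part, and the function name is illustrative.

```python
from typing import List, Tuple


def iou_and_dice(pred: List[List[int]], truth: List[List[int]]) -> Tuple[float, float]:
    """Return (IoU, Dice) for two binary masks of identical shape."""
    pred_flat = [bool(v) for row in pred for v in row]
    truth_flat = [bool(v) for row in truth for v in row]
    if len(pred_flat) != len(truth_flat):
        raise ValueError("masks must have the same number of pixels")

    intersection = sum(p and t for p, t in zip(pred_flat, truth_flat))
    pred_area, truth_area = sum(pred_flat), sum(truth_flat)
    union = pred_area + truth_area - intersection
    if union == 0:
        return 1.0, 1.0  # both masks empty: treat as a perfect match

    iou = intersection / union
    dice = 2 * intersection / (pred_area + truth_area)
    return iou, dice


predicted = [[1, 1, 0], [0, 1, 0]]
ground_truth = [[1, 0, 0], [0, 1, 1]]
iou, dice = iou_and_dice(predicted, ground_truth)
print(iou)  # 0.5
```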

5. Saving Checkpoints and Deployment

Upon achieving satisfactory results, we saved the fine-tuned model's state dictionary.

This allows our client to deploy the model seamlessly for ship segmentation tasks in real-world applications.
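Saving and restoring a state dictionary in PyTorch looks roughly like this. The `nn.Linear` layer is a hypothetical stand-in for the fine-tuned model, and the file name is made up for the example.

```python
import os
import tempfile

import torch
from torch import nn

fine_tuned = nn.Linear(8, 1)  # hypothetical stand-in for the fine-tuned model

with tempfile.TemporaryDirectory() as tmp_dir:
    # Hypothetical file name; any path works.
    checkpoint_path = os.path.join(tmp_dir, "sam_ships_finetuned.pth")
    torch.save(fine_tuned.state_dict(), checkpoint_path)

    # At deployment time: rebuild the same architecture, then load the weights.
    restored = nn.Linear(8, 1)
    restored.load_state_dict(torch.load(checkpoint_path, map_location="cpu"))

same_weights = all(
    torch.equal(a, b)
    for a, b in zip(fine_tuned.state_dict().values(), restored.state_dict().values())
)
print(same_weights)  # True
```

Saving the state dictionary rather than the whole model object keeps the checkpoint independent of the training script, at the cost of having to reconstruct the architecture before loading.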

FAQ

1. What is the Segment Anything Model (SAM)?

The Segment Anything Model (SAM) is a vision-based artificial intelligence model designed to segment objects within images.

It is versatile and can segment objects without the need for manual annotations by using promptable inputs such as points, boxes, or masks. This makes SAM highly effective in a variety of computer vision tasks.

2. Why is fine-tuning important for the Segment Anything Model?

Fine-tuning SAM is essential for adapting the model to specific tasks or datasets that differ from its original training data.

While SAM is pre-trained on a large and diverse dataset, fine-tuning allows the model to learn the nuances and characteristics of a specific dataset, leading to improved performance and accuracy for the target application.

3. What common steps are involved in fine-tuning the Segment Anything Model?

Fine-tuning SAM generally involves the following steps:

- Dataset Preparation: Collect and preprocess the dataset specific to the target task.

- Model Initialization: Load the pre-trained SAM weights.

- Prompt Engineering: Define the prompt inputs (e.g., points, boxes, or masks) that will guide the segmentation.

- Training: Train the model on the target dataset using the defined prompts, optimizing the model parameters.

- Evaluation: Assess the model's performance on a validation set and fine-tune hyperparameters as needed.

4. What prompts can be used to fine-tune the Segment Anything Model?

SAM can be fine-tuned using various types of prompts to guide the segmentation process. These include:

- Point Prompts: Specific points on the object to be segmented.

- Box Prompts: Bounding boxes around the object.

- Mask Prompts: Initial masks that roughly outline the object.

Choosing the appropriate prompt type depends on the task and the nature of the objects to be segmented.

5. What are the challenges in fine-tuning the Segment Anything Model?

Fine-tuning SAM can present several challenges, including:

- Dataset Quality: Ensuring the dataset is well-annotated and representative of the target domain.

- Computational Resources: Fine-tuning large models like SAM requires significant computational power and memory.

- Overfitting: Avoiding overfitting to the fine-tuning dataset, which can reduce the model's generalizability.

- Prompt Selection: Selecting and designing effective prompts that accurately guide the segmentation process for the target application.
