Top Large Language Models for Writers, Developers, and Marketers: A Comprehensive Comparison

Explore the best LLM with real-time data capabilities. Compare GPT-4, BARD, and other LLMs based on performance, multilingual support, and applications.

Struggling to find the perfect AI tool for your needs?

You're not alone.

According to Deloitte, “62% of business leaders express excitement about AI, but 30% are still uncertain about its impact on operations.”

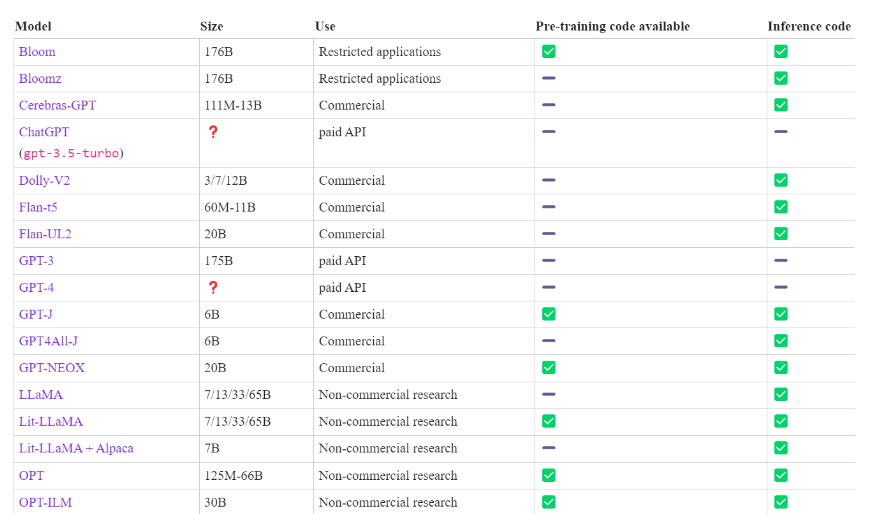

With an explosion of large language models (LLMs) like GPT, LLaMa, Flan-UL2, Bard, and Bloom, it’s no wonder many feel overwhelmed when trying to choose the right one.

Selecting the right AI tool can feel like a maze, especially with over Whether you’re crafting content, automating workflows, or brainstorming creative ideas, making the wrong choice could slow you down.

But what if finding the ideal LLM was simpler?

In this post, we’ll walk you through a straightforward comparison of top models, breaking down their strengths and best use cases.

Whether you're a writer looking for enhanced creativity, a marketer seeking automation, or a developer needing code assistance, we’ve got you covered. Keep reading to discover which AI can elevate your productivity and bring real value to your work.

Table of Contents

- Comparative Analysis of Advanced Language Models: GPT-4, BARD, LLaMA, Flan-UL2, and BLOOM

- Assessing LLM Performance in Real-Time Data Queries Outside of Training Data

- Analyzing Practical Applications and Scenario-Based Assessments

- Conclusion

- FAQ’s

Comparative Analysis of Advanced Language Models: GPT-4, BARD, LLaMA, Flan-UL2, and BLOOM

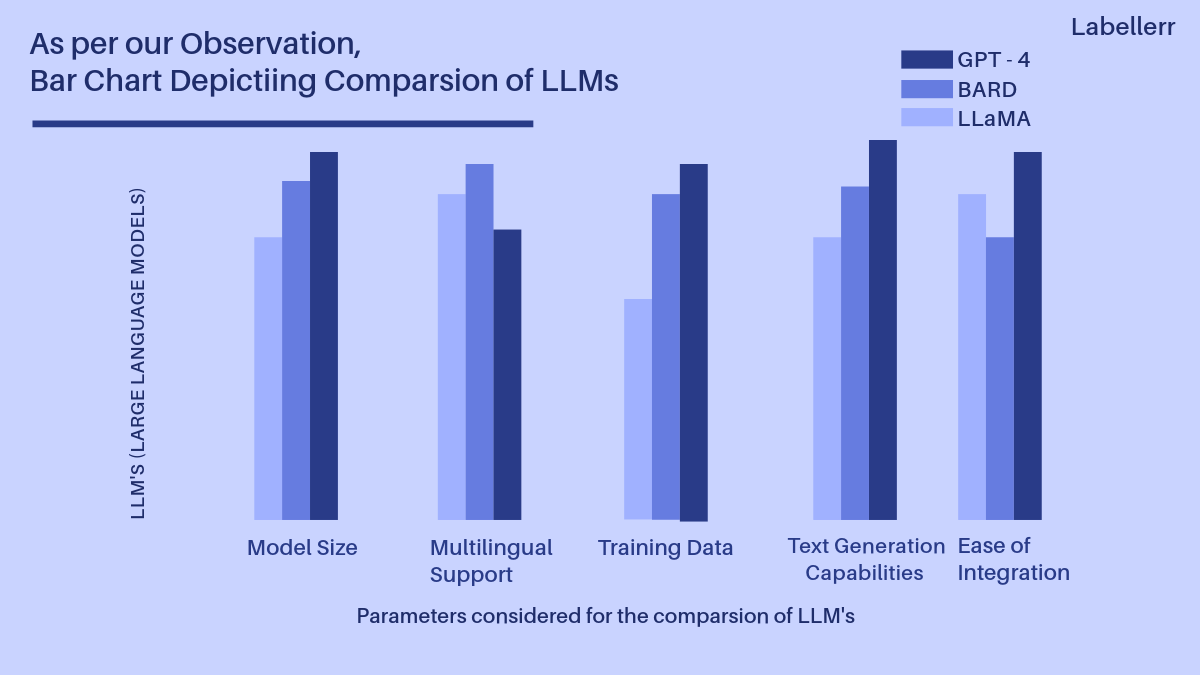

When evaluating Large Language Models (LLMs), several key parameters must be considered to determine their suitability for specific tasks and applications. In this section, we will compare GPT-4, BARD, LLaMA, Flan-UL2, and BLOOM based on various parameters:

Parameter 1: Model Size

- GPT-4: GPT-4 stands out with a massive parameter count of 1.5 Trillion, making it one of the largest LLMs available. This size enables it to capture intricate language patterns effectively.

- BARD: BARD boasts an impressive 1.6 Trillion parameters, similar to GPT-4. This substantial size empowers it to generate precise and contextually relevant text.

- LLaMA: LLaMA is no slouch, offering a substantial 1.2 Trillion parameters, which allows it to generate coherent text across various topics.

- Flan-UL2: Flan-UL2, although smaller in scale with 20 billion parameters, still excels in producing natural and consistent text based on partial input.

- BLOOM: BLOOM boasts 176 billion parameters, which, while smaller compared to GPT-4 and BARD, enables it to discern detailed linguistic patterns and optimize text generation.

Parameter 2: Multilingual Support

- GPT-4: GPT-4 provides robust multilingual support, allowing it to understand and generate text in multiple languages, enhancing its global applicability.

- BARD: BARD supports various languages, making it a valuable tool for tasks requiring scientific and domain-specific language comprehension.

- LLaMA: LLaMA offers multilingual support, enabling users to interact with the model in their preferred language, fostering cross-lingual communication.

- Flan-UL2: Flan-UL2 excels in multilingual support, allowing it to comprehend and generate text across diverse linguistic scenarios, facilitating communication.

- BLOOM: BLOOM can optimize text generation in various languages, overcoming language barriers and enabling multilingual language optimization.

Parameter 3: Training Data

- GPT-4: GPT-4's training data is curated from a diverse range of textual sources, providing it with a broad understanding of language and knowledge across domains.

- BARD: BARD's training corpus includes scientific manuscripts, research papers, books, and articles, emphasizing scientific reasoning and terminology.

- LLaMA: LLaMA's training dataset spans various topics, genres, and linguistic variations, ensuring a comprehensive understanding of language subtleties.

- Flan-UL2: Flan-UL2's training data encompasses numerous textual resources, granting it a holistic understanding of language and language structures.

- BLOOM: BLOOM's training corpus draws from literature, articles, and relevant content, focusing on language refinement and optimization modeling.

Parameter 4: Text Generation Capabilities

- GPT-4: GPT-4's massive size results in improved text generation, understanding, and contextual coherence, making it a versatile tool for various applications.

- BARD: BARD stands out with its ability to generate scientifically precise and in-depth explanations, showcasing superior reasoning capabilities.

- LLaMA: LLaMA excels in text completion and semantic comprehension, enabling it to provide accurate and enlightening responses, especially with multimodal inputs.

- Flan-UL2: Flan-UL2 offers superior text completion abilities, generating natural and consistent text based on partial input, suitable for a wide range of language-centric tasks.

- BLOOM: BLOOM specializes in text optimization, allowing users to generate text that adheres to specific parameters like style, tone, or readability.

Parameter 5: Ease of Integration

- GPT-4: Accessing GPT-4's capabilities is facilitated through the OpenAI API, enabling developers and researchers to seamlessly integrate it into their applications and projects.

- BARD: BARD provides a dedicated API for frictionless integration, allowing users to utilize its advanced language processing prowess.

- LLaMA: LLaMA offers a user-friendly interface designed for effortless integration, enabling users to incorporate its language generation competencies into their applications and creative pursuits.

- Flan-UL2: Flan-UL2's easily navigable interface simplifies the integration of its language generation capabilities into various applications and platforms.

- BLOOM: BLOOM offers an intuitive interface for straightforward integration, allowing users to leverage its language optimization skills.

Each of these LLMs excels in different aspects, catering to a range of tasks and applications.

The choice among them depends on specific requirements, such as text generation, language comprehension, or multilingual support, aligning with the unique needs of users and developers.

Assessing LLM Performance in Real-Time Data Queries Outside of Training Data

After all preparations were in place, we commenced a barrage of inquiries towards the models – from some which are straight forward to others that posed a more intricate challenge:

(i) What is Elon's latest Twitter handle?

(ii) What adds humor to the fact that he's unable to modify it?

(iii) Craft a jest in relation to this.

(iv) What's the origin story behind this?

Open source models performed admirably with basic questions, but they struggled with humor and irony.

Flan-t5's responses stood out as the most human-like and natural, making it the clear winner in this category.

The effort put into fine-tuning was unquestionably worthwhile for applications like the one in this blog.

Flan-t5 and Flant5

- What’s Elon’s new Twitter username?

(i) Flant5 11B - Mr. Tweet

(ii) t5 11B - on”’on””””””’.” “Mr. Tweet is a good name,” he wrote. “It’s probably an accurate description,”

2. Why is it funny that he cannot change it?

(i) Flant5 11B - The irony that the owner and CEO of Twitter couldn’t change his own name

(ii) t5 11B - . Musk”””””””ked. “It’s probably an accurate description,” another user wrote. “Have you tried calling the help desk?” “Have you tried calling the help desk

3. Make a joke about this

(i) Flant5 11B - he must now seemingly go by the name “Mr. Tweet” for the foreseeable future.

(ii) t5 11B - provided ”””””””””” “Mr. Tweet in the house,” he wrote. “Mr. Tweet in the house,” she wrote.

4. How did this get started?

(i) Flant5 11B - Musk inadvertently received the nickname from a lawyer while he was in court this week

(ii) t5 11B - Musk””””””””’ how Musk got stuck with the name. “Mr. Tweet in the house,” he wrote.ifer”.

Models under the OpenRail License are effective but may lack important details and humor.

It's important to note that these models come with usage restrictions aimed at preventing harm, though these restrictions can be complex and require careful consideration.

Bloomz 176B and Bloom 176B

- What’s Elon’s new Twitter username?

(i) Bloomz 176B - Mr. Tweet

(ii) Bloom 176B - Is Elon’s new Twitter username ‘Mr. Tweet’, or is it something else? It is his new username. His (official) Twitter username is @elonmusk (with the @ removed). So”

2. Why is it funny that he cannot change it?

(i) Bloomz 176B -he is the owner and CEO of Twitter

(ii) Bloom 176B- . Is Elon the one with the problem? Or is Mr. Tweet the one with the problem? What makes the situation funny, exactly?

If the answer to that is that Mr. Tweet (aka Musk) doesn’t see the problem (because Musk is often not looking at the problems of others and has his own goals

3. Make a joke about this

(i) Bloomz 176B- Twitter users

(ii) Bloom 176B - Is Elon the one with the problem? Or is Mr. Tweet the one with the problem?

4. How did this get started?

(i) Bloomz 176B- A lawyer called Musk “Mr. Tweet” in court

(ii) Bloom 176B - s Elon the one with the problem? Or was the lawyer the one with the problem? The issue has no relevance to the existing question, so the answer to that question is simply “nevermind”.

Open-source research models showed improvement in generating responses, surpassing commercial open-source models.

They acknowledged the humorous aspects of the article, such as Elon Musk's attempt to change the world but not his own name or his role as the CEO of Twitter.

However, these models struggled to create genuinely funny jokes and sometimes repeated content from the article instead of generating natural responses.

Notably, Lit-LLaMA occasionally provided responses in LaTeX format, showing a quirky side.

Regarding their origins, the models utilized the Lit-LLaMA repository and weights from LLaMA, specifically for research purposes, as using them otherwise would violate the license.

OPT 30B and Lit-LLaM+ Llama weights 7B

- What’s Elon’s new Twitter username?

(i) OPT 30B- Mr. Tweet

(ii) Lit-LLaMA + Llama weights 7B - Mr. Tweet

2. Why is it funny that he cannot change it?

(i) OPT 30B - It’s not the first time a celebrity has found themselves stuck with a Twitter name they didn’t want

(ii) Lit-LLaMA + Llama weights 7B- The answer is that the name “Mr. Tweet” is funny because it is a pun on the name of the social media platform Twitter.

3. Make a joke about this

(i) OPT 30B- Have you tried calling the help desk?

(ii) Lit-LLaMA + Llama weights 7B - Mr. Tweet please, let me be Elon again.

Answer: Hey Mr. Tweet, how’s the Tesla trial going? Answer: Mr. Tweet in the house… Answer: I personally think Mr. Tweet is better—has more person

4. How did this get started?

(i) OPT 30B- The lawyer who is cross-examining Elon Musk accidentally just called him ‘Mr. Tweet’ instead of ‘Mr. Musk.’

(ii) Lit-LLaMA + Llama weights 7B- No response Unknown environment 'blockquote'

Private models clearly excelled compared to others, eliciting even a few laughs.

Without a doubt, these models lived up to their reputation. GPT3 and GPT4 certainly showcased a great sense of humor, particularly excelling in delivering humorous dad jokes.

- What’s Elon’s new Twitter username?

(i) GPT 4- Mr. Tweet

(ii) GPT 3.5 - Elon’s new Twitter username is “Mr. Tweet”.

2. Why is it funny that he cannot change it?

(i) GPT 4- It is funny that Elon Musk cannot change his Twitter name because he is the owner and CEO of Twitter, and one would expect him to have the power or ability to change his own name on the platform. The irony of the situation is what makes it amusing.

(ii) GPT 3.5 - It is funny that Elon Musk cannot change his Twitter screen name because he is the owner and CEO of Twitter, yet he is stuck with a nickname given to him by a lawyer in court.

3. Make a joke about this

(i) GPT 4- Why did Elon Musk become a bird watcher? Because he’s now an expert at spotting Mr. Tweets!

(ii) GPT 3.5 - Looks like Elon Musk has got a new job title – “Mr. Tweet”! I guess we can officially say that Twitter owns him now.

4. How did this get started?

(i) GPT 4- This got started when a lawyer accidentally called Elon Musk “Mr. Tweet” instead of “Mr. Musk” while he was in court for the Tesla shareholder trial.

(ii) GPT 3.5 - Elon Musk received the nickname “Mr. Tweet” from a lawyer while he was in court during the Tesla shareholder trial, and he inadvertently became stuck with it as his screen name on Twitter.

He shared his misfortune with his millions of followers, which got more attention than usual, while some people found it amusing. It’s not the first time.

Analyzing Practical Applications and Scenario-Based Assessments

Drawing insights from the aforementioned experiments, we can comprehensively delineate the diverse application scenarios of products underpinned by LLM (Large Language Model) models.

These insights shed light on the nuanced capabilities and functionalities of these products, offering a multifaceted perspective for analysis.

To expound further, the results of these experiments enable us to discern the distinctive strengths and weaknesses of these LLM-based products across various contexts.

By scrutinizing their performance in real-world scenarios, we can glean valuable insights into their utility and potential impact.

This analysis serves as a valuable resource for stakeholders, researchers, and decision-makers seeking to harness the power of LLM models in their respective domains.

Conclusion

The evaluation of various Large Language Models (LLMs) has revealed their diverse capabilities and applications.

These models, differing in size, multilingual support, training data, and text generation strengths, offer versatile solutions for language-related tasks.

Private models like GPT 3.5 and GPT 4 shine in performance but come at a higher cost, while open-source models, especially when fine-tuned, provide cost-effective options. LLaMA 7B excels in explanation but may struggle with humor.

Choosing the right LLM hinges on specific needs, budget, and context, and framing questions appropriately is crucial for optimal results.

The ever-evolving LLM landscape continues to open doors to innovative applications, from content generation to language comprehension, catering to diverse user requirements.

Uncertain which large language model (LLM) to choose?

Read our other blog on the key differences between Llama 2, GPT-3.5, and GPT-4.

FAQs

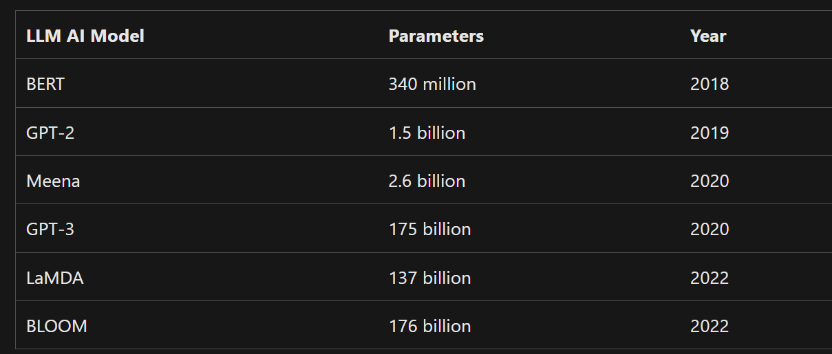

1. What is a baseline comparison rubric for LLM AIS?

LLM AI models are typically evaluated based on the number of parameters they possess, with larger models generally being favored. The number of parameters reflects the model's size and complexity, and a higher parameter count allows the model to handle, learn from, and generate more data.

2. How do we compare LLM performance & quality?

We assess our LLMs using three key metrics:

1. Accuracy: Reflects how often the model provides correct responses.

2. Semantic Text Similarity: Measures the model's ability to convey the intended meaning, regardless of the exact wording.

3. Robustness: Evaluate the model's performance under diverse and challenging inputs, ensuring consistent performance.

3. What is the difference between LLM and NLP?

Natural Language Processing (NLP) falls under the umbrella of artificial intelligence (AI) and focuses on creating algorithms and techniques for working with human language.

It encompasses a wider scope than Large Language Models (LLM), which specifically refer to AI models and techniques for processing and generating text.

NLP utilizes two main approaches: machine learning and the analysis of language data to enable computers to understand and work with human language more effectively.

Simplify Your Data Annotation Workflow With Proven Strategies

.png)