Is Your AI Smart Enough? Test It with ARC AGI v2!

ARC-AGI-2 tests AI reasoning with abstract tasks that go beyond memorization. It evaluates how well models recognize patterns, solve problems, and generalize knowledge.

For years, AI models impressed us by scoring high on specific tests. But acing tests on known data doesn't mean an AI can truly think. We faced an illusion of intelligence, where AI often mimicked understanding rather than possessing it.

Many AI models became good at repeating patterns found in their massive training data – like "stochastic parrots."

They aced narrow benchmarks but failed when faced with genuinely new problems.

We needed a test that measured real problem-solving ability, not just pattern repetition. ARC-AGI-2 was designed specifically to test fluid intelligence – the power to adapt and solve tasks you have never seen before.

What Problem Does ARC-AGI-2 Solve?

Launched on March 24, 2025, ARC-AGI-2 directly tackles this evaluation crisis. It builds on François Chollet’s view that intelligence is the efficiency with which a system learns new skills, and turns that view into a concrete test.

It measures not just whether an AI can solve a novel problem (capability), but also how efficiently it does so (cost per task).

It acts as a litmus test for fluid intelligence by presenting tasks that are easy for humans but baffling for current AI, revealing core gaps in reasoning.

What Makes ARC-AGI-2 Unique?

Core Mechanics

The test uses puzzles based on grids (from 1x1 up to 30x30) with colored squares (up to 10 colors).

The AI must study a few input-output examples, work out the hidden transformation rule through abstraction, and apply it correctly to a new input grid.

This requires few-shot generalization: inferring a rule from only a handful of demonstrations.
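To make the format concrete, here is a minimal sketch that assumes the JSON layout used in the public ARC-AGI repositories: a `train` list of demonstration pairs and a `test` list, with each grid stored as a list of lists of integers 0-9 (one integer per colored cell). The toy task and its "mirror" rule are invented for illustration, and `validate_grid` and `mirror` are hypothetical helpers.

```python
import json

# A made-up toy task in the assumed ARC JSON layout. Its hidden rule is
# "mirror the grid left to right"; real tasks are far harder.
TOY_TASK_JSON = """
{
  "train": [
    {"input": [[1, 0, 0], [0, 2, 0]], "output": [[0, 0, 1], [0, 2, 0]]},
    {"input": [[3, 3, 0]], "output": [[0, 3, 3]]}
  ],
  "test": [
    {"input": [[0, 5], [4, 0]]}
  ]
}
"""


def validate_grid(grid):
    """Check the stated constraints: rectangular, 1x1 to 30x30, colors 0-9."""
    if not 1 <= len(grid) <= 30:
        return False
    width = len(grid[0])
    if not 1 <= width <= 30:
        return False
    return all(
        len(row) == width and all(isinstance(c, int) and 0 <= c <= 9 for c in row)
        for row in grid
    )


def mirror(grid):
    """Candidate rule: reverse every row."""
    return [row[::-1] for row in grid]


task = json.loads(TOY_TASK_JSON)
assert all(validate_grid(p["input"]) and validate_grid(p["output"]) for p in task["train"])

# A solver sees only the train pairs and the test input. Here we check that
# the candidate rule explains every demonstration, then apply it to the test.
if all(mirror(p["input"]) == p["output"] for p in task["train"]):
    print(mirror(task["test"][0]["input"]))  # [[5, 0], [0, 4]]
```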

Human Validation

Every task in the calibrated ARC-AGI-2 evaluation sets was solved by at least two different people in two attempts or fewer during controlled studies.

This indicates that the tasks rely on general reasoning that ordinary people possess, not on specialized knowledge or tricks.

Efficiency Metric

In a key departure from ARC-AGI-1, this version tracks cost per task, rewarding solutions that are not only correct but also resource-efficient.
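As a rough illustration of why cost per task matters, the sketch below compares two hypothetical evaluation runs that solve the same number of tasks at very different compute costs. Cost per task is total compute spend divided by the number of tasks attempted. The `EvalRun` class and all figures are invented, and breaking ties by cost is an illustrative choice, not the official ranking rule.

```python
from dataclasses import dataclass

# Approximate per-task budget quoted in this article for the Kaggle competition.
BUDGET_PER_TASK_USD = 0.42


@dataclass
class EvalRun:
    """Summary of one evaluation run; every number used below is hypothetical."""
    name: str
    tasks_solved: float   # pass@2 score summed over tasks
    num_tasks: int
    total_cost_usd: float  # compute spend for the whole run

    @property
    def accuracy(self) -> float:
        return self.tasks_solved / self.num_tasks

    @property
    def cost_per_task(self) -> float:
        return self.total_cost_usd / self.num_tasks


runs = [
    EvalRun("solver_a", tasks_solved=6, num_tasks=120, total_cost_usd=48.0),
    EvalRun("solver_b", tasks_solved=6, num_tasks=120, total_cost_usd=2400.0),
]

for r in runs:
    print(f"{r.name}: accuracy={r.accuracy:.1%}, cost/task=${r.cost_per_task:.2f}")

# Same accuracy, so prefer the cheaper run (illustrative tie-break only).
best = min(runs, key=lambda r: (-r.accuracy, r.cost_per_task))
print(best.name, best.cost_per_task <= BUDGET_PER_TASK_USD)
```

Both runs reach 5.0% accuracy, but only one fits within the per-task budget; an accuracy-only leaderboard would not distinguish them.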

Resistance to Brute Force

The design aims to stop AI models from succeeding simply by throwing massive computing power at guessing or exhaustively searching for answers. Solving a task should require actually inferring its rule.

Focus on Fluid Intelligence

The core aim is testing the AI's ability to adapt and reason when faced with completely novel situations it hasn't been trained on.

Tasks That Break Modern AI

ARC-AGI-2 targets specific areas where even the most advanced AI systems currently fail:

- Symbolic Interpretation: Understanding that symbols on the grid mean something more than just their visual pattern.

- Compositional Reasoning: Applying multiple rules at the same time, especially when those rules interact with each other.

- Context-Sensitive Rules: Figuring out that a rule needs to be applied differently depending on the situation or context within the grid.
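To make "context-sensitive" concrete, here is an invented rule in the spirit of these tasks (it is not an actual ARC-AGI-2 task): every object, meaning a 4-connected group of non-background cells, is recolored according to its size, so cells of the same input color end up different colors depending on the object they belong to. A solver would have to discover both the object segmentation and the size threshold from the examples alone. The colors and the threshold of 3 are hypothetical.

```python
from collections import deque

BACKGROUND = 0
LARGE_COLOR, SMALL_COLOR = 2, 1  # hypothetical output colors for this toy rule


def objects(grid):
    """Return 4-connected components of non-background cells as lists of (row, col)."""
    rows, cols = len(grid), len(grid[0])
    seen = set()
    comps = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == BACKGROUND or (r, c) in seen:
                continue
            comp, queue = [], deque([(r, c)])
            seen.add((r, c))
            while queue:  # breadth-first flood fill
                y, x = queue.popleft()
                comp.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and (ny, nx) not in seen and grid[ny][nx] != BACKGROUND):
                        seen.add((ny, nx))
                        queue.append((ny, nx))
            comps.append(comp)
    return comps


def recolor_by_size(grid, threshold=3):
    """Toy rule: objects with >= threshold cells become LARGE_COLOR,
    smaller ones SMALL_COLOR, regardless of their original color."""
    out = [row[:] for row in grid]
    for comp in objects(grid):
        color = LARGE_COLOR if len(comp) >= threshold else SMALL_COLOR
        for y, x in comp:
            out[y][x] = color
    return out


grid = [
    [5, 5, 0, 5],
    [5, 0, 0, 0],
    [0, 0, 5, 5],
]
print(recolor_by_size(grid))  # [[2, 2, 0, 1], [2, 0, 0, 0], [0, 0, 1, 1]]
```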

These challenges help explain why even powerful Large Language Models (LLMs) such as GPT-4.5 and Claude 3.7 scored 0% on ARC-AGI-2 at launch: they lack the deep, flexible reasoning these abstract visual puzzles demand.

How to Test Your Model with ARC-AGI-2

The official evaluation runs through the ARC Prize 2025 competition on Kaggle.

Step-by-Step Evaluation Guide

- Step 1: Access Resources: Get the ARC-AGI-2 datasets (especially the public training and evaluation sets) and carefully read the official rules and guide on the ARC Prize website and the Kaggle competition page.

- Step 2: Run Offline Evaluation: You must run your model within the Kaggle notebook environment.

Crucially, notebooks have no internet access during evaluation runs, so your model must be entirely self-contained.

The competition enforces strict compute limits (roughly $0.42 of compute per task).

- Step 3: Submit via Kaggle: Submit your solution code through the Kaggle competition platform between March 26 and November 3, 2025.

Kaggle automatically runs your code against hidden test sets (Semi-Private for the live leaderboard, Private for the final ranking) and calculates your score with the pass@2 method, which allows two attempts per test input.
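Before submitting, it can help to reproduce pass@2 scoring locally on the public evaluation set. The sketch below assumes the convention used in recent ARC Prize Kaggle competitions: `submission.json` maps each task ID to a list with one entry per test input, each holding `attempt_1` and `attempt_2` grids. It also assumes a test input counts as solved if either attempt matches the expected grid exactly, and that a task's score is the fraction of its test inputs solved. Check both conventions against the current competition rules. `predict_two` is a hypothetical stand-in for a real solver, and the toy tasks are invented.

```python
import json


def predict_two(task):
    """Hypothetical solver stub: two candidate grids per test input.
    Attempt 1 copies the input; attempt 2 mirrors it left to right."""
    preds = []
    for pair in task["test"]:
        grid = pair["input"]
        preds.append({
            "attempt_1": [row[:] for row in grid],
            "attempt_2": [row[::-1] for row in grid],
        })
    return preds


def build_submission(challenges):
    return {task_id: predict_two(task) for task_id, task in challenges.items()}


def pass_at_2_score(submission, solutions):
    """Mean per-task score; a test input is solved if either attempt matches exactly."""
    total = 0.0
    for task_id, expected_outputs in solutions.items():
        attempts = submission.get(task_id, [])
        solved = sum(
            1
            for i, expected in enumerate(expected_outputs)
            if i < len(attempts)
            and expected in (attempts[i]["attempt_1"], attempts[i]["attempt_2"])
        )
        total += solved / len(expected_outputs)
    return total / len(solutions)


# Toy data in the assumed format: solutions map task ID -> list of output grids.
challenges = {
    "toy_mirror": {"train": [], "test": [{"input": [[1, 0]]}]},
    "toy_fill": {"train": [], "test": [{"input": [[0, 0]]}, {"input": [[3]]}]},
}
solutions = {
    "toy_mirror": [[[0, 1]]],
    "toy_fill": [[[7, 7]], [[3]]],
}

submission = build_submission(challenges)
with open("submission.json", "w") as f:
    json.dump(submission, f)
print(pass_at_2_score(submission, solutions))  # 0.75
```

Here `toy_mirror` is solved by its second attempt and `toy_fill` gets one of two test inputs right, giving (1.0 + 0.5) / 2 = 0.75.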

ARC-AGI-2 Leaderboard and Performance

The Kaggle leaderboard shows current standings based on accuracy on the Semi-Private set. However, the performance gap revealed by ARC-AGI-2 is the real story.

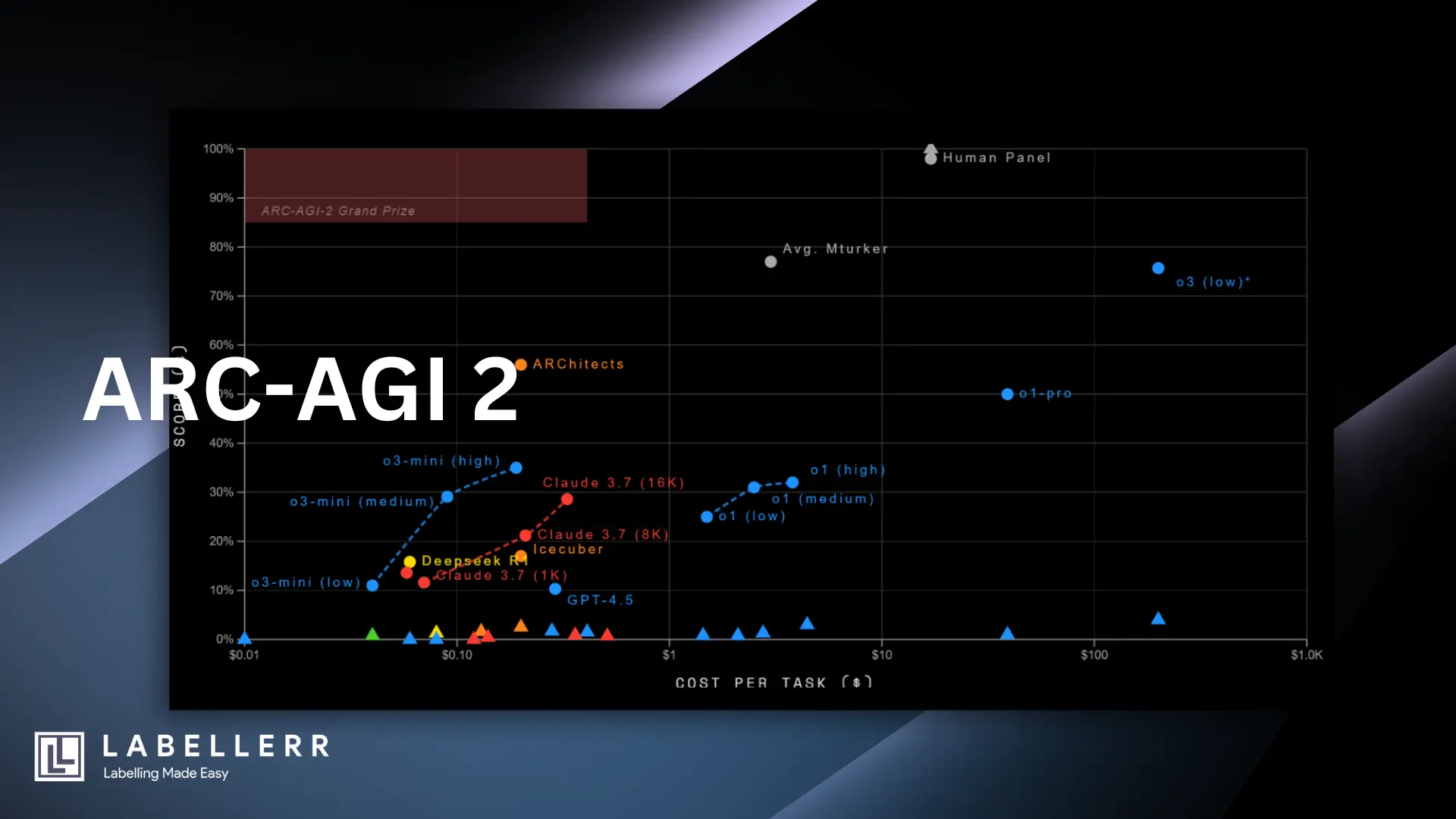

Look at this comparison:

The graph shows that humans solve the tasks reliably, while pure LLMs score at or near 0% on ARC-AGI-2.

Even the best specialized AI reasoning systems score below 4%, often with very high compute costs. Efficiency is a major hurdle alongside raw capability.

ARC-AGI-2 vs. Other Reasoning Benchmarks

How does ARC-AGI-2 compare to other tests claiming to measure AI reasoning?

Battle of the Benchmarks

- ARC vs. GAIA (from Meta): ARC focuses on abstract reasoning across diverse, novel puzzle types. GAIA tests general AI assistants on real-world questions that typically require web browsing, tool use, and handling multimodal inputs.

- ARC vs. BIG-bench: ARC targets deep, flexible reasoning on unfamiliar problems. BIG-bench covers a broader range of tasks, many testing specific knowledge or narrower reasoning skills.

- ARC vs. Human Exams (like MMLU): ARC tests the ability to solve truly new problems (fluid intelligence).

Benchmarks like MMLU often test knowledge learned from training data, closer to testing memorization or "crystallized intelligence".

Why ARC-AGI-2 Is the Gold Standard

ARC-AGI-2 stands out because it directly tests the core traits many researchers believe are essential for Artificial General Intelligence (AGI):

- Abstraction: Seeing beyond surface details to grasp underlying concepts.

- Reasoning: Applying those concepts logically to solve problems.

- Adaptation: Learning and adjusting quickly based on minimal new information.

By focusing purely on these skills in a novel context, ARC provides a cleaner signal of genuine reasoning ability.

Building a Competitive Model for ARC-AGI-2

Developing an AI that excels at ARC-AGI-2 requires approaches that differ from standard deep learning.

Architectures That Work

Promising research directions include:

- Neuro-Symbolic Fusion: Combining the pattern-recognition strengths of neural networks (like transformers) with the logical rigor of symbolic AI systems (like logic engines or rule-based systems).

- Program Synthesis: Designing AI that writes small pieces of code (programs) to represent the transformation rule needed to solve each task.

DeepMind's work on AlphaCode showed the potential of code generation for problem-solving.

- Self-Supervised Pretraining on Synthetic Tasks: Training models beforehand on a vast number of automatically generated, ARC-like tasks.

This helps them learn general reasoning heuristics before tackling the official benchmark.
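As a toy illustration of program synthesis, the sketch below enumerates compositions of a few whole-grid primitives and returns the shortest program consistent with every training pair. The four-primitive DSL and the task are invented for this example; practical ARC DSLs are far larger, which is exactly where plain enumeration stops scaling.

```python
from itertools import product

# A tiny, made-up DSL of whole-grid transformations.
PRIMITIVES = {
    "flip_h": lambda g: [row[::-1] for row in g],          # mirror left-right
    "flip_v": lambda g: [row[:] for row in g[::-1]],       # mirror top-bottom
    "transpose": lambda g: [list(col) for col in zip(*g)],
    "identity": lambda g: [row[:] for row in g],
}


def run(program, grid):
    """Apply a sequence of primitive names left to right."""
    for name in program:
        grid = PRIMITIVES[name](grid)
    return grid


def synthesize(train_pairs, max_depth=3):
    """Return the shortest primitive sequence that maps every train input
    to its output, or None if nothing up to max_depth fits."""
    for depth in range(1, max_depth + 1):
        for program in product(PRIMITIVES, repeat=depth):
            if all(run(program, p["input"]) == p["output"] for p in train_pairs):
                return program
    return None


# Hidden rule in this toy task: rotate the grid 90 degrees clockwise.
train = [
    {"input": [[1, 2], [3, 4]], "output": [[3, 1], [4, 2]]},
    {"input": [[5, 0, 0]], "output": [[5], [0], [0]]},
]
program = synthesize(train)
print(program)                   # ('flip_v', 'transpose')
print(run(program, [[7, 8, 9]]))  # [[7], [8], [9]]
```

The search found a two-step program equivalent to a clockwise rotation even though no "rotate" primitive exists, which is the appeal of composing small building blocks.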

Pitfalls to Avoid

Teams should be careful about:

- Overfitting to Public Tasks: Do not just train your model to memorize solutions to the publicly available ARC tasks.

The private test set will contain entirely new tasks, so optimizing too heavily for the live leaderboard score can be a trap.

- Ignoring Compute Limits: Your solution must be efficient. Submissions that exceed the Kaggle notebook's hardware and runtime limits will not be scored. Design for efficiency from the start.
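One way to design for the compute limit from the start is to give every task a fixed time budget and fall back to a cheap default when it runs out. The sketch below assumes a total runtime budget split evenly across tasks; the 12-hour figure, the safety margin, and the `solve_with_deadline` and `solve_all` helpers are hypothetical, so take the real limits from the competition rules.

```python
import time

# Assumed overall runtime budget; check the current competition rules for
# the real limit. The 10% margin leaves time for I/O and writing output.
TOTAL_BUDGET_S = 12 * 60 * 60
SAFETY_MARGIN = 0.9


def solve_with_deadline(task, candidates, deadline):
    """Consume (grid, accepted) pairs from a hypothetical anytime search until
    one is accepted or the deadline passes. The clock is checked between
    candidates, so a single slow step can still overrun."""
    for grid, accepted in candidates(task):
        if accepted:
            return grid
        if time.monotonic() >= deadline:
            break
    # Cheap fallback when time runs out: echo the first test input.
    return [row[:] for row in task["test"][0]["input"]]


def solve_all(tasks, candidates, total_budget_s=TOTAL_BUDGET_S):
    """Split the budget evenly across tasks and solve each under its deadline."""
    per_task_s = total_budget_s * SAFETY_MARGIN / max(len(tasks), 1)
    results = {}
    for task_id, task in tasks.items():
        deadline = time.monotonic() + per_task_s
        results[task_id] = solve_with_deadline(task, candidates, deadline)
    return results


def never_accepts(task):
    """Toy search that keeps proposing but never finds a fitting rule."""
    while True:
        yield None, False


demo = {"t1": {"train": [], "test": [{"input": [[4]]}]}}
print(solve_all(demo, never_accepts, total_budget_s=0.05))  # {'t1': [[4]]}
```

Because the deadline is checked cooperatively, each search step should be kept short; a step that blocks for minutes cannot be interrupted by this loop.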

Lessons from Top Performers

Learning from past successes and failures is key.

Team Spotlights

Top teams often showcase innovative strategies:

- Hybrid Masters: Some combine the broad knowledge of LLMs (perhaps to suggest possible rules) with precise, rule-based solvers or constraint satisfaction systems to verify and execute the solution.

- Code-First Innovators: Others focus on generating Python code (or a similar symbolic representation) that directly models the grid transformations observed in the examples.
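Both patterns can be sketched as propose, verify, select: candidate programs (which might come from an LLM, though here they are hard-coded strings) are executed on the training pairs, only those that reproduce every output are kept, and up to two distinct predictions become the pass@2 attempts. The `transform(grid)` naming convention, the candidates, and the task are assumptions for this example. Running generated code with `exec` is shown only for brevity; real systems should sandbox it.

```python
# Hypothetical model-generated programs; each is expected to define transform(grid).
CANDIDATE_SOURCES = [
    "def transform(grid):\n    return [row[::-1] for row in grid]",
    "def transform(grid):\n    return [[c if c else 8 for c in row] for row in grid]",
    "def transform(grid):\n    return [[8 if c == 0 else c for c in row] for row in grid]",
    "def transform(grid):\n    return grid[0]",  # buggy: returns a row, not a grid
]


def compile_candidate(source):
    """Execute candidate source and return its transform function, or None."""
    namespace = {}
    try:
        exec(source, namespace)  # real systems should sandbox untrusted code
        return namespace["transform"]
    except Exception:
        return None


def fits(fn, train_pairs):
    """True if fn reproduces every demonstration output exactly."""
    try:
        return all(fn(p["input"]) == p["output"] for p in train_pairs)
    except Exception:
        return False


def two_attempts(task, sources):
    """Return up to two distinct predictions for the first test input,
    taken in proposal order from programs that fit all train pairs."""
    test_input = task["test"][0]["input"]
    attempts = []
    for src in sources:
        fn = compile_candidate(src)
        if fn is None or not fits(fn, task["train"]):
            continue
        try:
            pred = fn(test_input)
        except Exception:
            continue
        if pred not in attempts:
            attempts.append(pred)
        if len(attempts) == 2:
            break
    return attempts


task = {
    "train": [
        {"input": [[0, 1], [2, 0]], "output": [[8, 1], [2, 8]]},
        {"input": [[3, 0]], "output": [[3, 8]]},
    ],
    "test": [{"input": [[0, 0, 4]]}],
}
print(two_attempts(task, CANDIDATE_SOURCES))  # [[[8, 8, 4]]]
```

The second and third programs are written differently but agree on the test input, so deduplication leaves a single attempt; a fuller system would fill the second slot with its next-best hypothesis.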

Failure Analysis

Understanding why models fail is just as important:

- Why Pure LLMs Struggle: As mentioned, models like GPT-4 often lack the innate spatial reasoning and strong abstraction capabilities needed for ARC's visual grid tasks.

They are trained primarily on text, not on inferring abstract visual rules from a few examples.

- The Cost of Brute Force: Simple approaches like trying every possible transformation (exhaustive search) quickly become computationally infeasible for most ARC tasks because the number of candidate rules is vast. Efficiency matters.
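A back-of-the-envelope count shows how fast exhaustive search blows up. If a solver composes programs from a library of k primitives, there are k^d programs of length exactly d, and the total up to length D is the geometric sum (k^(D+1) - k) / (k - 1). The primitive counts and the one-million-checks-per-second throughput below are illustrative assumptions, not measurements of any real system.

```python
def programs_up_to(k, max_depth):
    """Number of primitive sequences of length 1..max_depth over k primitives."""
    return sum(k ** d for d in range(1, max_depth + 1))


for k in (10, 50):
    for depth in (2, 4, 6):
        print(f"k={k:>2}, depth<={depth}: {programs_up_to(k, depth):,} programs")

EVALS_PER_SECOND = 1_000_000  # assumed throughput of program checks
hours = programs_up_to(50, 6) / EVALS_PER_SECOND / 3600
print(f"{hours:.1f} hours for one task at depth <= 6")
```

With 50 primitives and programs up to six steps long, the count reaches about 15.9 billion, or roughly 4.4 hours for a single task at the assumed throughput, far beyond a per-task budget measured in cents.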

The Future of AI Evaluation

ARC-AGI-2 is a critical step, but the evaluation of AI reasoning will continue to evolve.

Beyond ARC-AGI-2

Future benchmarks might incorporate:

- Multimodal Reasoning: Tasks involving text, images, sound, and even basic physics understanding simultaneously.

- Embodied AI Challenges: Testing reasoning in simulated or real robots that need to interact with their environment to solve problems.

Ethical Implications

As AI gets better at abstract reasoning, we must consider the implications:

- Risks of Superhuman Abstraction: What happens if AI systems significantly outperform humans in core reasoning abilities? Understanding and managing these powerful systems becomes crucial.

- Focus on Transparency: The ARC Prize emphasizes openness. Requiring code submissions and promoting reproducible results helps the community understand how successful models work, which is vital for responsible development.

How to Get Started Today

You can jump into the ARC-AGI-2 challenge now.

Beginner’s Toolkit

- Join the Kaggle competition (runs until Nov 3, 2025).

- Download the public training (1000 tasks) and evaluation (120 tasks) sets.

- Study the compute constraints (~$0.42/task, no internet).

- Explore starter notebooks on Kaggle and community resources (forums, Discord).
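Once the public sets are downloaded, a short loader is enough to start experimenting. This sketch assumes the layout of the public ARC-AGI-2 GitHub repository, with one JSON file per task under `data/training/` and `data/evaluation/`; the Kaggle copy may package the same tasks differently (for example as combined challenge and solution files), so adjust paths to whatever you actually downloaded. The clone location and the `load_tasks` and `summarize` helpers are hypothetical.

```python
import json
from pathlib import Path


def load_tasks(split_dir):
    """Load every *.json task in a directory, keyed by file stem (the task ID)."""
    tasks = {}
    for path in sorted(Path(split_dir).glob("*.json")):
        with path.open() as f:
            tasks[path.stem] = json.load(f)
    return tasks


def summarize(tasks):
    """Count tasks and demonstration pairs, and find the largest input grid side."""
    n_train_pairs = sum(len(t["train"]) for t in tasks.values())
    sides = [
        max(len(pair["input"]), len(pair["input"][0]))
        for t in tasks.values()
        for pair in t["train"] + t["test"]
    ]
    return {
        "tasks": len(tasks),
        "train_pairs": n_train_pairs,
        "max_input_side": max(sides, default=0),
    }


if __name__ == "__main__":
    root = Path("ARC-AGI-2/data")  # assumed clone location
    for split in ("training", "evaluation"):
        print(split, summarize(load_tasks(root / split)))
```

If the directories are present, the printed task counts should match the 1000 training and 120 evaluation tasks listed above; a missing directory simply yields zero tasks.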

Pro Tips

- Focus on building models that can infer patterns and rules efficiently without prior exposure to highly similar examples.

- Use the interactive ARC Playground online to build intuition.

- Analyze the specific failure modes mentioned (Symbolic Interpretation, Compositional Reasoning, Context-Sensitivity).

Is Your Model Ready?

ARC-AGI-2 serves as a stark "reality check." The gap between humans (every calibrated task was solved by at least two people) and the best AI systems (under 4%) suggests we are still far from achieving Artificial General Intelligence.

As François Chollet highlighted, ARC-AGI-2 is a better measure of intelligence than its predecessor because it emphasizes efficiency and resists brute force. It suggests that simply scaling current AI models won't be enough.

The challenge is clear. Testing your model against ARC-AGI-2 shows you exactly where it stands on the path toward true reasoning ability. Can your model bridge the gap?

FAQs

What is ARC-AGI-2?

ARC-AGI-2 is an advanced benchmark designed to test and evaluate the reasoning capabilities of AI models, focusing on abstraction and problem-solving skills.

How does ARC-AGI-2 measure reasoning?

It assesses AI models by presenting complex tasks that require logical thinking, pattern recognition, and adaptability rather than just memorization.

Why is ARC-AGI-2 important for AI development?

It helps researchers understand if AI models can truly reason, generalize knowledge, and solve novel problems, making them more reliable for real-world applications.
