All about deep learning models that you should know

Explore deep learning essentials, from neural network models like CNNs, RNNs, and GANs to applications in vision, language processing, and robotics. Learn how methods such as transfer learning and dropout improve model accuracy and help prevent overfitting.

In today's technologically advanced world, deep learning has earned a special place alongside AI and machine learning, and it has given rise to a range of new techniques.

From computer vision to automated robots, deep learning models are being used in one way or another almost everywhere. In this blog, we explain in detail what deep learning models are, how they work, where they are applied, and which models are the most widely used.

Table of Contents

- What is Deep Learning?

- Methods of Using Deep Learning

- How Does Deep Learning Operate?

- Applications of Deep Learning Model

- Most Common Deep Learning Models

- Conclusion

What is Deep Learning?

Deep learning is a machine learning approach that teaches computers to learn from examples, much as people do. It is a key technology behind driverless cars, allowing them to recognize a stop sign or tell a pedestrian apart from a lamppost.

Deep learning has recently attracted a lot of interest, and for good reason: it is achieving results that were previously out of reach.

In deep learning, a computer model learns to perform classification tasks directly from images, text, or sound. Deep learning models can achieve state-of-the-art accuracy, sometimes exceeding human-level performance. They are trained using large sets of labeled data and neural network architectures that contain many layers.

Methods of using deep learning

Robust deep learning models can be built using a variety of techniques, including dropout, learning rate decay, transfer learning, and training from scratch.

1. Learning rate decay:

The learning rate is a hyperparameter, set before training begins, that controls how much the model's weights change in response to the estimated error each time they are updated. A learning rate that is too high can make training unstable or cause the model to settle on a suboptimal set of weights. A learning rate that is too low can lead to a long training process that may get stuck.

Learning rate decay, also known as learning rate annealing or adaptive learning rates, is the technique of adjusting the learning rate during training to improve performance and reduce training time.

Among the simplest and most popular adjustments are schedules that gradually reduce the learning rate as training progresses.
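As a minimal sketch, here are two common decay schedules written as plain Python functions. The function names and the default values of `drop`, `epochs_per_drop`, and `k` are illustrative assumptions, not values taken from any particular framework.

```python
import math


def step_decay(initial_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Step decay: multiply the learning rate by `drop` every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


def exponential_decay(initial_lr, epoch, k=0.1):
    """Exponential decay: shrink the learning rate smoothly, lr = lr0 * exp(-k * epoch)."""
    return initial_lr * math.exp(-k * epoch)


# Learning rates for a few epochs, starting from 0.1.
for epoch in (0, 10, 20, 30):
    print(epoch, step_decay(0.1, epoch), exponential_decay(0.1, epoch))
```

In a training loop you would compute the new rate at the start of each epoch and hand it to the optimizer. Step decay keeps the rate constant for a while and then cuts it sharply, while exponential decay lowers it a little every epoch; deep learning frameworks also provide built-in schedulers for the same purpose.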

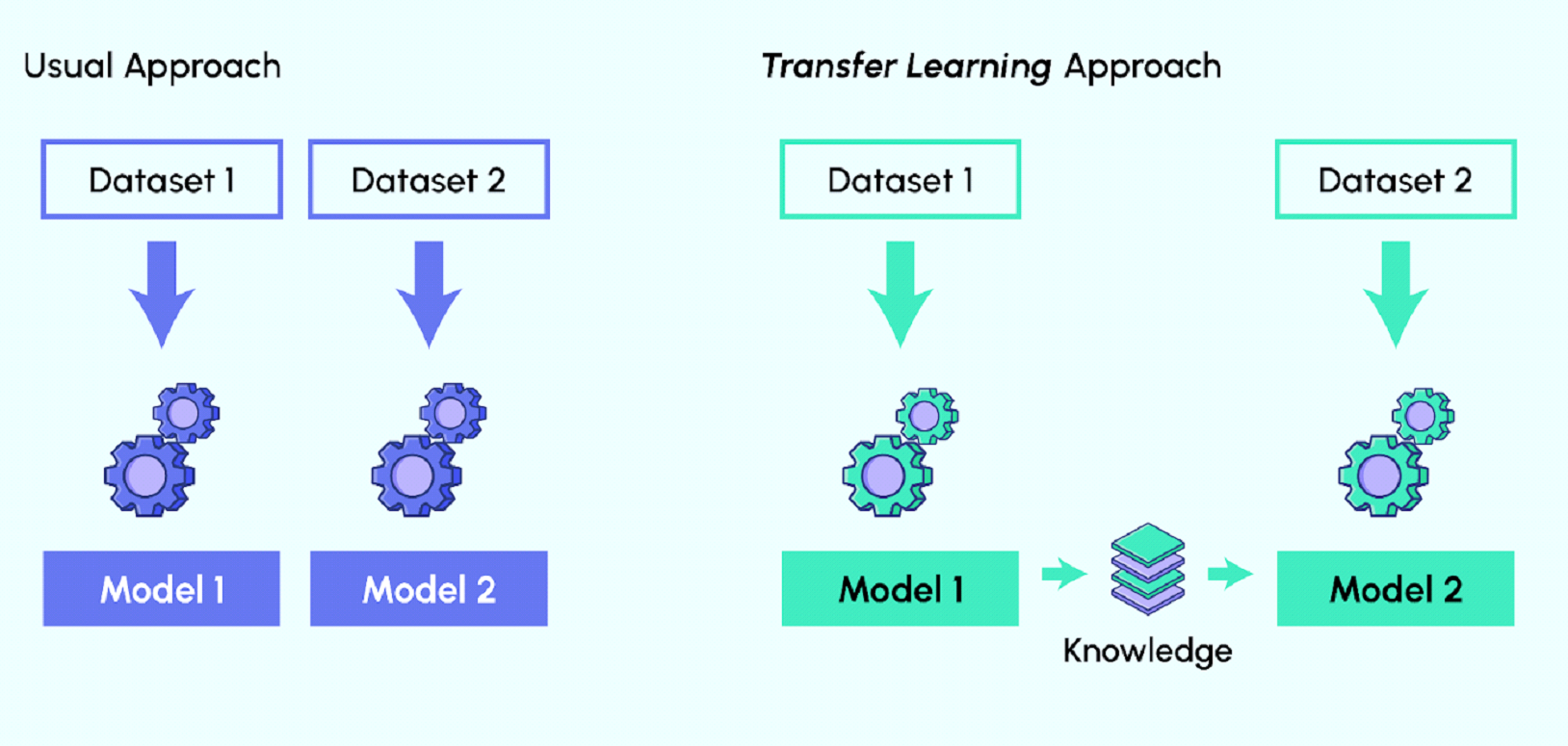

2. Transfer Learning:

Transfer Learning Approach

This technique involves fine-tuning a model that has already been trained, and it requires access to the network's internals. Users first feed new data containing previously unknown classes into the existing network.

Once the network has been adjusted, it can perform new tasks with more specific categorization abilities. The advantage of this approach is that it needs much less data than the others, which cuts computation time down to minutes or hours.
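The sketch below illustrates one common variant of transfer learning, sometimes called feature extraction: the pretrained layers are frozen and only a new output layer is trained on the small target dataset. It is a minimal NumPy sketch under stated assumptions: the "pretrained" weights are random placeholders standing in for weights you would load from a real checkpoint, and the target dataset and all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Placeholder for a layer already trained on a large source task.
# In practice these weights would be loaded from a checkpoint; here they are
# random so the sketch runs on its own.
pretrained_W = rng.normal(0.0, 1.0, size=(4, 16))
pretrained_b = np.zeros(16)


def extract_features(x, W, b):
    """Frozen base: reuse the pretrained ReLU layer without updating it."""
    return np.maximum(0.0, x @ W + b)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_new_head(features, y, lr=0.05, epochs=300):
    """Fit a new logistic-regression output layer on top of the frozen features."""
    w = np.zeros(features.shape[1])
    b = 0.0
    losses = []
    for _ in range(epochs):
        p = sigmoid(features @ w + b)
        # Binary cross-entropy; the small epsilon avoids log(0).
        loss = -np.mean(y * np.log(p + 1e-12) + (1 - y) * np.log(1 - p + 1e-12))
        losses.append(loss)
        grad = p - y  # gradient of the loss with respect to the logits
        w -= lr * features.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b, losses


# Small, hypothetical target dataset: label is 1 when the first input is positive.
x_target = rng.normal(size=(40, 4))
y_target = (x_target[:, 0] > 0).astype(float)

W_before = pretrained_W.copy()
features = extract_features(x_target, pretrained_W, pretrained_b)
w_head, b_head, losses = train_new_head(features, y_target)
```

Only the new head's `w_head` and `b_head` are learned; the base weights stay exactly as they were, which is why this style of transfer learning needs far less labeled data than training the whole network. Full fine-tuning goes a step further and also updates some of the base layers, usually with a small learning rate.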

3. Training from Scratch:

This method requires gathering a large labeled dataset and designing a network architecture that can learn the features and the model. It is especially useful for new applications and for applications with many output categories. However, because it needs a lot of data and can take days or weeks to train, it is a less common approach.

4. Dropout:

This approach addresses overfitting in neural networks with many parameters by randomly dropping units, along with their connections, during training. Dropout has been shown to improve neural network performance on supervised learning tasks in areas such as speech recognition, document classification, and computational biology.
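Here is a minimal NumPy sketch of dropout applied to a layer's activations. It uses the widely used "inverted" variant, which scales the surviving units during training so nothing has to change at inference time; the function name and the drop probability of 0.5 are illustrative assumptions.

```python
import numpy as np


def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout: zero each unit with probability p_drop during training.

    Surviving units are scaled by 1 / (1 - p_drop) so the expected activation
    stays the same; at inference time the input is returned unchanged.
    """
    if not training or p_drop == 0.0:
        return activations
    keep_mask = rng.random(activations.shape) >= p_drop  # True = keep this unit
    return activations * keep_mask / (1.0 - p_drop)


rng = np.random.default_rng(42)
h = np.ones((4, 10))                           # a batch of hidden-layer activations
h_train = dropout(h, 0.5, rng)                 # entries become either 0.0 or 2.0
h_eval = dropout(h, 0.5, rng, training=False)  # identical to h
```

Because a different random mask is drawn on every training step, no single unit can rely on the presence of specific other units, which is what discourages the co-adapted features behind overfitting.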

How does Deep Learning operate?

Deep learning models are known for learning their own representations of data, and they rely on artificial neural networks (ANNs) that loosely simulate how the brain processes information.

During training, the algorithms use unknown elements in the input distribution to extract features, group objects, and discover useful patterns in the data. This happens at multiple levels, with each layer building on the output of the one before it, much like training machines to learn on their own.
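The "multiple levels" idea can be sketched as a forward pass through a stack of layers, where each layer transforms the previous layer's output. This is a minimal NumPy sketch: the layer sizes are arbitrary and the weights are random placeholders, since a real model would learn them during training.

```python
import numpy as np


def relu(z):
    return np.maximum(0.0, z)


def forward(x, layers):
    """Pass x through a stack of (weights, bias) layers.

    Each layer re-represents the previous layer's output, so later layers
    work on increasingly abstract features. Returns every intermediate output.
    """
    outputs = [x]
    for W, b in layers:
        x = relu(x @ W + b)
        outputs.append(x)
    return outputs


rng = np.random.default_rng(1)
# Hypothetical network: 8 inputs -> 16 -> 8 -> 3 output features.
sizes = [8, 16, 8, 3]
layers = [(rng.normal(0.0, 0.5, (m, n)), np.zeros(n)) for m, n in zip(sizes[:-1], sizes[1:])]

batch = rng.normal(size=(5, 8))  # 5 examples with 8 input values each
outs = forward(batch, layers)
print([o.shape for o in outs])   # [(5, 8), (5, 16), (5, 8), (5, 3)]
```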

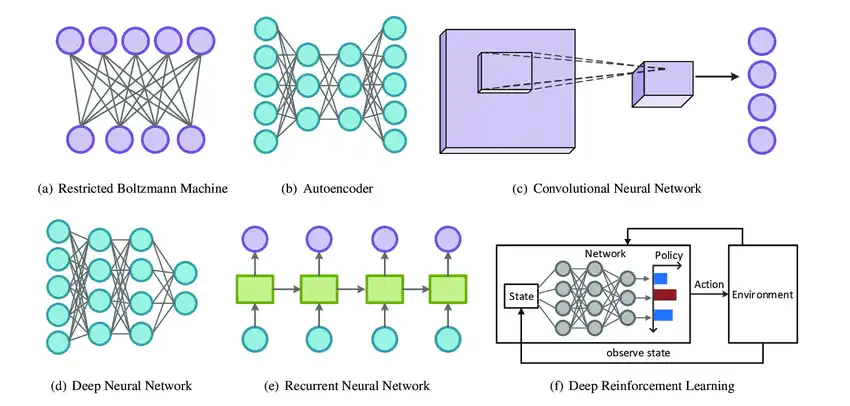

Deep learning models use a variety of algorithms. No network is considered perfect, but some algorithms are better suited to particular tasks than others, so a solid understanding of the major algorithms helps you choose the right one.

Applications of Deep Learning Model

Here are some of the popular applications of deep learning models that you should know:

1. Computer vision

Deep learning models are widely used in computer vision. Deep neural networks make state-of-the-art object detection, image classification, image reconstruction, and image segmentation possible.

They can even enable a computer to recognize handwritten digits. In other words, deep learning uses neural networks to let machines mimic how the human visual system works.

2. Deep learning-based bots

For example, Nvidia researchers have created an AI system that lets robots learn tasks by observing human demonstrations. Household cleaning robots that act on inputs from several different AI sources are also fairly common.

Deep learning systems help robots carry out tasks based on input from multiple AI models, much as the human brain decides on actions based on prior experience and sensory input.

3. Automated translation

Automated translation existed before deep learning models were introduced. However, deep learning enables machines to produce better translations with a level of accuracy that was previously lacking.

Deep learning also supports translation from images, a new capability that would not have been possible with conventional text-based translation.

4. Customer interaction

Many businesses already use machine learning to improve the customer experience; online self-service platforms are a good example. A growing number of businesses also rely on deep learning models to build reliable workflows.

Most of us are now familiar with how businesses use chatbots, and we can expect further advances in this area as deep learning matures.

5. Autonomous vehicles

The next time you see an autonomous vehicle on the road, keep in mind that many AI models are running at once. Some models identify street signs, while others detect pedestrians. A single car may rely on information from a large number of AI models as it drives. Many people believe that rides in AI-driven cars are safer than rides with human drivers.

6. Adding colors to media

Adding color to black-and-white video used to be one of the most time-consuming tasks in media production. Deep learning algorithms and artificial intelligence have made it easier than ever to colorize black-and-white photos and films. Hundreds of monochrome images are being converted to color as you read this.

7. Robots using deep learning

Deep learning has many effective applications in robotics, from models that can teach a robot a task simply by watching a human perform it, to cleaning robots that gather information from several other AI systems before acting.

Just as the human brain processes past experience, current sensory input, and any other available data, deep learning models help robots act on input from many different AI systems.

8. Language recognition

We are in the early stages of deep learning systems that can distinguish between dialects. For example, a system first determines that someone is speaking English and then identifies which dialect they are using.

Once the language has been identified, another AI that specializes in that language handles further processing. None of these stages requires human involvement.

Most common Deep Learning Models

Now, let's explore some of the top deep-learning models:

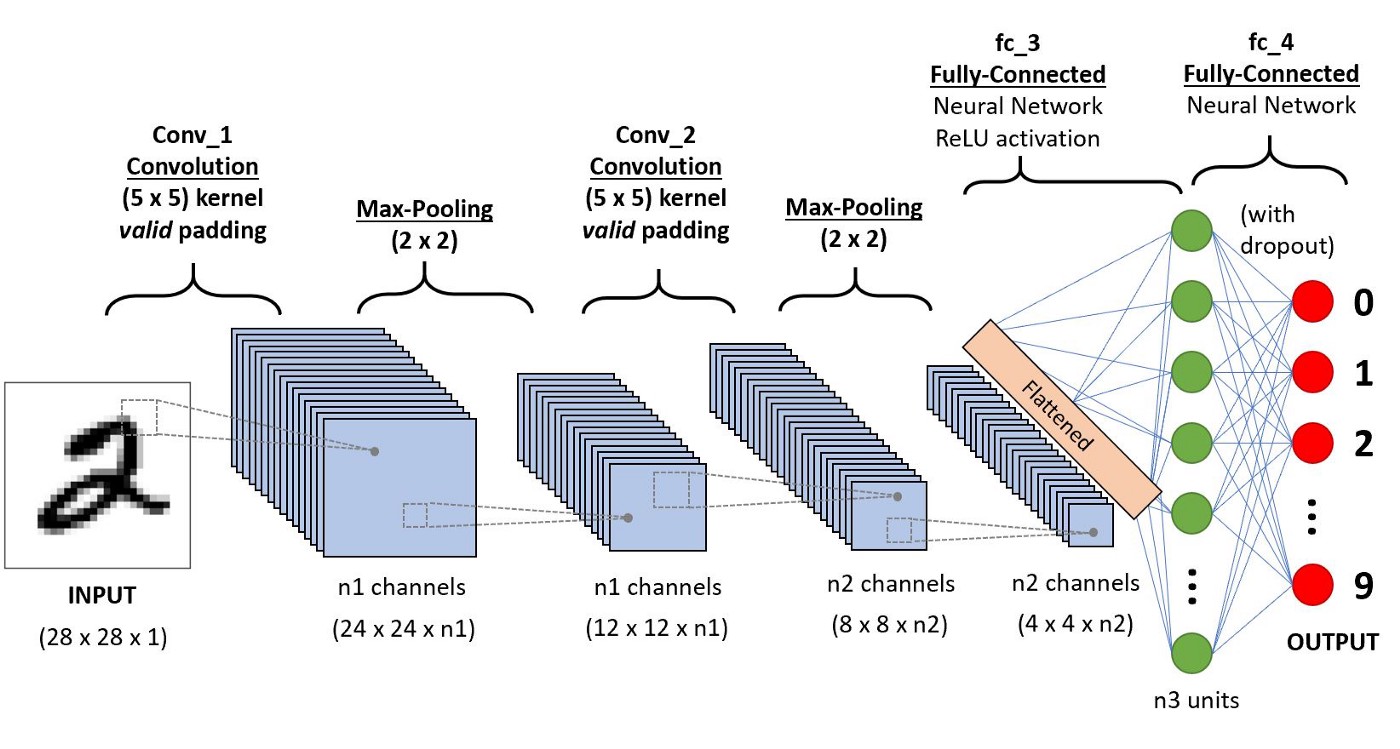

1. Convolutional Neural Networks (CNNs)

CNNs, also known as ConvNets, consist of multiple layers and are mainly used for image processing and object detection. Yann LeCun developed the first CNN in 1988, when it was called LeNet. It was used to recognize characters such as ZIP codes and digits.

Structure of CNN

Use of CNNs

CNNs are used to identify satellite images, process medical images, forecast time series, and detect anomalies.
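The core operation of a CNN's convolutional layer is sliding a small kernel over the input and taking a weighted sum at each position. The NumPy sketch below shows this "valid" convolution on a tiny image. Strictly, it computes cross-correlation (the kernel is not flipped), which is what deep learning frameworks such as PyTorch compute under the name "convolution". The kernel here is hand-written for illustration; a real CNN learns its kernel values during training.

```python
import numpy as np


def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation), as used in CNN layers."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# A 5x5 image with a vertical edge: dark left columns, bright right columns.
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
# Hand-written vertical-edge detector.
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)
feature_map = conv2d(image, kernel)
# → [[3, 3, 0], [3, 3, 0], [3, 3, 0]]: strong response only where the edge is.
```

Stacking many such learned kernels, with nonlinearities and pooling in between, is what lets a CNN build up from edges to shapes to whole objects.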

2. Generative Adversarial Networks (GANs)

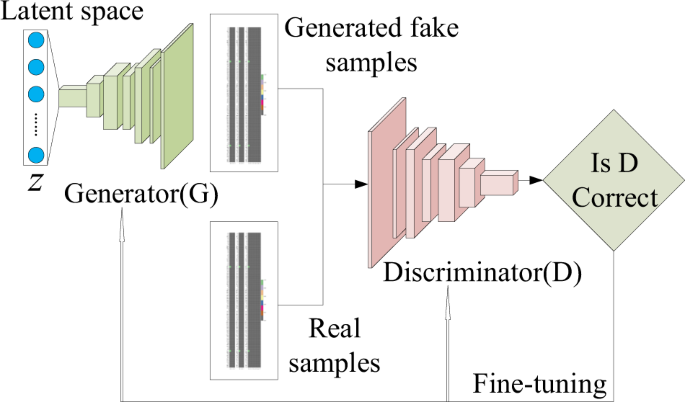

Structure of GAN

GANs are generative deep learning algorithms that create new data points resembling the training data. A GAN has two components: a generator, which learns to produce fake data, and a discriminator, which learns from that fake data and tries to tell it apart from real data.

GANs have become more widely used over time. They are used to enhance astronomical images and to simulate gravitational lensing for dark-matter research. Video game developers use GANs to upscale low-resolution 2D textures from classic games to 4K or higher resolutions.

Use of GANs

GANs help generate realistic images and cartoon characters, create photographs of human faces, and render 3D objects.

How do GANs function?

- The discriminator learns to distinguish between real sample data and the fake data produced by the generator.

- During initial training, the generator produces fake data, and the discriminator quickly learns to recognize that it is fake.

- The GAN sends the results to both the generator and the discriminator so that each model can be updated.
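The feedback loop described above is usually written as a two-player minimax game. In the standard formulation, the discriminator $D$ outputs the probability that its input is real, the generator $G$ maps random noise $z$ to a fake sample, and training alternates between maximizing this value over $D$ and minimizing it over $G$:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The first term rewards the discriminator for labeling real samples as real, and the second rewards it for labeling generated samples as fake, while the generator tries to push $D(G(z))$ toward 1. In practice, the generator is often trained to maximize $\log D(G(z))$ instead, because that version gives it stronger gradients early in training, when the discriminator easily rejects its samples.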

3. Recurrent Neural Networks (RNNs)

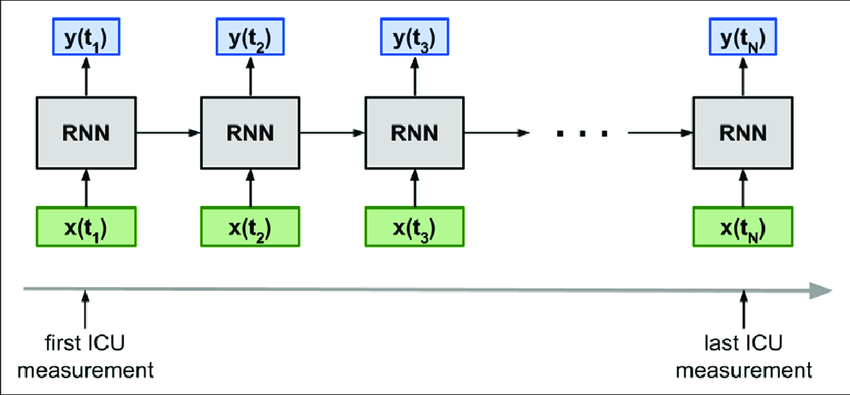

Structure of RNNs

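RNNs have connections that form directed cycles, which allow the output from the previous step to be fed back as input to the current step.

Because this output is carried forward as an internal state, the network can remember earlier inputs. Long Short-Term Memory networks (LSTMs), a widely used type of RNN, add a gated internal memory that helps them retain information over longer sequences.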
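A minimal NumPy sketch of a basic (Elman-style) RNN makes the feedback loop concrete: the hidden state `h` computed at one step is fed back in at the next. The sizes and random weights are illustrative placeholders, and this sketch does not include the gating used by LSTMs.

```python
import numpy as np


def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a basic RNN over a sequence and return the hidden state at each step.

    The previous hidden state h is fed back at every step, so each state
    depends on the current input and, through h, on all earlier inputs.
    """
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in inputs:
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.array(states)


rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W_xh = rng.normal(0.0, 0.5, (hidden_size, input_size))
W_hh = rng.normal(0.0, 0.5, (hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

seq_a = rng.normal(size=(5, input_size))  # a sequence of 5 time steps
seq_b = seq_a.copy()
seq_b[0] += 1.0                           # change only the first time step

states_a = rnn_forward(seq_a, W_xh, W_hh, b_h)
states_b = rnn_forward(seq_b, W_xh, W_hh, b_h)
# The final hidden states differ: the change at step 0 is "remembered".
```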

Use of RNNs

Natural language processing, time series analysis, handwriting recognition, and computational linguistics are all common applications for RNNs.

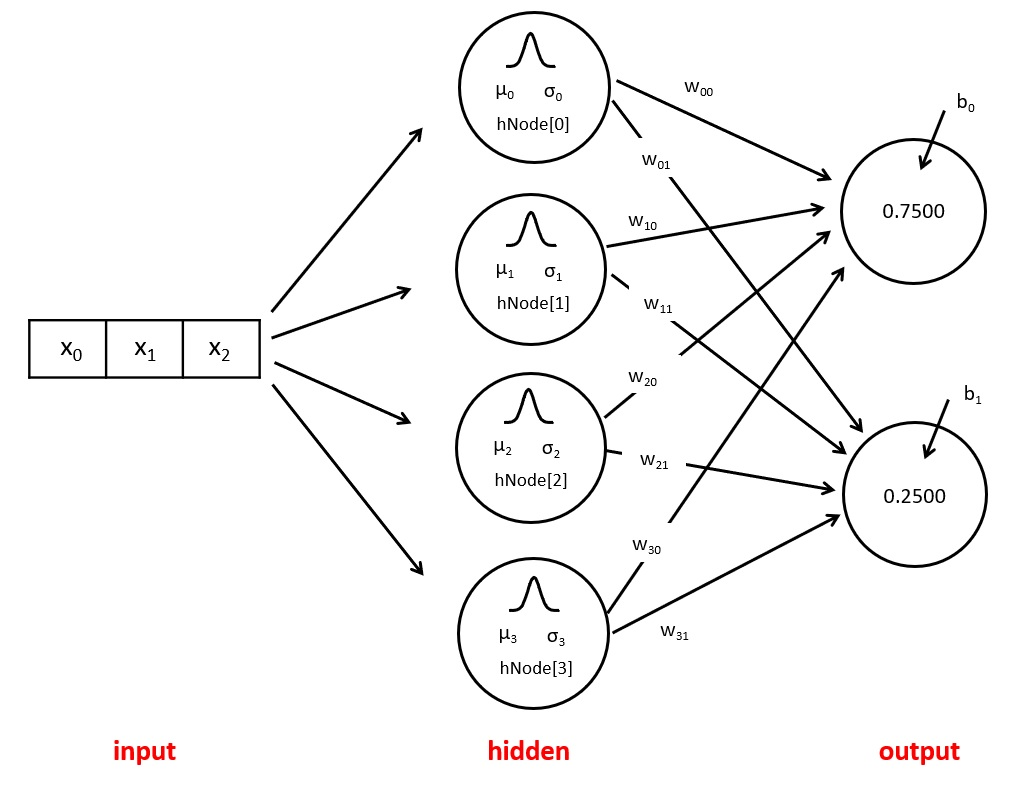

4. Radial Basis Function Networks (RBFNs)

Radial Basis Function Networks (RBFNs) are a special type of feedforward neural network that use radial basis functions as activation functions.

Structure of RBFNs

Use of RBFNs

They have an input layer, a hidden layer, and an output layer, and are typically used for classification, regression, and time-series prediction.
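The NumPy sketch below shows the RBFN structure on a small regression task: a hidden layer of Gaussian radial basis functions followed by a linear output layer. Several assumptions are made for illustration: the centers are evenly spaced (they are often chosen by clustering or sampling the data instead), the width parameter `gamma` is picked by hand, and the output weights are fitted with least squares, which is one common way to train the output layer.

```python
import numpy as np


def rbf_features(x, centers, gamma):
    """Hidden layer: Gaussian RBFs, exp(-gamma * ||x - c||^2) for each center c."""
    sq_dist = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-gamma * sq_dist)


def fit_rbfn(x, y, centers, gamma):
    """Fit the linear output layer by least squares on the hidden activations."""
    phi = rbf_features(x, centers, gamma)
    weights, *_ = np.linalg.lstsq(phi, y, rcond=None)
    return weights


def predict_rbfn(x, centers, gamma, weights):
    """Output layer: weighted sum of the RBF activations."""
    return rbf_features(x, centers, gamma) @ weights


# Hypothetical task: learn y = sin(x) on [0, 2*pi] from 20 samples.
x_train = np.linspace(0, 2 * np.pi, 20).reshape(-1, 1)
y_train = np.sin(x_train).ravel()
centers = np.linspace(0, 2 * np.pi, 8).reshape(-1, 1)  # 8 hidden units
gamma = 1.0

weights = fit_rbfn(x_train, y_train, centers, gamma)
y_pred = predict_rbfn(x_train, centers, gamma, weights)
```

Because each hidden unit responds mainly to inputs near its center, the network builds its output from a set of local "bumps", which is why RBFNs are well suited to smooth regression and time-series prediction.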

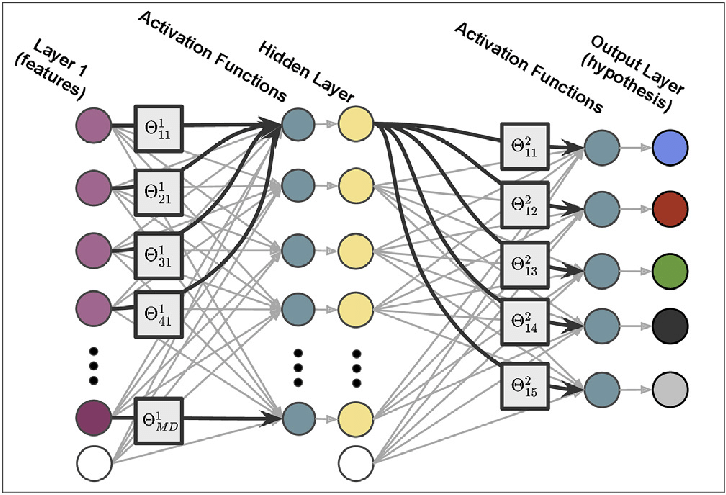

5. Multilayer Perceptrons (MLPs)

Structure of MLP

MLPs are an excellent place to start learning about deep learning. An MLP is a type of feedforward neural network made up of multiple layers of perceptrons with activation functions. MLPs consist of an input layer and an output layer that are fully connected, with hidden layers in between.

Use of MLPs

MLPs have one input layer and one output layer but may have several hidden layers. They can be used to build speech recognition, image recognition, and machine translation software.
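To show how an MLP learns, here is a minimal NumPy sketch that trains a one-hidden-layer MLP with backpropagation on XOR, a classic problem that a single perceptron cannot solve because the classes are not linearly separable. The hidden size, learning rate, number of epochs, and seed are arbitrary illustrative choices.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_mlp(x, y, hidden=8, lr=1.0, epochs=5000, seed=0):
    """Train a one-hidden-layer MLP with backpropagation (full-batch gradient descent)."""
    rng = np.random.default_rng(seed)
    params = {
        "W1": rng.normal(0.0, 1.0, (x.shape[1], hidden)), "b1": np.zeros(hidden),
        "W2": rng.normal(0.0, 1.0, (hidden, 1)), "b2": np.zeros(1),
    }
    n = len(x)
    losses = []
    for _ in range(epochs):
        # Forward pass: input -> fully connected tanh hidden layer -> sigmoid output.
        h = np.tanh(x @ params["W1"] + params["b1"])
        p = sigmoid(h @ params["W2"] + params["b2"])
        losses.append(-np.mean(y * np.log(p + 1e-12) + (1 - y) * np.log(1 - p + 1e-12)))

        # Backward pass: gradients of the binary cross-entropy loss.
        dz2 = (p - y) / n
        dh = dz2 @ params["W2"].T
        dz1 = dh * (1.0 - h ** 2)  # derivative of tanh
        grads = {"W2": h.T @ dz2, "b2": dz2.sum(axis=0),
                 "W1": x.T @ dz1, "b1": dz1.sum(axis=0)}
        for name in params:
            params[name] -= lr * grads[name]
    return params, losses


def predict(params, x):
    h = np.tanh(x @ params["W1"] + params["b1"])
    return sigmoid(h @ params["W2"] + params["b2"])


x_xor = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_xor = np.array([[0], [1], [1], [0]], dtype=float)
params, losses = train_mlp(x_xor, y_xor)
```

After training, `predict(params, x_xor)` returns one probability per input pair, and you can compare it against `y_xor` to check how well the network has learned XOR. The hidden layer is what makes this possible: it remaps the four points into a space where a single output unit can separate them.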

Conclusion

In conclusion, deep learning is a subset of machine learning that uses neural networks with multiple layers to extract complex patterns and features from data. It has transformed fields such as computer vision, natural language processing, and speech recognition.

Some of the most popular deep learning models include convolutional neural networks, recurrent neural networks, generative adversarial networks, radial basis function networks, and multilayer perceptrons, along with newer architectures such as transformers. Understanding the fundamentals of these models can help you leverage their power to build intelligent systems and solve real-world problems.

Labellerr offers a comprehensive data annotation platform to streamline your model training. Explore our features and request a demo.

If you found this insightful, stay tuned for more!
